As artificial intelligence (AI) models grow in complexity and scale, data centers face escalating heat-management challenges, and traditional air cooling is proving increasingly inadequate and energy-intensive. Racks in conventional facilities once drew about 20 kW; today's AI hardware can require more than 135 kW per rack, which makes efficient cooling critical to operational stability and cost control. The industry is shifting toward liquid cooling, which captures and removes heat directly at the source, avoids the inefficiency of circulating chilled air, and greatly improves the scalability and energy efficiency of next-generation AI infrastructure.
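
To make the jump from 20 kW to 135 kW per rack concrete, the following is a minimal sketch of the sensible-heat balance Q = ṁ · c_p · ΔT, comparing the volume of water and of air needed to carry away one rack's heat. The fluid properties are approximate textbook values. The 10 K coolant temperature rise and the helper name `volumetric_flow_m3_per_s` are assumptions chosen for illustration, not figures from any vendor.

```python
# Sensible-heat balance: Q = m_dot * c_p * delta_T
# Fluid properties are approximate; the 10 K temperature rise is an assumption.

WATER_CP = 4186.0      # J/(kg*K), approximate specific heat of water
WATER_DENSITY = 997.0  # kg/m^3, approximate density near room temperature
AIR_CP = 1005.0        # J/(kg*K), approximate specific heat of air
AIR_DENSITY = 1.2      # kg/m^3, approximate density near sea level at 20 C


def volumetric_flow_m3_per_s(heat_w, cp, density, delta_t_k):
    """Volume flow needed to carry heat_w watts at a delta_t_k temperature rise."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    mass_flow = heat_w / (cp * delta_t_k)  # kg/s
    return mass_flow / density             # m^3/s


if __name__ == "__main__":
    rack_heat_w = 135_000.0  # 135 kW rack, as cited in the text
    delta_t = 10.0           # assumed coolant temperature rise in kelvin

    water = volumetric_flow_m3_per_s(rack_heat_w, WATER_CP, WATER_DENSITY, delta_t)
    air = volumetric_flow_m3_per_s(rack_heat_w, AIR_CP, AIR_DENSITY, delta_t)

    print(f"water: {water * 60_000:.0f} L/min")  # 1 m^3/s = 60,000 L/min
    print(f"air:   {air:.1f} m^3/s")
    print(f"air/water volume ratio: {air / water:.0f}x")
```

Under these assumptions the water loop needs a few liters per second, while air needs on the order of ten cubic meters per second for the same rack. The ratio between the two depends only on the fluids' volumetric heat capacities, so it holds for any rack power and temperature rise.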

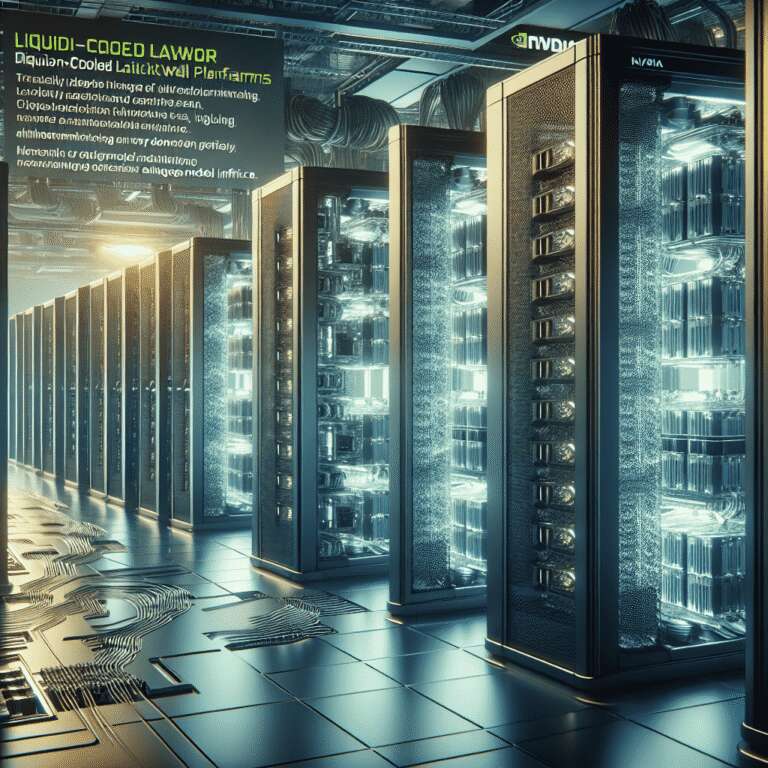

NVIDIA's GB200 NVL72 and GB300 NVL72 systems, both built on the Blackwell platform, exemplify this trend: they are rack-scale, liquid-cooled architectures designed for trillion-parameter language model inference and AI reasoning workloads. The reported gains over traditional air-cooled servers are substantial: the GB200 NVL72 achieves 40 times the compute density, 30 times the throughput, 25 times the energy efficiency, and more than 300 times the water efficiency. The GB300 NVL72 improves on these metrics further while reducing the need for mechanical chillers, which have historically accounted for up to 40% of a data center's electricity use. The result is significant operational cost savings and environmental benefits.

The transition to liquid cooling enables cooling strategies tailored to evolving data center requirements, including mechanical chillers, evaporative cooling, dry coolers, and pumped refrigerant systems. Liquid cooling reduces reliance on energy- and water-intensive cooling systems and supports higher operating water temperatures, which adds flexibility across climates and minimizes ecological impact. Innovations from industry leaders such as Vertiv, Schneider Electric, CoolIT Systems, and Boyd have lowered energy consumption, increased rack density, and improved system reliability for high-performance AI workloads.

Looking ahead, NVIDIA is advancing sustainability through initiatives such as COOLERCHIPS, a U.S. Department of Energy program, with the aim of developing modular data centers whose advanced cooling reduces cooling-related costs and environmental footprint even further. As AI's computational requirements continue to surge, widespread adoption of liquid cooling and high-density architectures is emerging as an essential strategy for building sustainable, efficient, and future-proof AI data centers that support ongoing advances in artificial intelligence and high-performance computing.