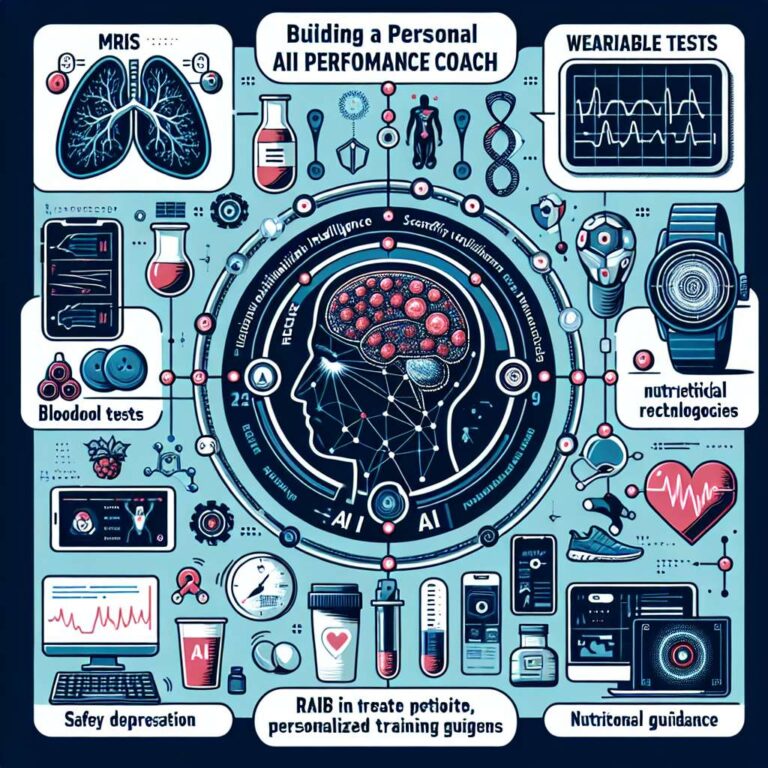

On the latest episode of How I AI, host Claire Vo interviews Lucas Werthein, the chief operating officer and co-founder of Cactus, about the personal AI health coach he built. Lucas assembled a system that pulls together MRIs, blood tests, wearable data, nutrition plans and journal entries to produce actionable recommendations for training, recovery and injury avoidance. He emphasizes that his problem was not collecting more data but synthesizing disparate sources into clear, personalized guidance.

The episode surfaces several practical lessons. First, data synthesis matters more than raw collection; Lucas’s coach connects siloed inputs to reveal patterns that specialists missed. Second, the “last mile” of optimization can come from unexpected sources once data is integrated. Third, safety guardrails are essential; Lucas programs explicit boundaries and anti-prompts so that the AI avoids pushing training volume past recovery limits, rejects unproven supplements and adheres to evidence-based guidance. He also predicts that in five years most people will use a personal AI coach between doctor visits, and that patient and doctor systems will communicate via their respective AI agents. Lucas expects that health institutions will eventually package clinical knowledge into trained models, citing organizations like Mayo Clinic as likely candidates to commercialize expert models.
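For readers who want to try the guardrail idea, here is a minimal sketch of how explicit boundaries and anti-prompts could be assembled into a system prompt. It is not Lucas's actual setup, which the episode recap does not spell out. The `Guardrails` class, the `build_system_prompt` function, the role text and the exact rule wording are all hypothetical and chosen only to mirror the three guardrails mentioned above.

```python
from dataclasses import dataclass, field


@dataclass
class Guardrails:
    """Hypothetical container for a coach's explicit rules (not from the episode)."""
    boundaries: list[str] = field(default_factory=list)    # things the coach must always do
    anti_prompts: list[str] = field(default_factory=list)  # things the coach must never do


def build_system_prompt(role: str, rails: Guardrails) -> str:
    """Join the role and the guardrails into one plain-text system prompt."""
    lines = [role, "", "Always:"]
    lines += [f"- {rule}" for rule in rails.boundaries]
    lines += ["", "Never:"]
    lines += [f"- {rule}" for rule in rails.anti_prompts]
    return "\n".join(lines)


# Illustrative rules only; the wording is an assumption based on the summary above.
rails = Guardrails(
    boundaries=[
        "Base recommendations on evidence-based guidance.",
        "Keep training volume within the user's recovery limits.",
    ],
    anti_prompts=[
        "Recommend unproven supplements.",
        "Push training volume past recovery limits.",
    ],
)

prompt = build_system_prompt("You are a personal health coach.", rails)
print(prompt)
```

Keeping the "always" and "never" lists separate from the role text makes the rules easy to review and update without rewriting the rest of the prompt.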

The newsletter also covers a second segment in which Claire describes building a Thanksgiving party hub with Lovable. She calls the approach “farm-to-table software” and demonstrates how combining Lovable for structure, Google Fonts for typography, Midjourney for imagery and ChatGPT prompts produces an intentional, handcrafted feel. Practical tips include restarting stalled requests in ChatGPT 5.1 and using curated Google Fonts pairings such as Homemade Apple and Raleway to elevate the design. Episodes are available on YouTube, Spotify and Apple Podcasts and run roughly 30 to 45 minutes per guest demo.