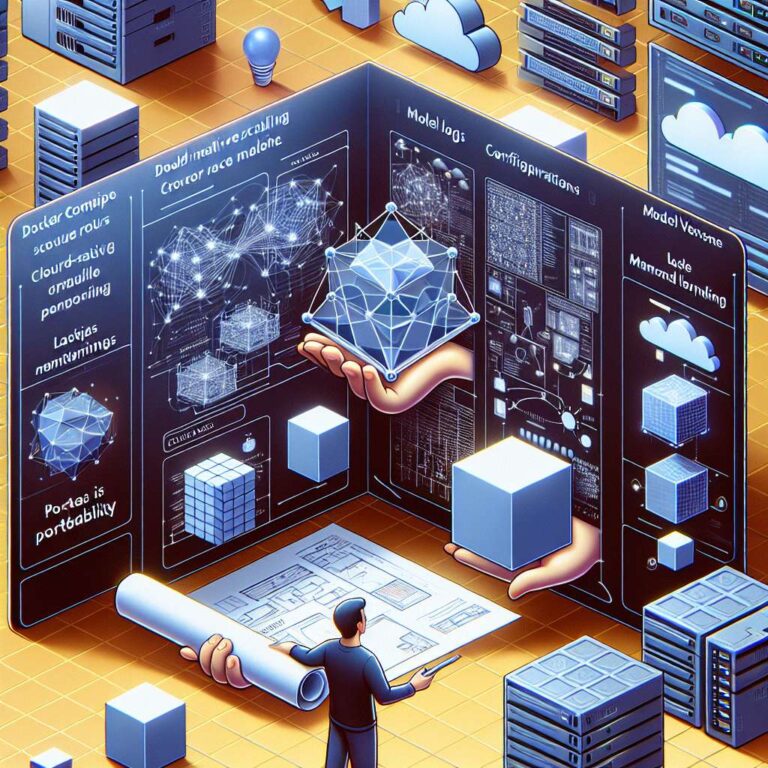

Docker Compose lets developers define AI models as first-class components of an application stack. The top-level `models` element in the Compose file declares model dependencies alongside the usual service definitions. The required models are then provisioned and managed together with the application services, which simplifies deployment and keeps the application portable across platforms that support the Compose specification, such as Docker Model Runner and certain cloud providers.
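As a minimal sketch of this layout, the Compose file below declares one service and one model side by side. The service name `chat-app` and image `my-chat-app` are hypothetical placeholders, and `ai/smollm2` is used only as an example model reference.

```yaml
# Minimal sketch: one service that depends on one model.
# "chat-app" and "my-chat-app" are hypothetical names for illustration.
services:
  chat-app:
    image: my-chat-app
    models:
      - llm            # refers to the entry under the top-level "models" key

models:
  llm:
    model: ai/smollm2  # example model reference; substitute your own
```

Keeping the model in its own top-level block, rather than inside the service, means several services can refer to the same model entry by name.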

This feature requires Docker Compose 2.38 or later and a platform that supports Compose models. Models are defined under the top-level `models` section, where each entry references a model image and can set configuration parameters such as context size and pass runtime flags to the inference engine. Services bind to models using either a short or a long syntax. With the short syntax, environment variables are generated automatically from the model name (e.g., `LLM_URL`); with the long syntax, the variable names can be chosen explicitly. The examples provided cover declaring language models and embedding models, as well as specifying cloud-optimized configurations using labels.
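The sketch below illustrates the model options and the two binding syntaxes in one file. Service names, image names, and the custom variable names are hypothetical, and the runtime flags are placeholders rather than real inference-engine flags. The keys `context_size`, `runtime_flags`, `endpoint_var`, and `model_var` follow the Compose models specification as understood here, so verify them against the Compose version in use.

```yaml
# Sketch only: service/image names and variable names are hypothetical.
services:
  # Short syntax: variable names are derived from the model name,
  # e.g. LLM_URL for the model entry "llm".
  chat-app:
    image: my-chat-app
    models:
      - llm

  # Long syntax: the service chooses its own variable names.
  search-app:
    image: my-search-app
    models:
      embedding-model:
        endpoint_var: EMBEDDING_URL   # variable that receives the model endpoint
        model_var: EMBEDDING_NAME     # variable that receives the model identifier

models:
  llm:
    model: ai/smollm2
    context_size: 1024                # context window size for this model
    runtime_flags:                    # passed through to the inference engine
      - "--a-flag"                    # placeholder flag, not a real option
      - "--another-flag=42"           # placeholder flag, not a real option

  embedding-model:
    model: ai/all-minilm
```

The short syntax suits applications that can adopt the generated names; the long syntax is useful when existing code already expects specific environment variables.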

Platform portability is a defining aspect of Compose models. When running locally with Docker Model Runner, the defined models are pulled and run, and the appropriate environment variables are injected into the containers. On cloud platforms that support Compose models, the same Compose file can instead make use of managed AI services, cloud-specific scaling, and enhanced monitoring, logging, and model versioning. This lets teams move between local and cloud environments with the same configuration and manage model access, configuration, and updates consistently. Further documentation covers the syntax, advanced options, and integration best practices in more detail.