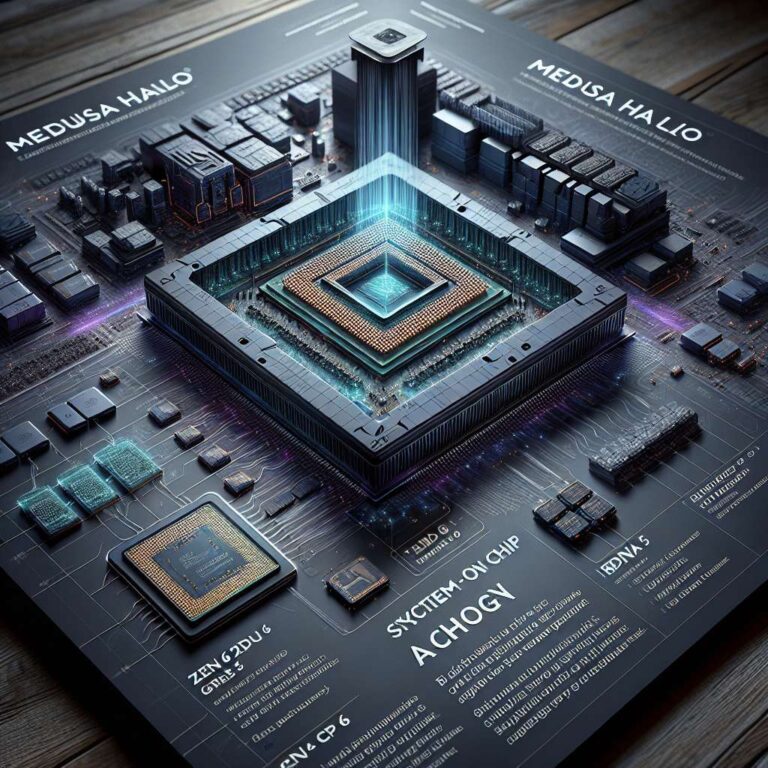

AMD’s next major refresh of its Ryzen AI MAX APUs, codenamed Medusa Halo, is expected to be among the first systems-on-chip to adopt LPDDR6 memory. Leaked details indicate the silicon could use a 384-bit LPDDR6 memory bus, which would give its new CPU and GPU configuration substantially more bandwidth. The platform is rumored to scale up to 24 Zen 6 CPU cores alongside 48 RDNA 5 or UDNA compute units for the integrated graphics. If these specifications hold, the combination of more cores and the added bandwidth of LPDDR6 could make Medusa Halo one of the best-performing APUs in its class at launch.

LPDDR6 is beginning to move from roadmap to validation, with Samsung and Innosilicon already shipping LPDDR6 parts to customers for testing. Innosilicon’s LPDDR6 offering is rated at 14.4 Gbps, significantly faster than the 10.7 Gbps of Samsung’s initial modules. That is a 1.5x increase in I/O speed over 9.6 Gbps LPDDR5X, along with improved efficiency. These gains explain why a bandwidth-hungry APU like Medusa Halo could benefit substantially from the new standard.
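To put the rumored 384-bit bus and the quoted speeds together, the sketch below computes raw peak bandwidth as bus width times per-pin data rate, divided by 8 bits per byte. It assumes the 384-bit width from the leak and simply tries each speed mentioned above, since the speed grade Medusa Halo would actually ship with is unknown; protocol overhead is ignored, so these are theoretical ceilings rather than measured figures.

```python
# Raw peak bandwidth = bus width (bits) * per-pin data rate (Gbps) / 8 bits per byte.
# Assumptions: the 384-bit width is the rumored Medusa Halo figure, and the
# speed grade it would use is unknown, so every speed quoted in the text is tried.
# Protocol overhead (non-data bits, refresh, etc.) is ignored.

BUS_WIDTH_BITS = 384  # rumored Medusa Halo memory bus width


def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Return raw peak bandwidth in GB/s (decimal gigabytes per second)."""
    return bus_width_bits * data_rate_gbps / 8


speeds_gbps = {
    "LPDDR5X @ 9.6 Gbps": 9.6,
    "Samsung LPDDR6 @ 10.7 Gbps": 10.7,
    "Innosilicon LPDDR6 @ 14.4 Gbps": 14.4,
}

for name, rate in speeds_gbps.items():
    bw = peak_bandwidth_gbs(BUS_WIDTH_BITS, rate)
    print(f"{BUS_WIDTH_BITS}-bit {name}: {bw:.1f} GB/s")

# The 1.5x figure is just the ratio of the per-pin speeds.
print(f"14.4 / 9.6 = {14.4 / 9.6:.2f}x")
```

On a 384-bit bus this works out to roughly 460.8 GB/s at 9.6 Gbps, 513.6 GB/s at 10.7 Gbps, and 691.2 GB/s at 14.4 Gbps.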

The LPDDR6 specification also brings architectural changes that further expand throughput. It widens each I/O group from 8 bits (one byte in LPDDR5X) to 12 bits, so a single LPDDR6 channel is 24 bits wide compared with 16 bits for LPDDR5X. As a result, a single 24-bit LPDDR6 channel delivers double the bandwidth of a single 16-bit LPDDR5X channel. To meet expected demand, Innosilicon is reportedly working with TSMC and Samsung to secure sufficient production capacity for its LPDDR6 IP, while Samsung is manufacturing its LPDDR6 memory in its own fabs. Together, these developments set the stage for high-bandwidth APUs such as Medusa Halo to adopt LPDDR6 at volume.
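One way to reproduce the per-channel doubling is sketched below. It pairs the 14.4 Gbps LPDDR6 and 9.6 Gbps LPDDR5X speeds quoted earlier with the 24-bit and 16-bit channel widths. It also assumes, beyond what the article states, that each LPDDR6 burst carries 288 bits on the wire of which 256 are user data, with the remainder used for metadata. Under those assumptions the raw wire rate is 2.25x, and the usable data rate comes out at exactly 2x.

```python
# Per-channel bandwidth comparison, LPDDR6 vs LPDDR5X.
# Assumptions (not stated in the article): the speeds pair 14.4 Gbps LPDDR6
# with 9.6 Gbps LPDDR5X, and 256 of every 288 bits in an LPDDR6 burst are
# user data (the rest is metadata). LPDDR5X is treated as carrying data only.

LPDDR5X_CHANNEL_BITS = 16
LPDDR5X_RATE_GBPS = 9.6

LPDDR6_CHANNEL_BITS = 24
LPDDR6_RATE_GBPS = 14.4
LPDDR6_DATA_FRACTION = 256 / 288  # assumed share of each burst that is user data


def channel_bandwidth_gbs(width_bits: int, rate_gbps: float,
                          data_fraction: float = 1.0) -> float:
    """Bandwidth of one channel in GB/s, scaled by the share of bits that are data."""
    return width_bits * rate_gbps * data_fraction / 8


lp5x = channel_bandwidth_gbs(LPDDR5X_CHANNEL_BITS, LPDDR5X_RATE_GBPS)
lp6_raw = channel_bandwidth_gbs(LPDDR6_CHANNEL_BITS, LPDDR6_RATE_GBPS)
lp6_data = channel_bandwidth_gbs(LPDDR6_CHANNEL_BITS, LPDDR6_RATE_GBPS,
                                 LPDDR6_DATA_FRACTION)

print(f"LPDDR5X x16 @ 9.6 Gbps:      {lp5x:.1f} GB/s")
print(f"LPDDR6 x24 @ 14.4 Gbps raw:  {lp6_raw:.1f} GB/s ({lp6_raw / lp5x:.2f}x)")
print(f"LPDDR6 x24 @ 14.4 Gbps data: {lp6_data:.1f} GB/s ({lp6_data / lp5x:.2f}x)")
```

The results are about 19.2 GB/s for LPDDR5X, 43.2 GB/s raw (2.25x) and 38.4 GB/s of user data (2.00x) for LPDDR6. If the real burst format or speed pairing differs, the ratio shifts accordingly.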