Today’s computer vision systems are effective at detecting visual events but often lack explanatory context and forward-looking reasoning. Agentic AI powered by vision language models (VLMs) can bridge that gap in three ways. It can translate pixels into rich, searchable metadata. It can verify alerts with context. It can also reason across modalities over long video and sensor archives. The article outlines three practical approaches that augment existing convolutional neural network (CNN)-based pipelines without replacing them wholesale.

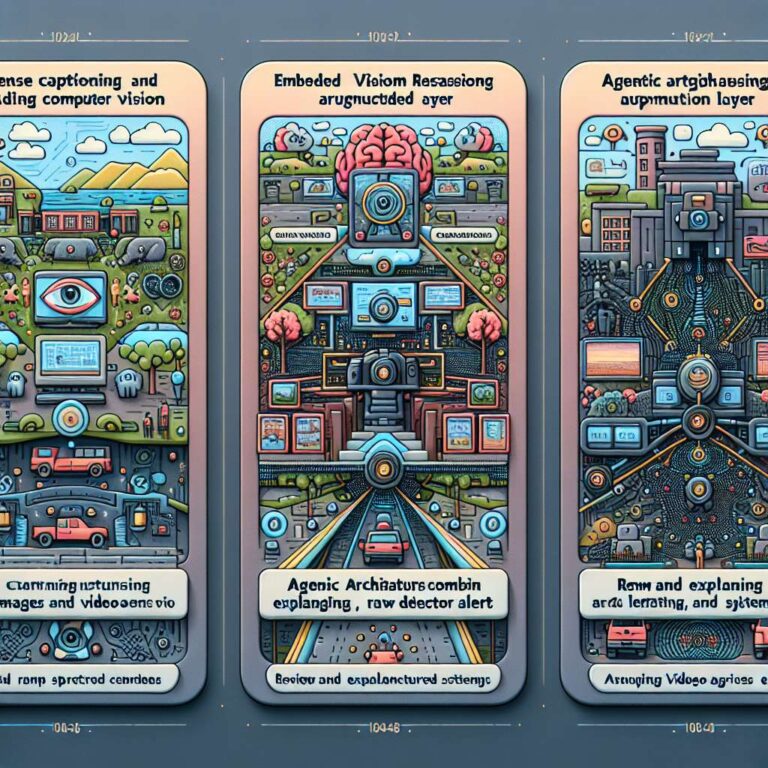

First, dense captioning turns unstructured images and video into detailed, searchable text. Embedding VLMs in applications produces metadata that supports flexible visual search beyond filenames or basic tags. Examples include UVeye, which processes over 700 million high-resolution images a month and uses VLMs to generate structured condition reports that improve defect detection, and Relo Metrics, which combines VLMs and computer vision to capture contextual sponsor impressions for real-time marketing value analysis. VLM-driven captions add transparency and support compliance, safety and quality control workflows.
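Once a VLM has produced captions, searching them can be as simple as a keyword index. The sketch below assumes captions already exist as a plain `dict` of image ID to text. The sample captions and the helper names `tokenize`, `build_index` and `search` are hypothetical. It builds an inverted index and answers queries that must match every keyword. It is not how any of the products above work.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    # Lowercase and split on anything that is not a letter or digit.
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(captions: dict[str, str]) -> dict[str, set[str]]:
    """Map each caption token to the set of image IDs whose caption contains it."""
    index: dict[str, set[str]] = defaultdict(set)
    for image_id, caption in captions.items():
        for token in tokenize(caption):
            index[token].add(image_id)
    return index


def search(index: dict[str, set[str]], query: str) -> set[str]:
    """Return IDs whose captions contain every query token (AND semantics)."""
    tokens = tokenize(query)
    if not tokens:
        return set()
    # Copy the first posting set so the index itself is never mutated.
    results = set(index.get(tokens[0], set()))
    for token in tokens[1:]:
        results &= index.get(token, set())
    return results


# Hypothetical VLM-generated captions standing in for real model output.
captions = {
    "img_001": "Silver sedan with a dent on the rear left door",
    "img_002": "Blue pickup truck, tires in good condition",
    "img_003": "White van with scratched rear bumper",
}
index = build_index(captions)
print(search(index, "rear dent"))  # {'img_001'}
```

A production system would more likely use embedding-based semantic search. Keyword matching keeps the example self-contained and shows why detailed captions search better than filenames.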

Second, VLM reasoning can augment CNN alerting to reduce false positives and add actionable context. Rather than replacing existing detectors, VLMs can review and explain alerts, describing where, how and why incidents occurred. Linker Vision applies this approach to verify critical city alerts across more than 50,000 smart city camera streams, enabling coordinated cross-department responses for traffic, utilities and first responders and improving municipal incident management.
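The review pattern described above keeps the CNN as the first-stage detector and adds a VLM as a second-stage reviewer. The sketch below is a minimal version of that pattern under stated assumptions. The VLM is a hypothetical callable that takes a camera ID, a timestamp and a prompt, and returns a `(confirmed, explanation)` pair. The `fake_vlm` stub stands in for a real model. The `min_confidence` threshold is an illustrative choice, not a documented setting.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Alert:
    camera_id: str
    label: str          # class predicted by the existing CNN detector
    confidence: float
    timestamp: str


@dataclass
class ReviewedAlert:
    alert: Alert
    explanation: str    # VLM's account of where, how and why


# Hypothetical VLM interface: (camera_id, timestamp, prompt) -> (confirmed, explanation).
VlmFn = Callable[[str, str, str], tuple[bool, str]]


def review_alerts(alerts: list[Alert], vlm: VlmFn,
                  min_confidence: float = 0.3) -> list[ReviewedAlert]:
    """Forward only VLM-confirmed alerts, each with an explanation attached."""
    reviewed = []
    for alert in alerts:
        if alert.confidence < min_confidence:
            continue  # too weak to spend a VLM call on
        prompt = (f"The detector reported '{alert.label}'. Is this correct? "
                  "If so, describe where, how and why it happened.")
        confirmed, explanation = vlm(alert.camera_id, alert.timestamp, prompt)
        if confirmed:
            reviewed.append(ReviewedAlert(alert, explanation))
        # Unconfirmed alerts are dropped as likely false positives.
    return reviewed


def fake_vlm(camera_id: str, timestamp: str, prompt: str) -> tuple[bool, str]:
    # Stand-in for a real VLM call; confirms only the cam-7 alert.
    if camera_id == "cam-7":
        return True, "Two cars collided at the intersection; lane 2 is blocked."
    return False, "Shadow on the road, no vehicle present."


alerts = [
    Alert("cam-7", "collision", 0.91, "2024-05-01T08:15:00"),
    Alert("cam-3", "collision", 0.62, "2024-05-01T08:16:30"),
    Alert("cam-9", "collision", 0.12, "2024-05-01T08:17:10"),
]
result = review_alerts(alerts, fake_vlm)
print([r.alert.camera_id for r in result])  # ['cam-7']
```

This design leaves the CNN's recall unchanged. The VLM only filters and annotates what the detector already flagged, so it adds context without retraining the original model.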

Third, agentic architectures combine VLMs with large language models, retrieval-augmented generation, computer vision and speech transcription to analyze complex, multichannel scenarios automatically. Feeding video directly into a single VLM is limited by the model's token context, so that kind of integration suits only short clips. Full agentic systems scale to lengthy archives and deliver timestamped, root-cause reports. Levatas uses such agents with Skydio X10 devices to inspect electric infrastructure for customers like American Electric Power. Eklipse applies VLM agents to produce gaming highlight reels up to ten times faster than legacy tools.
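One way an agent can work around a single model's token limit is to split a long recording into windows that each fit the budget. It describes each window separately and keeps the timestamps, so a downstream LLM can assemble a timestamped report. The sketch below shows only the windowing and timestamping step. Its assumptions are a fixed token cost per sampled frame and a fixed sampling rate. The numbers used (1 frame per second, 256 tokens per frame, a 16,384-token budget) are illustrative, not figures for any specific model. `describe` is a hypothetical stand-in for a per-window VLM call.

```python
import math
from typing import Callable


def plan_windows(duration_s: float, sample_fps: float,
                 tokens_per_frame: int, max_tokens: int) -> list[tuple[float, float]]:
    """Split a video into consecutive (start, end) windows that fit the token budget."""
    frames_per_window = max_tokens // tokens_per_frame
    if frames_per_window < 1:
        raise ValueError("a single frame exceeds the token budget")
    window_s = frames_per_window / sample_fps
    n_windows = math.ceil(duration_s / window_s)
    # The last window is clipped to the end of the video.
    return [(i * window_s, min((i + 1) * window_s, duration_s)) for i in range(n_windows)]


def fmt(t: float) -> str:
    """Format seconds as HH:MM:SS."""
    m, s = divmod(int(t), 60)
    h, m = divmod(m, 60)
    return f"{h:02d}:{m:02d}:{s:02d}"


def timestamped_report(windows: list[tuple[float, float]],
                       describe: Callable[[float, float], str]) -> list[str]:
    """Label each window's description with its time range."""
    return [f"[{fmt(a)}-{fmt(b)}] {describe(a, b)}" for a, b in windows]


# One hour of video, sampled at 1 frame/s, 256 tokens/frame, 16,384-token budget.
windows = plan_windows(3600, 1.0, 256, 16384)
report = timestamped_report(windows, lambda a, b: "no anomalies")
print(len(windows))  # 57
print(report[0])     # [00:00:00-00:01:04] no anomalies
```

With these assumed numbers each window covers 64 seconds, so one hour needs 57 calls. Aggregating those notes, for example with a retrieval step and an LLM, is what lets the full system reason across the whole archive.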

Developers can adopt multimodal models such as NV-CLIP, NVIDIA Cosmos Reason and Nemotron Nano V2. They can integrate VLMs through the event reviewer in the NVIDIA Blueprint for video search and summarization on the NVIDIA Metropolis platform. The blueprint supports custom agentic workflows that combine VLMs, large language models and retrieval systems to enable richer video analytics, smarter operations and scalable process compliance.