How I Built a Fully Automated News Aggregator That Actually Works

Everyone’s talking about AI like it’s a magic box. Ask a question, get an answer, call it a day. But if you want to build something that actually runs without breaking, scales without drama, and doesn’t cost more than your rent, you need more than prompts. You need architecture.

Here’s what I built.

I run a fully autonomous AI-driven news aggregator. Every day, it pulls in 150–175 articles from Google and various RSS feeds. It scrapes the full pages, falling back to Node.js and Puppeteer when things get weird. Then it deduplicates them in two stages: fast local similarity filtering using Levenshtein distance in Node.js, followed by semantic deduplication using GPT-4o-mini to catch paraphrased overlap and fluff.
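To make the first dedup stage concrete, here is a minimal sketch of Levenshtein-based filtering. It is written in Python for brevity, while the pipeline itself does this step in Node.js. Comparing titles (rather than full text), the 0.85 threshold, and every function name are assumptions for illustration, not the production values.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance via dynamic programming, keeping only two rows."""
    if len(a) < len(b):
        a, b = b, a  # keep the inner row as short as possible
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # deletion
                current[j - 1] + 1,      # insertion
                previous[j - 1] + cost,  # substitution
            ))
        previous = current
    return previous[-1]


def similarity(a: str, b: str) -> float:
    """Scale edit distance to 0.0 (nothing shared) .. 1.0 (identical)."""
    longest = max(len(a), len(b))
    if longest == 0:
        return 1.0
    return 1.0 - levenshtein(a, b) / longest


def normalize(title: str) -> str:
    """Lowercase and collapse whitespace so trivial differences don't count."""
    return " ".join(title.lower().split())


def drop_near_duplicates(titles: list[str], threshold: float = 0.85) -> list[str]:
    """Keep the first of each group of near-identical titles."""
    kept: list[str] = []
    for title in titles:
        candidate = normalize(title)
        if all(similarity(candidate, normalize(k)) < threshold for k in kept):
            kept.append(title)
    return kept
```

This is quadratic in the number of articles, which is fine at a couple hundred items a day. Anything it lets through that is a paraphrase rather than a near-copy is what the GPT-4o-mini stage is for.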

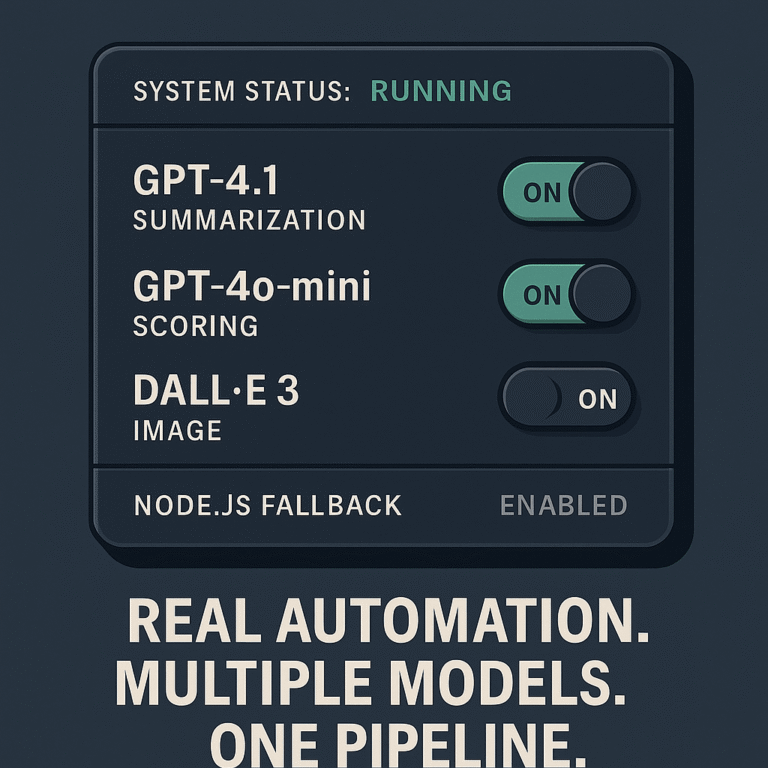

Each article is summarized using GPT-4.1, because that model gives the cleanest, most coherent extracts, even if it’s a bit pricier. Then, each summary is scored for importance using GPT-4o: cheaper, faster, and more than good enough for classification tasks.
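One way to keep "which model does which job" explicit is a small routing table instead of model names scattered through the code. The sketch below is an illustration under assumptions: the identifier strings are assumed API names for those models, `call_model` is a hypothetical function you would back with your own API client, and the 0–100 scale and prompts are made up for the example.

```python
import re
from dataclasses import dataclass
from typing import Callable

# Task -> model, as described above. Identifier strings are assumed API names.
MODEL_FOR_TASK = {
    "summarize": "gpt-4.1",  # pricier, cleanest summaries
    "score": "gpt-4o",       # good enough for classification
}

# Hypothetical signature: (model_name, prompt) -> response text.
ModelCall = Callable[[str, str], str]


@dataclass
class ScoredSummary:
    summary: str
    importance: int  # assumed 0-100 scale


def parse_score(raw: str) -> int:
    """Pull the first integer out of a reply and clamp it to 0-100."""
    match = re.search(r"\d+", raw)
    if match is None:
        raise ValueError(f"no score found in model reply: {raw!r}")
    return max(0, min(100, int(match.group())))


def summarize_and_score(article_text: str, call_model: ModelCall) -> ScoredSummary:
    """Summarize with one model, then score the summary with another."""
    summary = call_model(
        MODEL_FOR_TASK["summarize"],
        "Summarize this article:\n\n" + article_text,
    )
    raw_score = call_model(
        MODEL_FOR_TASK["score"],
        "Rate the importance of this summary from 0 to 100. "
        "Reply with the number only.\n\n" + summary,
    )
    return ScoredSummary(summary=summary, importance=parse_score(raw_score))
```

Passing `call_model` in as a parameter keeps the routing logic testable with a fake, and swapping a model later becomes a one-line change in the table.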

Only articles that score above a set threshold make the cut. Those get pushed to WordPress with a symbolic image generated by DALL·E 3, themed to the content. Every component writes to a central MariaDB database, which acts as the system’s source of truth. Each stage moves data forward in a controlled, restartable flow – with full fallback handling and zero need to rerun the whole thing when something fails.
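The restartable part is mostly a database pattern: each article carries a stage marker, and a stage only picks up rows that are sitting at its input stage. Here is a minimal sketch of that idea. It uses an in-memory SQLite database as a stand-in for MariaDB so it runs anywhere, and the table layout, stage names, and `run_stage` helper are hypothetical, not the actual schema.

```python
import sqlite3
from typing import Callable


def open_db() -> sqlite3.Connection:
    """In-memory SQLite as a stand-in for the central MariaDB database."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        """CREATE TABLE articles (
               id INTEGER PRIMARY KEY,
               url TEXT NOT NULL UNIQUE,
               stage TEXT NOT NULL DEFAULT 'fetched',
               last_error TEXT
           )"""
    )
    return conn


def run_stage(
    conn: sqlite3.Connection,
    from_stage: str,
    to_stage: str,
    work: Callable[[str], None],
) -> int:
    """Advance every article at from_stage; failures stay put for a retry."""
    rows = conn.execute(
        "SELECT id, url FROM articles WHERE stage = ? ORDER BY id",
        (from_stage,),
    ).fetchall()
    advanced = 0
    for article_id, url in rows:
        try:
            work(url)
        except Exception as exc:
            # Record the error but leave the stage alone, so the next run
            # of this stage picks the article up again.
            conn.execute(
                "UPDATE articles SET last_error = ? WHERE id = ?",
                (str(exc), article_id),
            )
        else:
            conn.execute(
                "UPDATE articles SET stage = ?, last_error = NULL WHERE id = ?",
                (to_stage, article_id),
            )
            advanced += 1
        conn.commit()  # commit per article so a crash loses at most one item
    return advanced
```

Because progress lives in the database rather than in process memory, rerunning a stage after a failure only touches the rows that did not make it through the first time.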

This isn’t a bunch of prompts duct-taped together. It’s a layered, resilient automation system, and every layer uses the right tool for the job.

Not the cheapest model.

Not the most hyped model.

The one that fits the task.

Models used as of May 2025:

Summarization needs precision: Use GPT-4.1

Semantic deduplication needs to catch paraphrases: Use GPT-4o-mini

Scoring needs speed: Use GPT-4o

Images need visual flair: Use DALL·E 3

Scraping needs fallback: Use code, not hope.
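"Code, not hope" for scraping can be sketched as an ordered chain: try the cheap scraper first, check that what came back actually looks like an article, and only then escalate to the heavier option. The example below is a hedged illustration in Python. The scrapers are hypothetical callables you would supply (in this pipeline the heavy fallback is Puppeteer on Node.js), and the 200-character minimum is an arbitrary assumption.

```python
from typing import Callable, Sequence

# Hypothetical scraper signature: url -> extracted article text.
Scraper = Callable[[str], str]


def scrape_with_fallback(
    url: str,
    scrapers: Sequence[tuple[str, Scraper]],
    min_chars: int = 200,
) -> tuple[str, str]:
    """Try each (name, scraper) in order; return the first usable result.

    A result counts as usable only if it has at least min_chars of text,
    which catches empty shells like "Please enable JavaScript" pages.
    """
    errors: list[str] = []
    for name, scraper in scrapers:
        try:
            text = scraper(url)
        except Exception as exc:
            errors.append(f"{name}: {exc}")
            continue
        stripped = text.strip()
        if len(stripped) >= min_chars:
            return name, stripped
        errors.append(f"{name}: only {len(stripped)} chars")
    raise RuntimeError(f"all scrapers failed for {url}: " + "; ".join(errors))
```

Returning the name of the scraper that succeeded makes it easy to log how often the fallback fires, which tells you when a source has changed its markup.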

That’s the difference between playing with AI and actually engineering it.

The whole thing costs around $20–25/month to run. It publishes 10–20 articles a day, entirely unsupervised. If something breaks, I can restart at any step with zero data loss.
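Those numbers are easier to judge per article. A quick back-of-the-envelope calculation, assuming a 30-day month and that the monthly figure covers the whole pipeline:

```python
DAYS_PER_MONTH = 30  # assumption for the estimate


def cost_per_item(monthly_cost_usd: float, items_per_day: float) -> float:
    """Average cost in USD of one item, given a monthly bill and daily volume."""
    return monthly_cost_usd / (items_per_day * DAYS_PER_MONTH)


# Per published article: cheapest case is $20 over 20/day, priciest $25 over 10/day.
published_low = cost_per_item(20, 20)
published_high = cost_per_item(25, 10)

# Per ingested article: $20 over 175/day up to $25 over 150/day.
ingested_low = cost_per_item(20, 175)
ingested_high = cost_per_item(25, 150)

print(f"per published article: ${published_low:.3f} to ${published_high:.3f}")
print(f"per ingested article:  ${ingested_low:.4f} to ${ingested_high:.4f}")
```

That works out to roughly 3 to 8 cents per published article, or about half a cent per article ingested.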

So next time someone shows off their “AI pipeline,” ask them this:

- Are you using the right model, or just the cheapest one?

- Can your system recover from failure without a human?

- Is it a toy, or is it an actual layer in your stack?

AI isn’t magic. It’s tools, tuned for purpose, arranged with intent.