NVIDIA researchers have introduced nGPT, a normalized Transformer that performs representation learning on a hypersphere. The architecture consolidates several earlier findings about normalization in Transformers into a single framework, and the authors report that it learns substantially faster than a standard Transformer baseline.

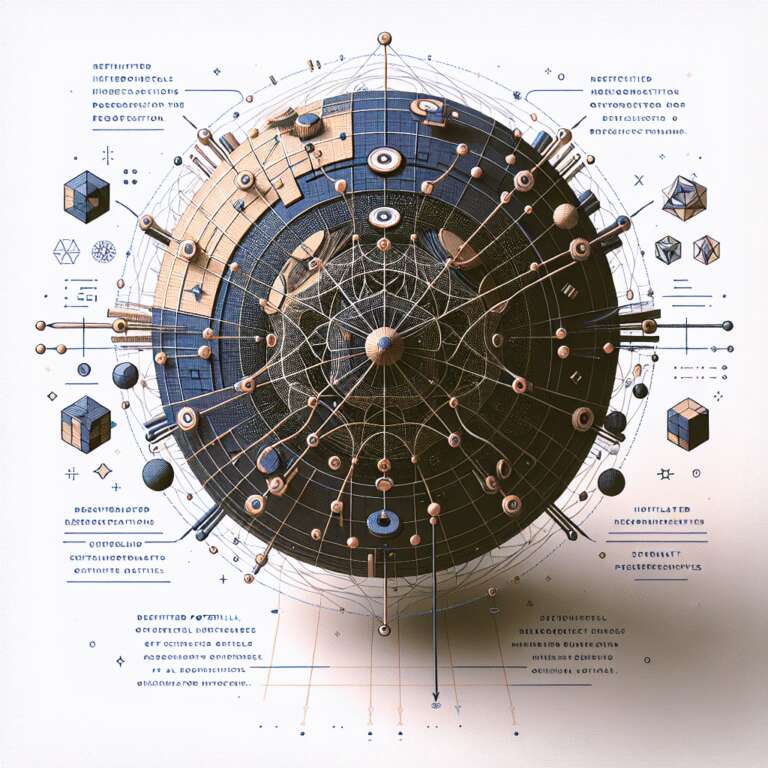

The key innovation of nGPT is its hypersphere-based normalization: every vector that forms the embeddings, the MLP and attention matrices, and the hidden states is normalized to unit length, so all of them lie on the same unit hypersphere. With both operands normalized, each matrix-vector multiplication reduces to a set of dot products bounded in [-1, 1], which can be read as cosine similarities. Because the norms are fixed by construction, common practices like weight decay are no longer needed, and training becomes more stable. The framework also introduces learnable scaling factors that control the effect of normalization, and it interprets the Transformer as a variable-metric optimizer operating on the hypersphere.
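The cosine-similarity reading of a matrix-vector product can be checked with a few lines of plain Python. This is a minimal sketch, not NVIDIA's implementation: the helper names (`l2_normalize`, `normalized_matvec`) and the toy weights are assumptions chosen for illustration.

```python
import math

def l2_normalize(v):
    """Scale a vector to unit Euclidean norm, placing it on the unit hypersphere."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        raise ValueError("cannot normalize the zero vector")
    return [x / norm for x in v]

def dot(a, b):
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def cosine_similarity(a, b):
    """Reference definition: dot product divided by the product of the norms."""
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))

def normalized_matvec(rows, h):
    """Matrix-vector product after normalizing every matrix row and the input."""
    h_unit = l2_normalize(h)
    return [dot(l2_normalize(row), h_unit) for row in rows]

# Hypothetical toy weights and hidden state, chosen only for illustration.
weights = [[3.0, 4.0], [1.0, 0.0], [-2.0, 2.0]]
hidden = [6.0, 8.0]
outputs = normalized_matvec(weights, hidden)

for row, out in zip(weights, outputs):
    # Each output matches the cosine similarity between the row and the input.
    print(row, round(out, 4), round(cosine_similarity(row, hidden), 4))
```

Every output stays within [-1, 1] regardless of how large the raw weights are. That bounded range is why a normalized model needs separate scaling factors to recover a useful output range.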

Notably, nGPT reduces the number of training steps needed to reach the same accuracy by a factor of 4 to 20, depending on the sequence length. The architecture pairs normalization with learnable eigen learning rates, which control how far each attention and MLP block moves the hidden state along the hypersphere. The result underscores NVIDIA’s continuing influence in Artificial Intelligence research on machine learning architectures.
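The role of the eigen learning rates can be sketched as a single hidden-state update. The example assumes an update of the form h ← Norm(h + α ⊙ (h_block − h)), where h_block is the normalized output of an attention or MLP block and α holds one learnable step size per dimension. The function name `hypersphere_step` and all values are illustrative and not taken from NVIDIA's code.

```python
import math

def l2_normalize(v):
    """Scale a vector to unit Euclidean norm."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        raise ValueError("cannot normalize the zero vector")
    return [x / norm for x in v]

def hypersphere_step(h, block_output, alpha):
    """Move h toward the normalized block output, then project back to unit norm.

    alpha holds one step size per dimension (the assumed "eigen learning rates").
    A value of 0 leaves that coordinate unchanged before re-normalization, and
    a value of 1 replaces it with the block's suggestion.
    """
    target = l2_normalize(block_output)
    moved = [hi + ai * (ti - hi) for hi, ti, ai in zip(h, target, alpha)]
    return l2_normalize(moved)

# Hypothetical values: the hidden state starts on one axis and the block
# suggests an orthogonal direction.
h = l2_normalize([1.0, 0.0, 0.0])
suggestion = [0.0, 2.0, 0.0]
alpha = [0.5, 0.5, 0.5]

h_next = hypersphere_step(h, suggestion, alpha)
print(h_next)
```

Because the result is re-normalized after every step, the hidden state never leaves the hypersphere. In a real model the entries of α would be trained parameters rather than fixed constants.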