Mamba is a sequence-modeling architecture that has emerged as a significant alternative to the Transformer models that currently dominate the field. Built on State Space Models (SSMs), Mamba addresses a central weakness of Transformers: their inefficiency on very long sequences. According to its authors, the model matches Transformer performance while its compute cost scales linearly with sequence length.

Unlike standard Transformers, whose attention mechanism has a cost that grows quadratically with sequence length, Mamba uses a recurrent state space formulation that avoids this bottleneck. The authors report up to five times higher inference throughput than Transformers of similar size. They also report strong results across several modalities, including language, audio, and genomics, often outperforming Transformers of equivalent size.
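To make the scaling difference concrete, here is a rough back-of-the-envelope sketch. It only counts how many token-level interactions each approach performs; it ignores hidden dimensions, constant factors, and hardware effects, so it says nothing about real wall-clock speed. The function names are illustrative and not taken from any library.

```python
def attention_pair_count(seq_len: int) -> int:
    """Entries in the full query-key score matrix: one per token pair."""
    return seq_len * seq_len


def recurrent_step_count(seq_len: int) -> int:
    """State updates in a recurrent scan: one per token."""
    return seq_len


def interaction_ratio(seq_len: int) -> float:
    """How many times more pairwise scores than recurrent steps."""
    return attention_pair_count(seq_len) / recurrent_step_count(seq_len)


if __name__ == "__main__":
    for n in (1_000, 10_000, 100_000):
        print(n, attention_pair_count(n), recurrent_step_count(n))
```

Under this simplified count, the gap between the two approaches grows in proportion to the sequence length itself, which is why the difference matters most for very long inputs.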

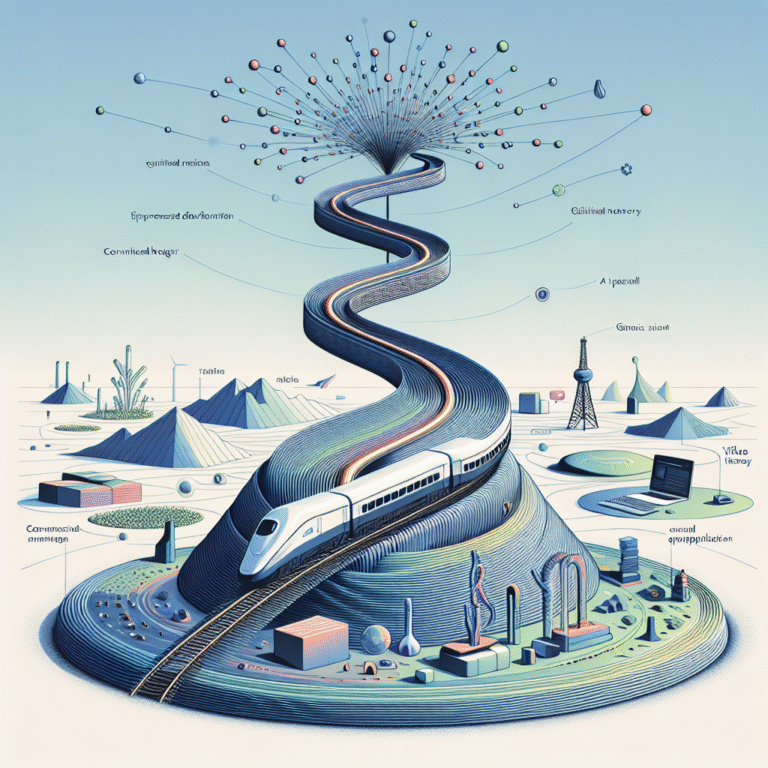

Mamba's architecture introduces a selection mechanism: the parameters of the state space model depend on the current input, so the model can decide, token by token, which information to keep in its compressed state and which to discard. Because that state has a fixed size regardless of sequence length, the model is well suited to settings where memory and compute are constrained. This could also open up new applications in areas that involve very long sequences, such as genomics or video analysis.
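The idea of an input-dependent recurrence can be sketched in a few lines of plain Python. This is a heavily simplified, single-channel illustration and not the authors' implementation: the weights `w_b`, `w_c`, and `w_delta` are hypothetical stand-ins for learned projections, the input is a scalar per step, and the loop is sequential rather than the hardware-aware parallel scan used in practice.

```python
import math


def softplus(z: float) -> float:
    """Numerically stable log(1 + exp(z)); keeps the step size positive."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def selective_scan(xs, a, w_b, w_c, w_delta, b_delta=0.0):
    """Run a toy selective SSM over a scalar sequence.

    xs      : input sequence (list of floats)
    a       : per-state decay rates, assumed negative (hypothetical values)
    w_b/w_c : hypothetical weights making B_t and C_t depend on the input
    w_delta : hypothetical weight making the step size depend on the input
    """
    h = [0.0] * len(a)          # fixed-size state, independent of len(xs)
    ys = []
    for x in xs:
        delta = softplus(w_delta * x + b_delta)    # input-dependent step size
        y = 0.0
        for i in range(len(a)):
            a_bar = math.exp(delta * a[i])         # in (0, 1]: how much to keep
            b_bar = delta * (w_b[i] * x)           # how strongly to write x
            h[i] = a_bar * h[i] + b_bar * x        # state update
            y += (w_c[i] * x) * h[i]               # input-dependent readout
        ys.append(y)
    return ys


if __name__ == "__main__":
    print(selective_scan([1.0, 0.5, -0.2], [-1.0, -2.0],
                         [1.0, 0.5], [1.0, 1.0], w_delta=1.0))
```

In this sketch, a larger step size makes the state decay faster and weights the current input more heavily, while a step size near zero leaves the state almost unchanged. The work per token is constant, so the total cost grows linearly with the length of `xs`.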

Mamba's architecture points toward a shift away from a purely Transformer-centric approach and toward a more balanced ecosystem of models that handle both short and long sequences efficiently, which could change how AI is applied across disciplines.