A group revising company policies over the past six months found a common barrier to adoption: stigma. When a company with tens of thousands of software engineers introduced an AI-powered tool, uptake lagged well below 50% because colleagues perceived users as less skilled, even when output quality was identical. The author draws on research and an internal working group to show that this problem is widespread and not primarily technical.

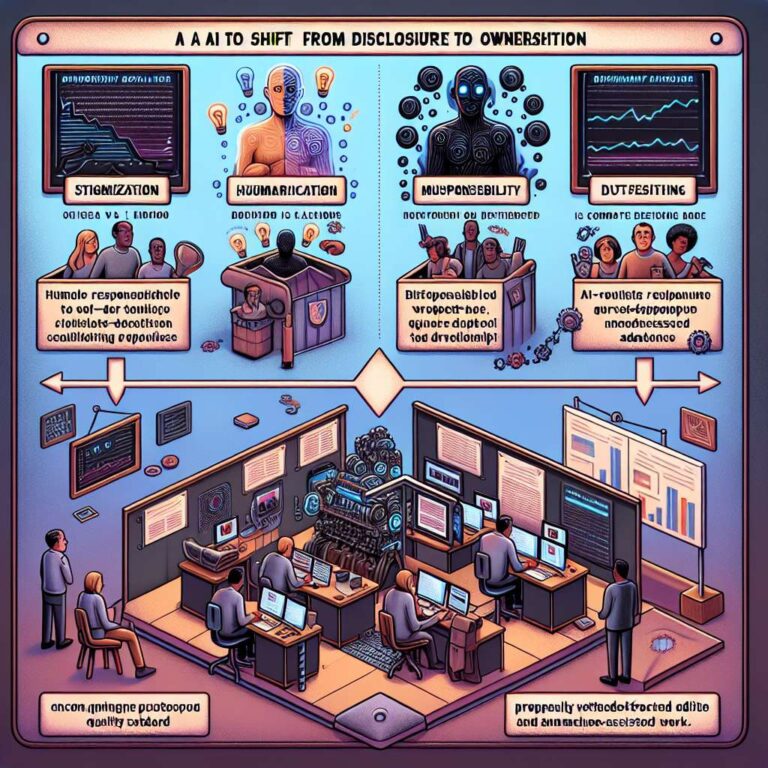

The article separates contexts where mistrust of generative tools is appropriate from business contexts where it is not. In education and some artistic settings, concerns about cheating or creative authenticity matter. In business, success is judged by results: accuracy, coherence, and effectiveness. Yet public debates about disclosure have led many organizations to mandate that people label their AI use. The studies cited include a company experiment in which reviewers downgraded work labeled as machine-assisted, and a meta-analysis of 13 experiments that identified a consistent loss of trust when workers disclose their use. Those disclosure mandates create a chilling effect and divert attention from output quality.

The proposed alternative is an ownership imperative: treat AI like any other powerful tool and insist that humans take full responsibility for outputs. Mistakes, inaccuracies, or plagiarism remain the human user’s responsibility. The article gives a concrete example of failure: a large consulting company submitted an error-ridden AI-generated report to the Australian government and suffered reputational damage. Four practical steps are offered: (1) replace disclosure requirements with ownership confirmation that a human stands behind the content; (2) establish output-focused quality standards and verification workflows; (3) normalize use through success stories rather than punishment; and (4) train employees for ownership with fact-checking and editing skills. Companies that stop asking “Did you use AI?” and start asking “Is this excellent?” will be better positioned to capture value from the technology.