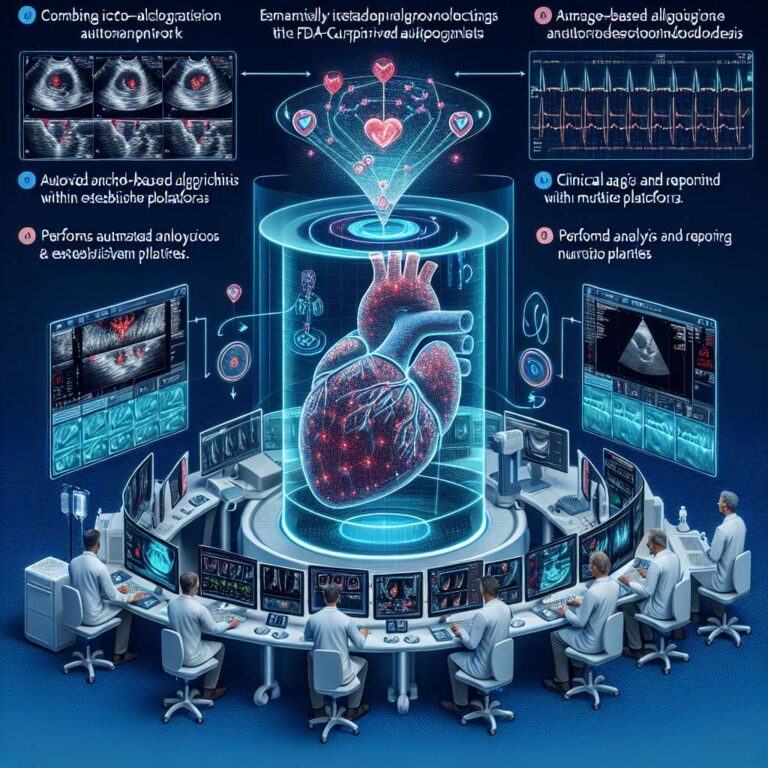

Us2.ai’s news feed documents a series of recent research presentations, partnerships, and certifications that advance the application of artificial intelligence (AI) in echocardiography. The company announced a partnership with Merge Cardio to integrate Us2.ai’s FDA-cleared algorithms into Merge’s imaging systems, enabling AI-generated analysis and reporting of echocardiograms. Us2.ai also joined Harrison.ai’s medical imaging open platform, which offers a single-integration pathway to multiple vendors with a zero-markup approach. Separately, Us2.ai completed ISO/IEC 27001:2022 certification.

Multiple studies presented at major cardiology conferences evaluated Us2.ai technology in clinical settings. At the American Heart Association Scientific Sessions 2025, researchers from Juntendo University presented AI-SCREEN-CA, a study evaluating whether Us2.ai’s automated echocardiography analysis can detect previously unrecognized cardiac amyloidosis in an unselected clinical population. At ASE 2025, a multicenter external validation study assessed Us2.ai’s fully automated pattern recognition echocardiography approach and found it could accurately identify cardiac amyloidosis using a single apical four-chamber view. Other ASE 2025 reports described AI models under development for hypertrophic cardiomyopathy detection and for aortic stenosis detection and classification, as well as the feasibility of a handheld echocardiography device equipped with Us2.ai software for preoperative assessment.

The company also highlighted multimodal work presented at ESC Congress 2025 that combines image-based pattern recognition with clinical and laboratory data to improve diagnostic accuracy for cardiac amyloidosis. In that study, Us2.ai’s pattern recognition model applied to a single apical four-chamber view (referred to as AI-PRM in the study) was integrated with a clinical and laboratory data model (AI-ECM in the study) to overcome limitations of single-modality approaches. Additional community-focused work presented at ASE described a point-of-care screening pathway that uses AI-assisted interpretation and a 14-image protocol, aimed at improving access to structural heart disease care in American Indian communities.