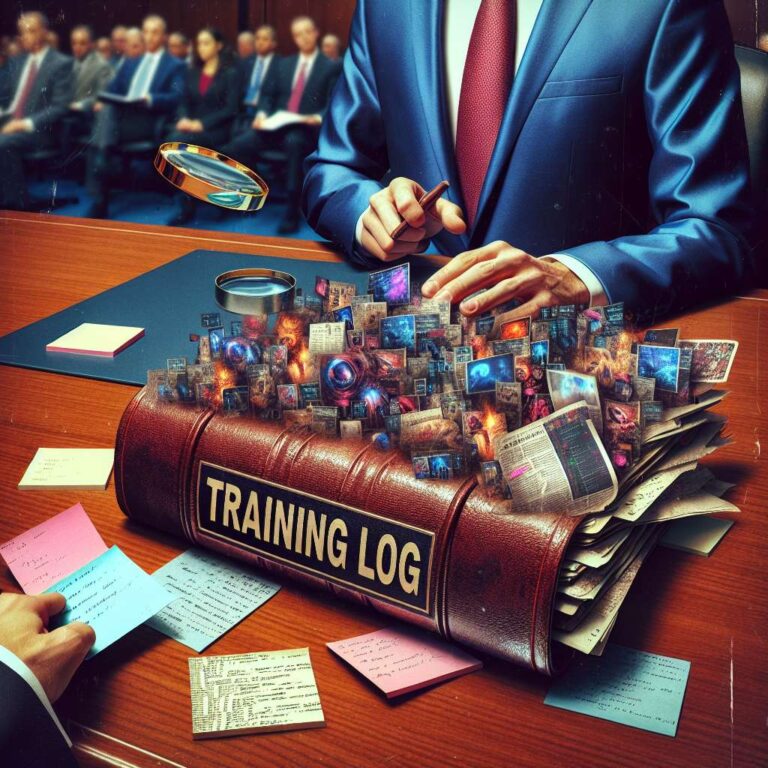

Representatives Madeleine Dean, a Democrat from Pennsylvania, and Nathaniel Moran, a Republican from Texas, have introduced a bipartisan bill in the US House of Representatives called the Transparency and Responsibility for Artificial Intelligence Networks (TRAIN) Act. The measure targets transparency in generative artificial intelligence training practices, signaling heightened legislative attention to how foundation models and related systems are created and maintained. By focusing specifically on training, the proposal addresses a critical phase in the development of generative artificial intelligence tools, one in which decisions about data selection, labeling and governance can have far-reaching consequences.

The legislative objective of the TRAIN Act is to promote clearer disclosure and accountability around the methods and data used to train generative artificial intelligence models. Although the available text does not set out detailed provisions, the focus on transparency and responsibility indicates that policymakers are scrutinizing issues such as the provenance of training data, the potential inclusion of copyrighted or sensitive information, and the ways in which developers document and explain their training pipelines. The bipartisan sponsorship shows that concern over generative artificial intelligence training practices cuts across party lines and is emerging as a shared priority in technology policy.

By introducing the TRAIN Act in the House of Representatives, lawmakers are positioning transparency in generative artificial intelligence training as a core element of emerging regulatory frameworks for advanced computational systems. The proposal underscores expectations that organizations developing generative artificial intelligence will provide more information about their training processes to regulators, business customers and potentially the public. It also suggests that future compliance obligations for artificial intelligence developers may extend beyond model outputs to include how models are built, trained and updated over time, reflecting a broader shift toward lifecycle oversight of artificial intelligence technologies.