The Guardian-AWS article on agentic artificial intelligence presented autonomy as the next frontier, promising automated workflows, reduced human error, and sector efficiencies. It proposed a familiar triad of mitigations: security-first design with zero-trust architectures and real-time monitoring, human oversight through human-in-the-loop and human-on-the-loop frameworks, and transparency-by-design that explains limitations, data pathways, and decision boundaries. The piece framed good governance as a competitive advantage for organisations that balance innovation with responsibility.

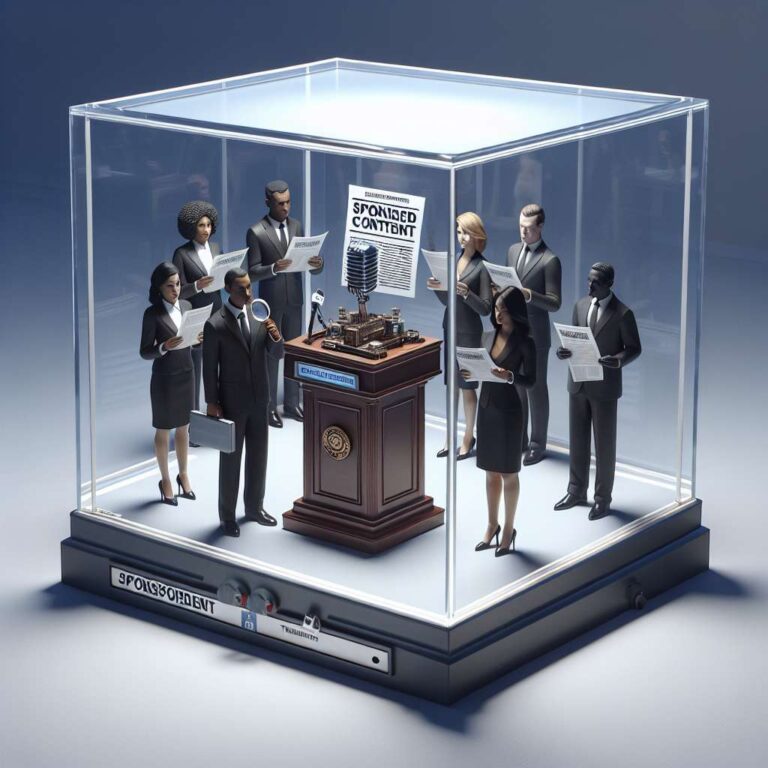

What followed was a rapid and hostile public reaction. Readers, encountering branded content paid for by a major cloud provider, labelled the piece advertorial, propaganda, or even “gaslighting.” The response exposed a credibility gap: when the same corporations that develop and profit from artificial intelligence also fund narratives about its safety, assurances are read as image management rather than independent accountability. Critics also challenged the language of “agentic” artificial intelligence, arguing it misleadingly implies intention; the article notes that today’s large language models (LLMs) and automated systems operate within pre-defined scaffolds, and the illusion of autonomy undermines trust when overstated.

The controversy surfaces practical lessons for regulators and practitioners, especially in health, social care, and education. First, governance must be independent rather than performative, with external scrutiny, ethical oversight, and regulatory alignment. Second, transparency must include candid limits, failure modes, and who remains accountable when systems err. Third, human-centred design is non-negotiable: meaningful human oversight requires trained people with authority to review and reverse decisions. The blog draws a parallel with CQC-style accountability and highlights ComplyPlus as an example of embedding traceable audit trails and human verification in compliance systems.
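
To make the third lesson concrete, the following is a minimal Python sketch of what a traceable audit trail combined with human verification might look like. It is illustrative only and does not describe how ComplyPlus or any other product is implemented. All names here (`AuditTrail`, `Decision`, `propose`, `review`, and the status strings) are hypothetical. The sketch assumes that a system may only propose an outcome, that nothing takes effect until a named reviewer approves or reverses it, and that each log entry carries a hash of the previous one so that later edits can be detected.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Optional


def _digest(body: dict) -> str:
    # Stable SHA-256 over the entry body (keys sorted for determinism).
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


@dataclass
class AuditTrail:
    """Hypothetical append-only log; each entry links to the previous hash."""
    entries: list = field(default_factory=list)

    def record(self, actor: str, action: str, detail: str) -> dict:
        prev = self.entries[-1]["hash"] if self.entries else ""
        body = {"actor": actor, "action": action, "detail": detail, "prev": prev}
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        # Recompute every hash and check the chain is unbroken.
        prev = ""
        for entry in self.entries:
            body = {k: entry[k] for k in ("actor", "action", "detail", "prev")}
            if entry["prev"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True


@dataclass
class Decision:
    subject: str
    proposed: str
    status: str = "pending_review"  # assumption: no effect until reviewed
    final: Optional[str] = None


def propose(trail: AuditTrail, subject: str, outcome: str) -> Decision:
    # The automated system can only propose; the proposal is logged.
    trail.record("system", "proposed", f"{subject}: {outcome}")
    return Decision(subject, outcome)


def review(trail: AuditTrail, decision: Decision, reviewer: str,
           approve: bool, override: Optional[str] = None) -> Decision:
    # A named human either approves the proposal or reverses it.
    if approve:
        decision.status, decision.final = "approved", decision.proposed
    else:
        if override is None:
            raise ValueError("a reversal must state the replacement outcome")
        decision.status, decision.final = "reversed", override
    trail.record(reviewer, decision.status, f"{decision.subject}: {decision.final}")
    return decision
```

In this sketch the reviewer's identity and the final outcome are written to the same trail as the system's proposal, so the record shows both what the system suggested and who remained accountable for the result.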

The takeaway is clear. Trust in artificial intelligence will not be secured through sponsored narratives. It must be rebuilt through demonstrable, participatory, and accountable governance that aligns technology with organisational values and lived experience. Until organisations honestly confront the contradiction of funding reassurances about systems they build and profit from, public scepticism will persist and artificial intelligence will be seen as a system of control rather than a tool for improving services.