The National Cyber Security Centre (NCSC) has warned that rising Artificial Intelligence prompt injection attacks represent a new, stealthy class of manipulation that targets large language models (LLMs). These attacks use ordinary-looking text to influence model outputs and can bypass assumptions that separate code from data in traditional systems. The warning signals that security teams must rethink architectures, controls and monitoring when LLMs are part of critical systems.

A prompt injection occurs when untrusted user input is combined with developer-provided instructions in a large language model (LLM) prompt. It allows attackers to embed hidden commands within normal content and manipulate the model’s behavior. Unlike traditional vulnerabilities, where code and data are clearly separated, LLMs process all text as part of the same sequence, which makes every input potentially influential and creates unique security challenges. The NCSC explained the distinction concisely: “Under the hood of an LLM, there’s no distinction made between ‘data’ or ‘instructions’; there is only ever ‘next token’. When you provide an LLM prompt, it doesn’t understand the text it in the way a person does. It is simply predicting the most likely next token from the text so far. As there is no inherent distinction between ‘data’ and ‘instruction’, it’s very possible that prompt injection attacks may never be totally mitigated in the way that SQL injection attacks can be.”

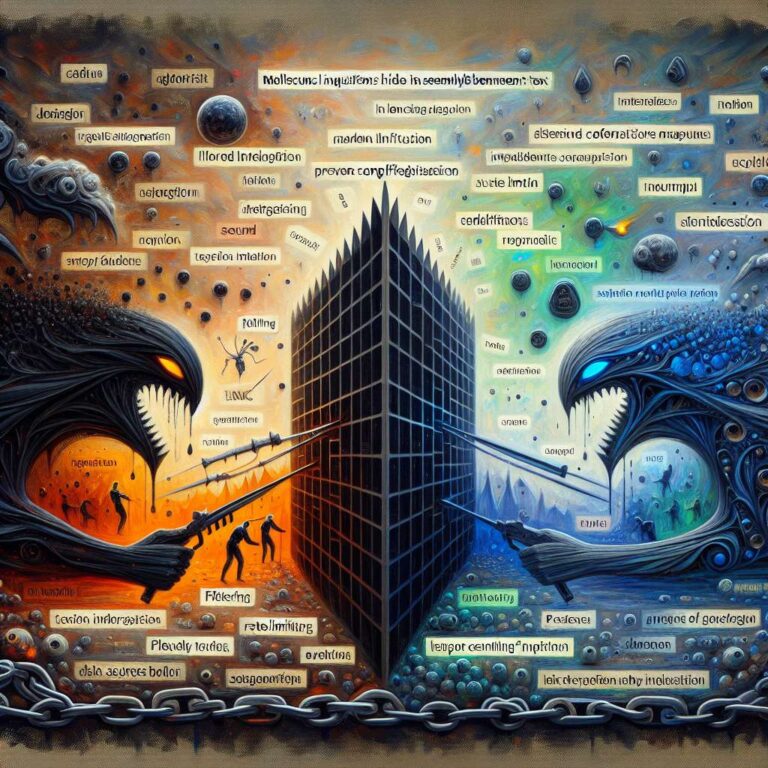

Prompt injection differs from classical injection attacks such as SQL injection because LLMs treat all text uniformly, so attackers can hide harmful instructions inside seemingly benign content. That capability can lead models to produce misleading or dangerous outputs, undermining data integrity, system security and user trust. As organizations adopt more Artificial Intelligence tools, understanding these new vectors has become a top priority for defenders.

The article outlines practical mitigation strategies. Organizations should design secure architectures that separate trusted instructions from untrusted inputs, filter or restrict user-generated content, and embed inputs within clearly tagged or bounded prompt segments. Ongoing monitoring, logging of model inputs and outputs, tracking of external API calls and model-triggered actions, proactive red teaming and formal security reviews at each stage of development are recommended to detect anomalies early and reduce risk in enterprise environments.