The article explores practical uses of Model Context Protocol (MCP) servers from the perspective of a technical founder of Sliplane, a Docker hosting platform. Many MCP server demos showcase gimmicks such as sending WhatsApp messages. The author instead finds their real value in productivity, faster customer support, and streamlined content creation, all without leaving the terminal.

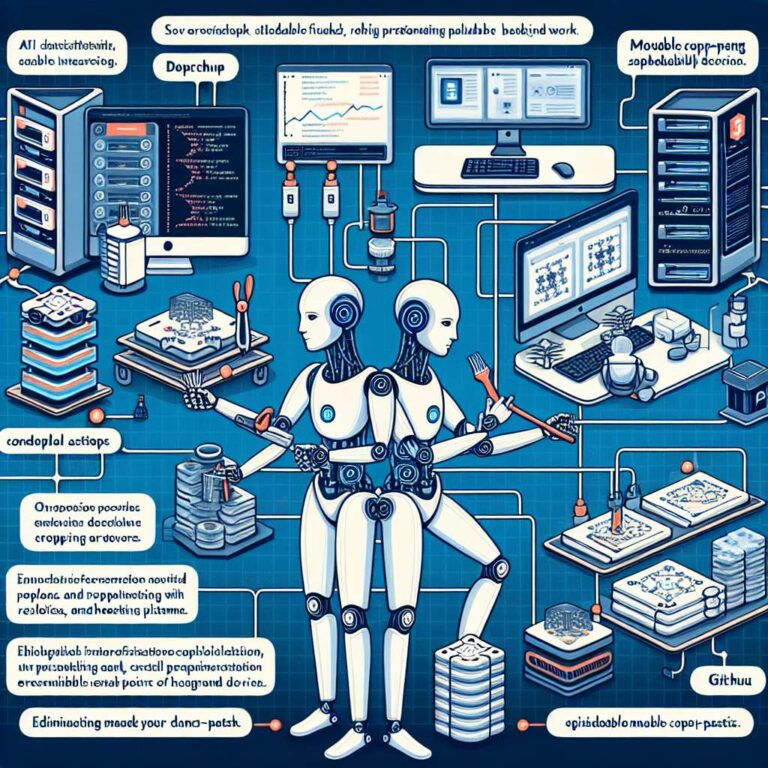

MCP, an open protocol developed by Anthropic, lets AI assistants such as Claude interact directly with APIs, external tools, and data sources. Instead of having the user copy information back and forth by hand, Claude can use connected MCP servers to query Docker Hub documentation, scan GitHub issues, deploy containers, and even draft blog posts. The author relies on four MCP server integrations:

- **Docker Hub**, for retrieving image documentation and troubleshooting environment variables.
- **GitHub**, for digging into source code, open issues, and undocumented flags.
- **Sliplane**, for automating deployment debugging, log analysis, and iterative testing.
- **Dev.to**, for quickly creating blog drafts from Markdown.

Each tool addresses a real workflow bottleneck, from speeding up customer support to turning solved issues into tutorials that drive growth.
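To make "interacting directly with tools" concrete: MCP messages are JSON-RPC 2.0, and a client asks a server to run a tool with a `tools/call` request whose `params` carry the tool `name` and its `arguments`. The following is a toy, stdlib-only Python illustration of that exchange. It is not the official MCP SDK and not any of the servers the author uses. It skips the `initialize` handshake and the transport layer (stdio or HTTP). The tool `get_image_env_vars` and its canned data are hypothetical stand-ins for a Docker Hub lookup.

```python
import json
from typing import Any, Callable


# Hypothetical tool standing in for a Docker Hub lookup (not a real MCP server's tool).
def get_image_env_vars(image: str) -> str:
    # Canned data for illustration only; a real server would call an API here.
    known = {"postgres": "POSTGRES_PASSWORD, POSTGRES_USER, POSTGRES_DB"}
    return known.get(image, "unknown image")


# Registry mapping tool names to handler functions.
TOOLS: dict[str, Callable[..., str]] = {"get_image_env_vars": get_image_env_vars}


def make_tool_call(request_id: int, name: str, arguments: dict[str, Any]) -> str:
    """Serialize a JSON-RPC 2.0 `tools/call` request, as an MCP client would send it."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


def handle(raw: str) -> str:
    """Toy server-side dispatcher: parse the request, run the tool, wrap the result."""
    req = json.loads(raw)
    if req.get("method") != "tools/call":
        # Standard JSON-RPC error for an unsupported method.
        return json.dumps({"jsonrpc": "2.0", "id": req.get("id"),
                           "error": {"code": -32601, "message": "Method not found"}})
    params = req["params"]
    tool = TOOLS.get(params["name"])
    if tool is None:
        # Unknown tool names are reported as invalid params.
        return json.dumps({"jsonrpc": "2.0", "id": req["id"],
                           "error": {"code": -32602,
                                     "message": f"Unknown tool: {params['name']}"}})
    text = tool(**params.get("arguments", {}))
    # Tool results come back as a list of content items plus an isError flag.
    return json.dumps({"jsonrpc": "2.0", "id": req["id"],
                       "result": {"content": [{"type": "text", "text": text}],
                                  "isError": False}})


if __name__ == "__main__":
    request = make_tool_call(1, "get_image_env_vars", {"image": "postgres"})
    print(handle(request))
```

A real server would also answer `tools/list` so the assistant can discover each tool's name and input schema before calling it.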

The founder argues that the core benefit of MCP servers isn't raw speed, since debugging by hand can sometimes be quicker. The benefit is background automation and delegation: describing a problem by voice, handing routine diagnosis to Claude, and stepping back in only when progress requires intervention. Running this work in parallel turns Claude into something like a tireless junior developer, freeing the founder to focus on higher-value tasks. Setting hype and flashy features aside, the article makes a compelling case for MCP servers as essential productivity infrastructure for startup teams. They help technical leaders serve users, maintain infrastructure, and grow their brands with minimal friction.