Researchers Sen Zhang, Xiaoxiao He and colleagues at Rutgers University, together with Chaowei Tan of Qualcomm, present a new framework for translating American Sign Language captured in three dimensions into English text. Rather than analyzing conventional two-dimensional video, the work operates directly on 3D skeletal and hand-motion data to capture the spatial and gestural complexity of signing. The team calls the approach the Large Sign Language Model and demonstrates both direct translation to text and an instruction-guided mode in which prompts control the output.

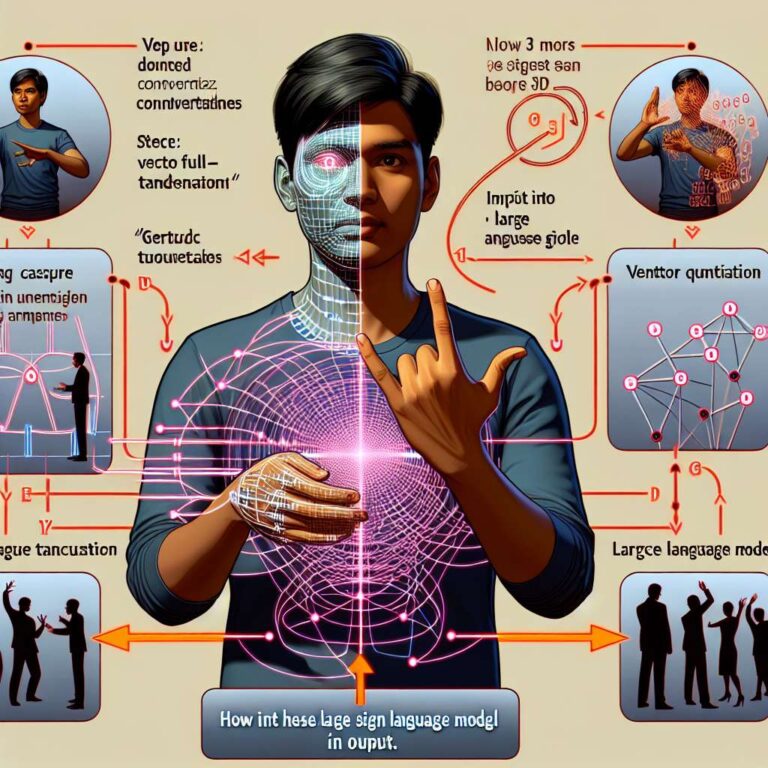

Technically, the system encodes continuous 3D motion with a vector-quantized variational autoencoder (VQ-VAE), which converts gestures into a sequence of discrete tokens suitable as input to a pretrained large language model. The motion representation draws on recent 3D sign language datasets and the SMPL-X human body model, describing poses with 52 joints and including downsampled gesture features. Multilayer perceptrons (MLPs) then project the quantized gesture tokens into the language model's embedding space, allowing the model to learn correspondences between motion and text.
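The tokenization step can be illustrated with a minimal, dependency-free Python sketch. It is not the authors' implementation: the window-averaging `encode` function is a toy stand-in for a learned VQ-VAE encoder, and the window length, codebook size, embedding width and random weights are all hypothetical. What it shows are the two operations described above: a nearest-codebook lookup that turns continuous motion features into discrete token ids, and a two-layer MLP that maps each quantized code to a vector as wide as the language model's embeddings.

```python
import random

# Illustrative sizes only (assumptions, not values from the paper).
NUM_JOINTS = 52     # joint count mentioned in the article
COORDS = 3          # assumption: raw xyz per joint per frame
WINDOW = 4          # assumption: temporal downsampling factor of the toy encoder
CODEBOOK_SIZE = 8   # assumption: real codebooks are far larger
LLM_DIM = 16        # assumption: stand-in for the LLM embedding width

rng = random.Random(0)
LATENT_DIM = NUM_JOINTS * COORDS


def encode(frames):
    """Toy stand-in for a learned VQ-VAE encoder: average each window of frames."""
    latents = []
    for start in range(0, len(frames) - WINDOW + 1, WINDOW):
        window = frames[start:start + WINDOW]
        latents.append([sum(col) / WINDOW for col in zip(*window)])
    return latents


def quantize(latents, codebook):
    """Replace each latent vector with the index of its nearest codebook entry."""
    def dist2(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return [min(range(len(codebook)), key=lambda k: dist2(z, codebook[k])) for z in latents]


def linear(x, weights, bias):
    """Dense layer: one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def mlp_project(vec, w1, b1, w2, b2):
    """Two-layer MLP with ReLU mapping a code vector into the LLM embedding space."""
    hidden = [max(0.0, h) for h in linear(vec, w1, b1)]
    return linear(hidden, w2, b2)


def rand_matrix(rows, cols):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


# Randomly initialised parameters; in a real system these are learned.
codebook = rand_matrix(CODEBOOK_SIZE, LATENT_DIM)
w1, b1 = rand_matrix(LLM_DIM, LATENT_DIM), [0.0] * LLM_DIM
w2, b2 = rand_matrix(LLM_DIM, LLM_DIM), [0.0] * LLM_DIM

# A fake 16-frame clip: each frame is 52 joints x 3 coordinates, flattened.
clip = [[rng.gauss(0.0, 0.1) for _ in range(LATENT_DIM)] for _ in range(16)]

token_ids = quantize(encode(clip), codebook)
embeddings = [mlp_project(codebook[t], w1, b1, w2, b2) for t in token_ids]
print(token_ids)           # four discrete gesture tokens, one per 4-frame window
print(len(embeddings[0]))  # 16, i.e. LLM_DIM
```

In a trained system the codebook is learned jointly with the encoder and decoder, and the projector is trained so that its outputs are meaningful to the language model; here every parameter is random, so only the shapes and the data flow are informative.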

The training pipeline has three stages: the sign language tokenizer is trained first, modality-alignment pretraining then aligns gesture tokens with textual representations, and instruction fine-tuning finally improves the model's ability to follow prompts and produce accurate English translations. The researchers pretrained and evaluated the alignment between the motion and language modalities on the large-scale SignAvatars dataset, which covers multiple sign languages, including American Sign Language and German Sign Language.
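The staged schedule can be written down as a small configuration plus a helper that formats training pairs for the two text-generation stages. This is a hypothetical sketch: the article gives the order of the stages but not which modules are updated in each, so the `trainable` fields are assumptions, and the `<mK>` placeholder tokens, the `build_example` helper and the prompt wording are illustrative names rather than the paper's actual format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    trainable: tuple  # assumption: the article does not say which modules are updated
    objective: str


# Stage order follows the article; module names and objectives are illustrative.
SCHEDULE = [
    Stage("tokenizer", ("vqvae",), "reconstruct 3D motion through the codebook"),
    Stage("alignment", ("projector",), "generate the English text from gesture tokens"),
    Stage("instruction", ("projector", "llm"), "follow a prompt and output the translation"),
]


def build_example(stage, token_ids, text, instruction=None):
    """Return a (prompt, target) pair for the two text-generation stages.

    Each hypothetical <mK> placeholder marks where the MLP-projected embedding
    of gesture token K would be spliced into the language model's input.
    """
    motion = " ".join(f"<m{t}>" for t in token_ids)
    if stage.name == "alignment":
        # Modality alignment: gesture tokens alone, supervised with the reference text.
        return motion, text
    if stage.name == "instruction":
        # Instruction tuning: a natural-language prompt precedes the gesture tokens.
        if instruction is None:
            raise ValueError("instruction fine-tuning needs a prompt")
        return f"{instruction}\n{motion}", text
    raise ValueError(f"stage {stage.name!r} does not train on (prompt, text) pairs")


for stage in SCHEDULE:
    print(f"{stage.name}: train {', '.join(stage.trainable)} to {stage.objective}")

print(build_example(SCHEDULE[1], [5, 2, 7], "hello"))
# ('<m5> <m2> <m7>', 'hello')
print(build_example(SCHEDULE[2], [5, 2, 7], "hello", "Translate the signing into English."))
```

One plausible motivation for training the tokenizer on its own first is that the discrete gesture vocabulary is then fixed before the language model sees it, so the later stages only have to learn how those tokens map to text.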

Results reported by the team indicate that combining 3D gesture features with a language model improves translation accuracy and robustness compared with approaches restricted to 2D video. The work also highlights instruction-guided translation as a flexible way to control outputs, and points to future directions such as expanding 3D sign datasets and adopting linguistically aware learning strategies to further improve accessibility for deaf and hard-of-hearing communities.