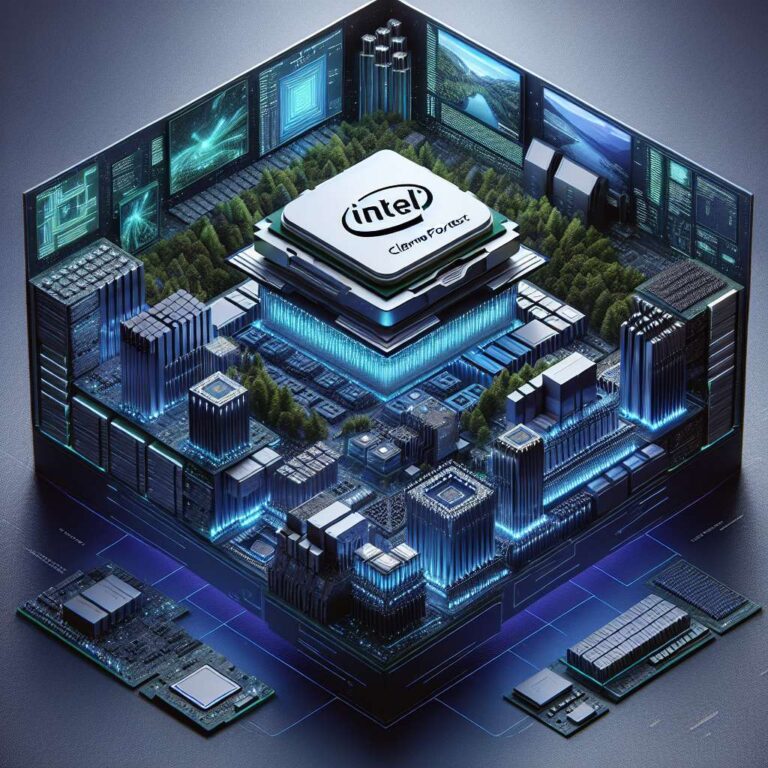

Intel used Hot Chips to present Clearwater Forest, its next-generation E-core Xeon, and positioned the design as a showcase for its foundry and packaging capabilities. The chip succeeds Sierra Forest and is built around the new Darkmont efficiency cores. Intel said Clearwater Forest will ship as a large chiplet package whose compute dies are built on the Intel 18A process, with Foveros Direct 3D stacking and EMIB used together to integrate the dies.

At the core level, Darkmont emphasizes a wider, more parallel front end and a stronger out-of-order engine. Intel disclosed a 64 KB instruction cache per core and described an expanded decoder that can handle more instructions per cycle than the one in the previous Crestmont E-core. The company also detailed larger reorder and allocation structures, more allocation units, and an enlarged out-of-order window that lets each core keep more instructions in flight.

Intel also substantially expanded the execution resources, adding many more ports for integer and vector operations to sustain higher parallel throughput. The company framed the combination of these architectural upgrades, the 18A process, and Foveros Direct 3D stacking paired with EMIB as a demonstration of what its foundry and packaging teams can now deliver. Intel did not disclose specific shipping timelines, power or performance figures, or final system-level configurations.