Demis Hassabis, CEO of Google DeepMind, called a high-profile artificial intelligence brag “embarrassing” after Sébastien Bubeck, a research scientist at OpenAI, claimed that two mathematicians had used OpenAI’s latest large language model, GPT-5, to find solutions to 10 unsolved problems in mathematics. The problems were presented as Erdős problems, part of a vast collection of puzzles left by 20th-century mathematician Paul Erdős and tracked by Thomas Bloom at the University of Manchester on erdosproblems.com, which lists more than 1,100 problems and notes that around 430 of them come with solutions. Bloom quickly responded on X that Bubeck’s description was “a dramatic misrepresentation.” He explained that a problem is not necessarily unsolved just because his website does not list a solution: there are millions of mathematics papers, and he has not read them all, though GPT-5 probably has.

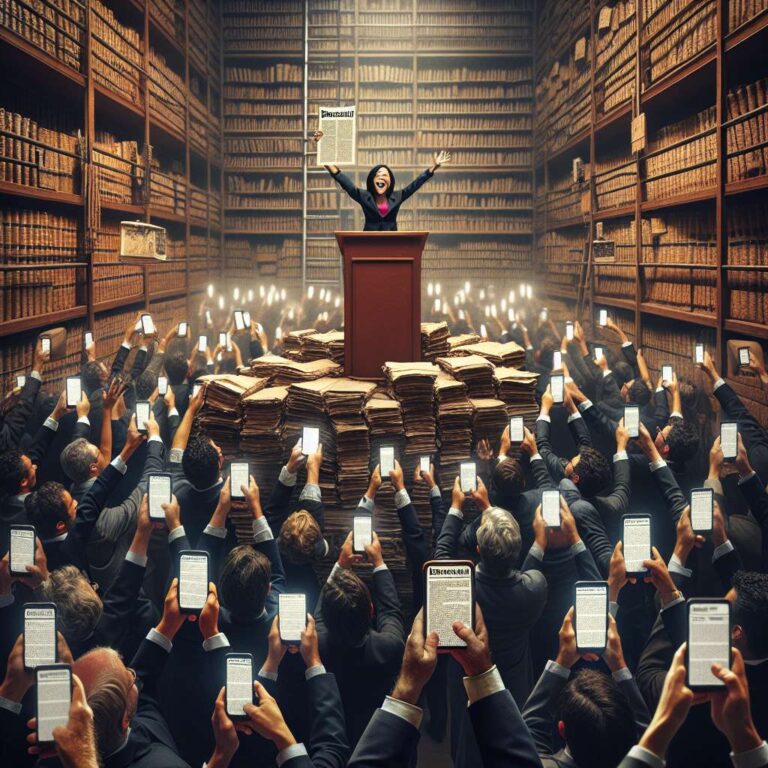

It later emerged that GPT-5 had not discovered new solutions to 10 unsolved Erdős problems but had instead located 10 existing solutions available online that Bloom had not previously seen. The episode illustrates two main points: first, that breathless claims about artificial intelligence breakthroughs announced on social media can race ahead of reality, and second, that GPT-5’s ability to perform large-scale literature search and surface overlooked work is impressive in its own right, even if less glamorous than discovery. François Charton, a research scientist at artificial intelligence startup Axiom Math, said mathematicians are indeed interested in using large language models to trawl through vast numbers of existing results, but he noted that social media prefers narratives of “genuine discovery” over incremental tools. Similar hype flared around claims that GPT-5 had solved Yu Tsumura’s 554th Problem after earlier work showed that no large language model could; Charton countered that this particular puzzle is something one might set for undergraduates and that “there is this tendency to overdo everything.”

More measured evaluations of large language models are emerging in fields that have been heavily promoted by model makers, such as medicine and law. Researchers found that large language models could make certain medical diagnoses but were flawed when recommending treatments. They also found that the models often give inconsistent and incorrect legal advice, concluding that “evidence thus far spectacularly fails to meet the burden of proof.” Yet such sober conclusions struggle for traction on X, where major artificial intelligence figures like Sam Altman, Yann LeCun, and Gary Marcus argue in public and “huge claims” gain more attention. The article notes that Bubeck’s mistake was visible only because it was caught, and warns that unchecked hype will persist as researchers, investors, and enthusiasts keep amplifying one another. A late-breaking coda describes how Axiom’s own model, AxiomProver, was reported to have solved two open Erdős problems (#124 and #481) and later nine out of 12 problems in this year’s Putnam competition. Those results drew praise from figures like Jeff Dean and Thomas Wolf and reignited debates over whether such contests measure knowledge or creative problem-solving. The piece closes by arguing that flashy competition wins should be only the beginning of a much deeper examination of what large language models are actually doing when they solve difficult, human-rated math problems, and that social media is a poor venue for judging their true capabilities.