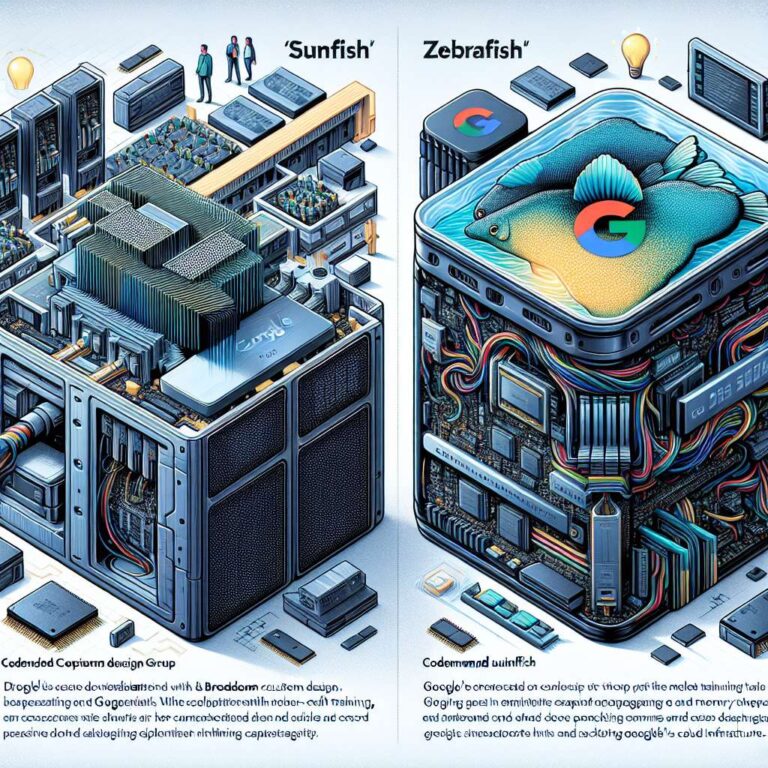

Google is expanding its custom artificial intelligence (AI) infrastructure with two new tensor processing unit (TPU) variants aimed at distinct workloads. The eighth-generation lineup introduces TPUv8ax, codenamed ‘Sunfish’, which is built for training large AI models such as Gemini, and TPUv8x, codenamed ‘Zebrafish’, which is tailored for large-scale inference deployments. The new designs are intended to strengthen Google’s internal cloud hardware stack as external interest in its custom accelerators grows.

For the training-focused TPUv8ax ‘Sunfish’, Google has partnered with Broadcom and its custom design group, which is responsible for end-to-end design, memory integration, supporting hardware, and packaging. Broadcom delivers a finished TPU product that can be dropped into Google’s existing server infrastructure, reducing the integration friction of bringing new training capacity online. This arrangement keeps Broadcom deeply involved in the most complex parts of chip development while allowing Google to standardize deployment across its data centers.

The inference-oriented TPUv8x ‘Zebrafish’ follows a different collaboration model that shifts more responsibility to Google. Here, MediaTek plays a limited role: Google sources wafers and memory directly from suppliers and relies on MediaTek mainly for supporting chips and packaging, areas where Google has less experience. As a result, a larger share of the chip design work now happens in-house, which reduces Google’s dependence on external partners while still acknowledging gaps in its full-stack chip design capabilities. Specific performance and memory figures for TPUv8ax and TPUv8x have not been disclosed, but both are expected to exceed TPUv7 ‘Ironwood’, which delivers 4,614 TeraFLOPS at FP8 precision and carries 192 GB of HBM.