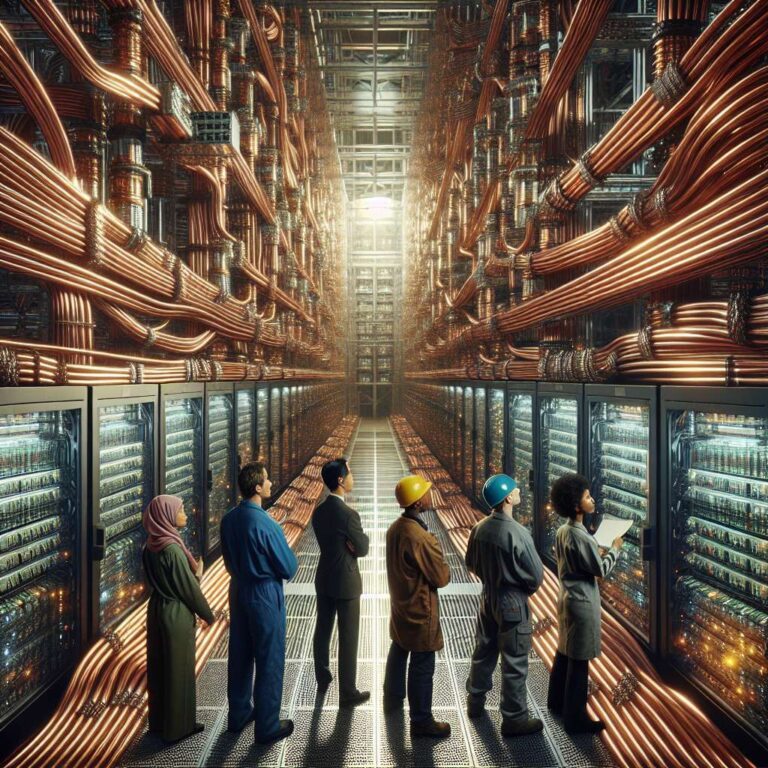

Artificial intelligence factories are massive new data centers built to train and deploy models rather than to serve web pages. The article describes these facilities as GPU‑dense engines stitched together from tens to hundreds of thousands of GPUs, with heavy custom hardware such as liquid‑cooled manifolds, custom busbars and multi‑hundred‑pound copper spines. As examples of the scale and physical complexity inside a rack, NVIDIA highlights NVLink domains that can deliver 130 TB/s of GPU‑to‑GPU bandwidth and an NVLink spine constructed from over 5,000 coaxial cables.

Training modern large language models requires distributed computing across many nodes, and the collective operations involved, such as all‑reduce and all‑to‑all, are sensitive to both latency and bandwidth. The article argues that traditional Ethernet's tolerance for jitter and inconsistent delivery makes it a bottleneck for such workloads. It positions InfiniBand as the gold standard for predictable, zero‑jitter operation and describes NVIDIA Quantum InfiniBand features including Scalable Hierarchical Aggregation and Reduction Protocol (SHARP) technology, adaptive routing and telemetry‑based congestion control. For larger clusters, NVIDIA Quantum‑X800 InfiniBand switches offer 144 ports of 800 Gb/s, hardware‑based SHARPv4 and integrated co‑packaged silicon photonics, while ConnectX‑8 SuperNICs deliver 800 Gb/s per GPU. The article notes that NVIDIA Quantum infrastructure connects the majority of TOP500 supercomputers and reports 35% growth in two years.
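To make the latency and bandwidth sensitivity of all‑reduce concrete, here is a minimal sketch using the textbook alpha‑beta cost model for a ring all‑reduce. The model, the function name `ring_allreduce_time` and the sample numbers (1,024 GPUs, a 1 GiB buffer, 2 µs and 10 µs per‑step latency) are illustrative assumptions and do not come from the article; only the 800 Gb/s link rate is taken from it. Real fabrics use other algorithms and in‑network reduction such as SHARP, which this model does not capture.

```python
def ring_allreduce_time(num_gpus, message_bytes, link_gbps, latency_us):
    """Estimate ring all-reduce time in seconds with the alpha-beta model.

    A ring all-reduce takes 2 * (p - 1) steps (reduce-scatter, then
    all-gather). Each step pays a fixed per-message latency (alpha) and
    moves one chunk of size message_bytes / p over the link (beta term).
    """
    if num_gpus < 2:
        return 0.0  # nothing to exchange with a single participant
    steps = 2 * (num_gpus - 1)
    bytes_per_second = link_gbps * 1e9 / 8  # Gb/s -> bytes/s
    chunk_bytes = message_bytes / num_gpus
    latency_term = steps * latency_us * 1e-6
    bandwidth_term = steps * chunk_bytes / bytes_per_second
    return latency_term + bandwidth_term


# Hypothetical comparison: same link rate, different per-step latency.
one_gib = 1024 ** 3
for latency_us in (2.0, 10.0):
    seconds = ring_allreduce_time(1024, one_gib, 800, latency_us)
    print(f"latency {latency_us:>4} us per step: {seconds * 1e3:.2f} ms")
```

Because the latency term scales with the number of steps, which grows with GPU count, per‑hop delay and jitter matter more as clusters get larger, even when link bandwidth stays fixed.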

To bridge enterprise Ethernet investments, the article describes Spectrum‑X as a purpose‑built Ethernet platform that brings lossless networking, adaptive routing and performance isolation to distributed artificial intelligence workloads. Spectrum‑X supports standards and open stacks such as SONiC and uses NVIDIA SuperNICs based on BlueField‑3 or ConnectX‑8 to provide up to 800 Gb/s RoCE connectivity. For the next leap in scale, silicon photonics and co‑packaged optics are presented as necessary to reach million‑GPU, gigawatt‑class facilities. The switches described offer 128 to 512 ports of 800 Gb/s, for total bandwidths of roughly 100 Tb/s to 400 Tb/s, along with claimed power and resiliency benefits. The piece concludes that open standards matter but that tight, end‑to‑end optimization across GPUs, NICs, switches, cables and software is required to realize these large artificial intelligence factories.
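The quoted switch bandwidths can be sanity‑checked by multiplying port count by port speed. The short sketch below does that arithmetic for the port counts mentioned in the text; the helper name `aggregate_tbps` is hypothetical, and the calculation assumes every port runs at the full 800 Gb/s in one direction, which is a simplification.

```python
def aggregate_tbps(ports, port_gbps=800):
    """Return total one-directional switch bandwidth in Tb/s."""
    return ports * port_gbps / 1000  # Gb/s -> Tb/s


# Port counts taken from the text: 128 and 512 (co-packaged optics range)
# and 144 (Quantum-X800).
for ports in (128, 144, 512):
    print(f"{ports} ports x 800 Gb/s = {aggregate_tbps(ports):.1f} Tb/s")
```

Under these assumptions, 128 and 512 ports work out to 102.4 Tb/s and 409.6 Tb/s, consistent with the rounded 100 Tb/s and 400 Tb/s figures, and a 144‑port switch comes to 115.2 Tb/s.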