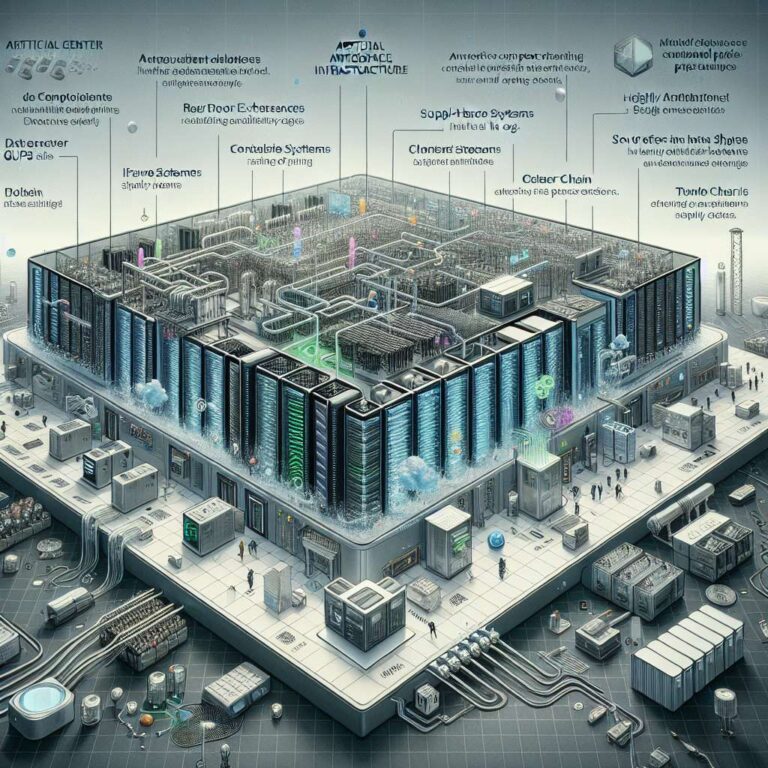

Artificial Intelligence infrastructure is evolving rapidly as data center operators, enterprises and governments respond to escalating compute demands, regulatory pressures and shifting economics. New greenfield facilities built for Artificial Intelligence workloads are being designed around liquid cooling to handle the power density of modern GPUs, while many existing sites are turning to hybrid approaches that combine air and liquid systems. As early as April 2024, surveys indicated that 22 percent of data centers were already using liquid cooling. Rear-door heat exchangers, sidecar systems and advanced two-phase cooling fluids are emerging as transitional options for operators that cannot yet support fully liquid-cooled environments.

Complex regulatory frameworks are reshaping where and how Artificial Intelligence infrastructure is deployed, particularly for enterprise and Fortune 1000 organizations. Privacy regulations in the EU, the UK and many US states, such as California, are restricting all-cloud strategies in highly regulated industries, driving on-premises and colocation deployments for sensitive workloads such as legal services. European laws on Artificial Intelligence are defining prohibited use cases, while in the US, privacy rules increasingly intersect with Artificial Intelligence applications and data retention. At the same time, the cost of running large-scale compute and storage in hyperscale clouds can become very high, pushing organizations to weigh compliance, economics and content control when choosing between cloud and on-premises deployment. Building or accessing deep regulatory expertise is becoming essential to avoid missteps and to design compliant, cost-effective Artificial Intelligence architectures.

Supply chain constraints are another defining feature of the current Artificial Intelligence buildout, affecting key components across the stack. DRAM prices have increased 171 percent year over year according to CTEE and are now outpacing the rise in the price of gold, while NAND flash for SSDs, GPUs and processors are also subject to shortages and extended lead times as demand continues at a fever pitch. Large OEMs with long-term contracts can shield customers from some of this volatility, making strategic partnerships and early component commitments a critical tactic for data center projects. At the same time, workload placement strategies are being refined through a “horses for courses” model, in which simpler, latency-sensitive inference runs at the edge and more intensive models sit in centralized data centers. In the quick-service restaurant segment, for example, Artificial Intelligence agents that translate drive-through orders into kitchen tickets can run locally on relatively light compute to minimize latency. Governments are also advancing sovereign Artificial Intelligence initiatives to control infrastructure, content and language models within national borders, which is increasing demand for localized generative models, regulatory navigation skills and export control expertise. Operators and vendors that cultivate these capabilities, or partner to obtain them, are better positioned to serve sovereign data center projects and to manage the fast-changing Artificial Intelligence landscape.