Ian Leslie argues that research in artificial intelligence and research in cognitive psychology increasingly inform each other, yielding useful concepts for both fields. Drawing on Dwarkesh Patel’s interview with Andrej Karpathy, an OpenAI co-founder who has since left the company, and on the book *Algorithms to Live By* by Brian Christian and Tom Griffiths, he identifies five failure modes that machines and people share. He then develops two of them in detail: model collapse and overfitting.

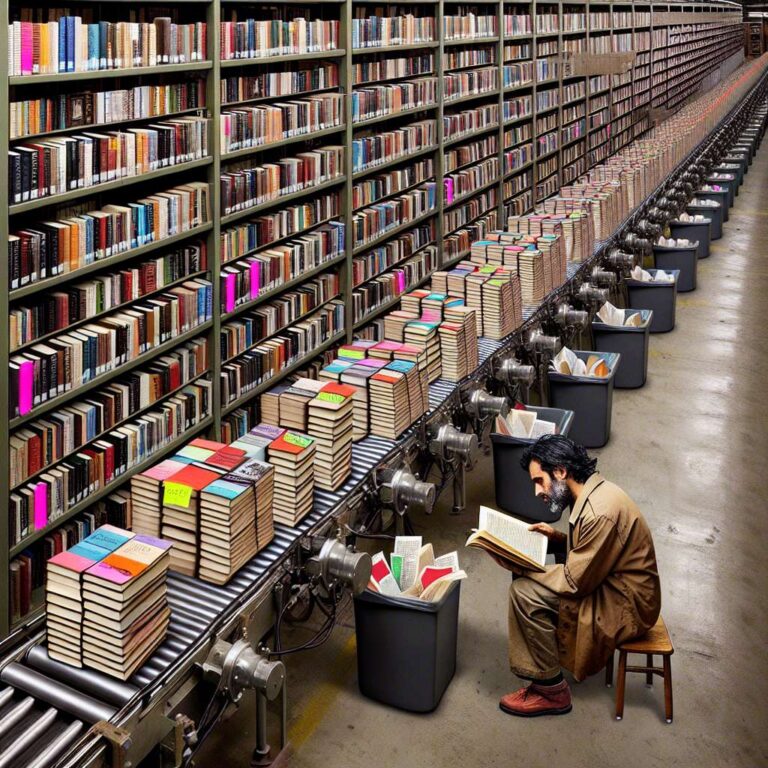

Model collapse, he writes, arises when models trained on rich human data begin learning from model-generated data as the internet fills with synthetic text and images. Because synthetic outputs are more predictable and less diverse than human ones, each generation amplifies the biases and errors of the last while shedding nuance, creativity, and signal. The feedback loop produces a generic, repetitive monoculture. Leslie sees a human analogue: over time, people overfit to their own internal models, grow rigid in their thinking, and rely on the same small set of friends and information sources. He extends the diagnosis to culture, citing pop music written to chase streaming algorithms, formulaic Hollywood scripts, and thin imitations in contemporary visual art, and he describes postmodernism as a kind of cultural model collapse.

The mitigations he proposes include tightening quality control on training data, filtering out content generated by artificial intelligence, and privileging human, rare, and anomalous data while still removing clear nonsense such as QAnon-style conspiracy theories. He points to OpenAI’s hiring of domain experts to create exclusive, high-quality content. For individuals, he recommends actively curating an information diet: reading great books, seeking out novelty, and finding knowledgeable contrarian voices outside familiar circles.
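The feedback loop behind model collapse can be seen in a toy simulation. This is a minimal sketch under loudly stated assumptions, not anything from Leslie’s essay: a "model" is just the frequency table of the tokens it has seen, the "human" data is a hypothetical set of 50 token types with a long-tailed distribution, and each generation is trained only on the previous generation’s synthetic output. The function names `train`, `generate`, and `simulate_collapse` are illustrative.

```python
import random
from collections import Counter


def train(samples):
    """Toy 'training': the model is the empirical frequency of each token type."""
    counts = Counter(samples)
    total = len(samples)
    return {token: n / total for token, n in counts.items()}


def generate(model, n, rng):
    """Draw n synthetic samples from the model's learned distribution."""
    tokens = list(model)
    weights = [model[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=n)


def simulate_collapse(generations=30, n=200, seed=0):
    """Return how many distinct token types each generation's model knows."""
    rng = random.Random(seed)
    # Hypothetical 'human' data: 50 token types with a long tail
    # (type k has weight 1/(k+1), so high-numbered types are rare).
    vocab = list(range(50))
    data = rng.choices(vocab, weights=[1 / (k + 1) for k in vocab], k=n)
    diversity = []
    for _ in range(generations):
        model = train(data)
        diversity.append(len(model))
        # The next generation learns only from synthetic output.
        data = generate(model, n, rng)
    return diversity


print(simulate_collapse())
```

The printed list shows how many distinct token types survive in each generation. Because a model can only emit types it has seen, the count can never rise, and rare types tend to vanish first once a finite sample happens to miss them: a crude analogue of the lost nuance and shrinking diversity the essay describes. Real language and image models are vastly richer, so treat this as an illustration of the mechanism rather than a measurement of it.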

Overfitting, in machine learning, occurs when a model memorizes its training set instead of learning patterns that generalize. It performs well on familiar examples but fails on new inputs. Engineers counter it with regularization, which penalizes reliance on overly specific patterns, and with early stopping, which halts training before the model becomes too closely tuned to a particular dataset. Leslie sees a parallel in everyday life: routines provide stability but can narrow perception and make unfamiliar situations hard to interpret, leading either to misplaced confidence outside one’s domain or to a fear that shrinks one’s world. He suggests periodically breaking habits to discover better approaches, citing a study in which a London Tube strike forced commuters to try new routes, leading some to find more efficient ones. He also highlights neuroscientist Erik Hoel’s theory that dreams inject noise that disrupts rigid neural patterns, remixing mundane memories into bizarre forms to preserve mental flexibility, much as the Fool in *King Lear* keeps sense clear by inverting it.
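The difference between memorizing and generalizing can be made concrete with a toy comparison. This sketch is not from the essay, and everything in it is hypothetical: the data follow y = 2x + 1 plus noise, a lookup table stands in for an overfit model, and an ordinary least-squares line stands in for a model that has learned the underlying pattern. Training uses even x values; testing uses odd x values the models have never seen.

```python
import random


def make_data(xs, rng, noise=0.5):
    """Hypothetical ground truth: y = 2x + 1 plus Gaussian noise."""
    return [(x, 2 * x + 1 + rng.gauss(0, noise)) for x in xs]


def fit_memorizer(train):
    """Overfit model: recalls every training label exactly; for unseen
    inputs it can only fall back on the average training label."""
    table = dict(train)
    fallback = sum(y for _, y in train) / len(train)
    return lambda x: table.get(x, fallback)


def fit_line(train):
    """Generalizing model: ordinary least-squares line y = a*x + b."""
    n = len(train)
    mean_x = sum(x for x, _ in train) / n
    mean_y = sum(y for _, y in train) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in train)
    sxx = sum((x - mean_x) ** 2 for x, _ in train)
    a = sxy / sxx
    b = mean_y - a * mean_x
    return lambda x: a * x + b


def mse(model, data):
    """Mean squared error of a model's predictions on (x, y) pairs."""
    return sum((model(x) - y) ** 2 for x, y in data) / len(data)


rng = random.Random(42)
train_set = make_data(range(0, 20, 2), rng)  # even x: the familiar examples
test_set = make_data(range(1, 20, 2), rng)   # odd x: never seen in training

memorizer = fit_memorizer(train_set)
line = fit_line(train_set)
print("memorizer: train MSE", mse(memorizer, train_set), "test MSE", mse(memorizer, test_set))
print("line:      train MSE", mse(line, train_set), "test MSE", mse(line, test_set))
```

The memorizer scores exactly zero error on its training pairs yet does far worse than the line on the unseen inputs, which is the pattern the paragraph describes: excellent on familiar examples, poor on new ones. The countermeasures the paragraph mentions (penalizing reliance on specific patterns, stopping training early) are not shown here; both aim to keep a flexible model from drifting toward the lookup-table extreme.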