The article examines TabPFN, a transformer-based foundation model designed specifically for tabular data, and traces its evolution from the original release through TabPFN-2 to the more recent TabPFN-2.5. Early versions were limited to at most 1,000 training samples and 100 purely numerical features, which restricted their real-world applicability. TabPFN-2.5 can now handle close to 100,000 data points and around 2,000 features, making it more practical for production-like prediction tasks while keeping a familiar scikit-learn-style interface and requiring minimal preprocessing for mixed feature types, missing values, and outliers.

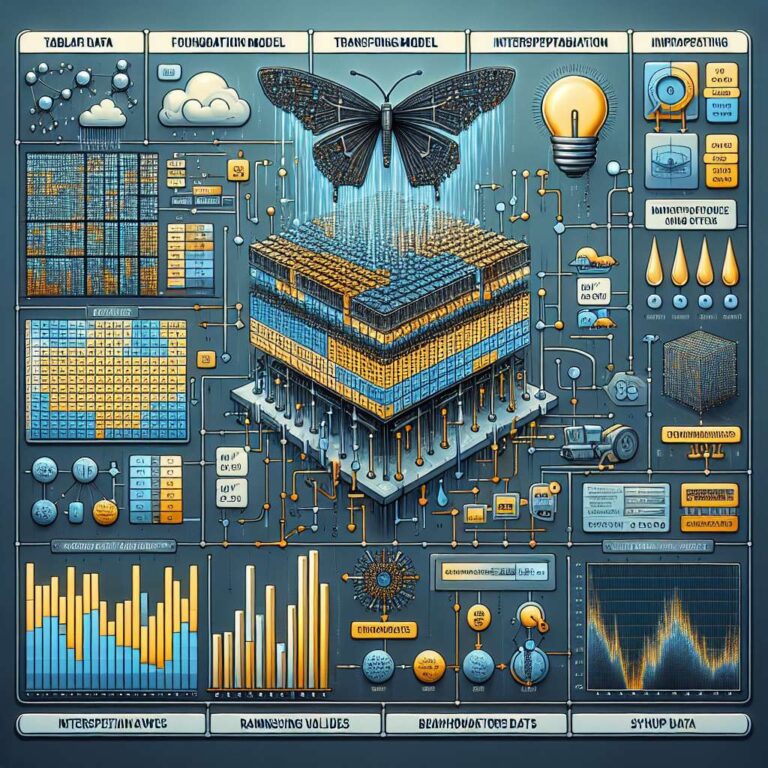

The core motivation behind TabPFN is to bring the foundation model paradigm that transformed text and image modeling to tabular data, where traditional workflows usually train a new model for every new dataset and reuse is limited. Instead of optimizing a model for a single dataset, TabPFN is trained on a prior over many synthetic tabular datasets so that it can perform zero-shot inference on new tasks through a single forward pass without retraining. The training pipeline relies on in-context learning, treating an entire dataset as a token, and uses a highly parametric structural causal model to generate diverse synthetic datasets. TabPFN-2 was trained on 130 million such datasets, which encourages the model to learn general patterns across varied tabular problems.
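
To make the idea of a synthetic prior concrete, here is a minimal sketch of drawing one classification dataset from a random structural causal model. It is a toy illustration, not TabPFN's actual prior: the function name `sample_scm_dataset`, the tanh nonlinearity, the 0.5 edge probability, and the median-threshold label are all assumptions chosen for brevity.

```python
import numpy as np


def sample_scm_dataset(n_rows=200, n_nodes=8, n_features=5, seed=0):
    """Draw one toy classification dataset from a random structural causal model.

    Illustrative only: every modeling choice here is an assumption, and the
    prior used to train TabPFN is far richer than this.
    """
    rng = np.random.default_rng(seed)

    # Random DAG: a strictly upper-triangular mask means node j can only
    # depend on nodes i < j, so the graph has no cycles.
    mask = np.triu(rng.random((n_nodes, n_nodes)) < 0.5, k=1)
    weights = rng.normal(size=(n_nodes, n_nodes)) * mask

    # Propagate noise through the graph in topological (index) order.
    values = np.zeros((n_rows, n_nodes))
    for j in range(n_nodes):
        noise = rng.normal(size=n_rows)
        values[:, j] = np.tanh(values @ weights[:, j]) + noise

    # Pick distinct nodes: the first becomes the target, the rest features.
    chosen = rng.choice(n_nodes, size=n_features + 1, replace=False)
    target_node, feature_nodes = chosen[0], chosen[1:]

    X = values[:, feature_nodes]
    # Binarize the target at its median to get a balanced binary label.
    target = values[:, target_node]
    y = (target > np.median(target)).astype(int)
    return X, y


# A "prior over datasets" is then just a stream of many such draws.
datasets = [sample_scm_dataset(seed=s) for s in range(3)]
```

Each seed yields a different causal graph and therefore a different relationship between features and label, which is the property the pretraining setup described above relies on.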

Architecturally, TabPFN adapts the transformer to tabular structure with a two-stage attention mechanism that first captures relationships between features within a row and then models how each feature behaves across rows, making it insensitive to row and column order and able to scale to larger tables. In practice, users can install TabPFN as a Python package or use an API client, and the article walks through a Kaggle notebook implementation where a TabPFN-2.5 classifier is compared against a vanilla XGBoost model on a binary rainfall prediction task. Using a standard train and validation split and evaluating with ROC-AUC, the TabPFN classifier achieves a ROC-AUC of 0.8722, while the untuned XGBoost baseline records 0.8515. The TabPFN-based solution places 22nd on the public leaderboard, illustrating its strong out-of-the-box performance.
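
The two-stage attention idea can be sketched in a few lines of NumPy. This is a simplified illustration under stated assumptions, not TabPFN's implementation: it uses a single head with no learned projections, assumes each table cell already has a `d`-dimensional embedding, and the names `self_attention` and `two_stage_attention` are hypothetical. Its purpose is to show why attending first across features and then across rows does not depend on how rows or columns are ordered.

```python
import numpy as np


def softmax(scores):
    # Numerically stable softmax over the last axis.
    scores = scores - scores.max(axis=-1, keepdims=True)
    exp = np.exp(scores)
    return exp / exp.sum(axis=-1, keepdims=True)


def self_attention(tokens):
    """Single-head self-attention over the second-to-last axis.

    tokens has shape (..., n_tokens, d). Queries, keys and values are the
    tokens themselves (no learned projections), which keeps the sketch short.
    """
    d = tokens.shape[-1]
    scores = tokens @ np.swapaxes(tokens, -1, -2) / np.sqrt(d)
    return softmax(scores) @ tokens


def two_stage_attention(cells):
    """cells has shape (n_rows, n_features, d): one embedding per table cell."""
    # Stage 1: within each row, every feature attends to all features.
    cells = cells + self_attention(cells)

    # Stage 2: within each column, every row attends to all rows.
    by_column = np.swapaxes(cells, 0, 1)       # (n_features, n_rows, d)
    by_column = by_column + self_attention(by_column)
    return np.swapaxes(by_column, 0, 1)        # (n_rows, n_features, d)


rng = np.random.default_rng(0)
table = rng.normal(size=(6, 4, 8))  # 6 rows, 4 features, 8-dim embeddings
out = two_stage_attention(table)

# Shuffling rows or columns of the input shuffles the output the same way.
row_perm = rng.permutation(6)
col_perm = rng.permutation(4)
assert np.allclose(two_stage_attention(table[row_perm]), out[row_perm])
assert np.allclose(two_stage_attention(table[:, col_perm]), out[:, col_perm])
```

Because no positional information is injected along either axis, the output for a given cell is the same wherever its row or column sits in the table, which is the order insensitivity described above.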

The author also addresses interpretability, noting that transformer models are not inherently transparent and that post-hoc tools are needed to understand predictions. TabPFN offers an Interpretability Extension that integrates with SHAP, enabling users to compute SHAP values and visualize feature importance through global importance plots and beeswarm summaries. In the rainfall experiment, SHAP analysis indicates that cloud cover, sunshine, humidity, and dew point contribute most strongly to predictions, while wind direction, pressure, and some temperature variables are less influential, with the caveat that SHAP reveals learned associations rather than causal relationships. The article concludes by highlighting additional capabilities such as time series forecasting, anomaly detection, synthetic data generation, and embedding extraction as promising areas for future exploration, along with potential domain-specific fine-tuning.
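
To illustrate what the SHAP values in such plots represent, the sketch below computes exact Shapley values for a tiny hand-written model and then aggregates them into a global importance score. It does not use the TabPFN Interpretability Extension or the `shap` library: `exact_shapley` and `toy_model` are hypothetical names, the all-zeros baseline is an assumption, and the brute-force enumeration is only feasible for a handful of features.

```python
from itertools import combinations
from math import factorial

import numpy as np


def exact_shapley(predict, x, baseline):
    """Exact Shapley values for one row x, relative to a baseline row.

    A feature that is "absent" from a coalition takes its baseline value.
    Cost grows as 2**n_features, so this only works for tiny examples.
    """
    n = len(x)
    phi = np.zeros(n)

    def value(coalition):
        # Model output when only the features in `coalition` are "present".
        row = baseline.copy()
        idx = np.array(coalition, dtype=int)
        row[idx] = x[idx]
        return predict(row)

    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                phi[i] += weight * (value(subset + (i,)) - value(subset))
    return phi


def toy_model(row):
    # Hypothetical stand-in for a fitted classifier's score.
    return 2.0 * row[0] + row[1] * row[2]


rows = np.array([[1.0, 2.0, 3.0], [0.5, -1.0, 2.0], [2.0, 0.0, 1.0]])
baseline = np.zeros(3)
shap_values = np.array([exact_shapley(toy_model, r, baseline) for r in rows])

# Global importance, as in a SHAP bar plot: mean absolute value per feature.
global_importance = np.abs(shap_values).mean(axis=0)
```

For each row, the values sum to the difference between the model's output on that row and on the baseline, so they describe how the model distributes its prediction across features. That is an attribution of learned associations, consistent with the caveat above that SHAP does not establish causality.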