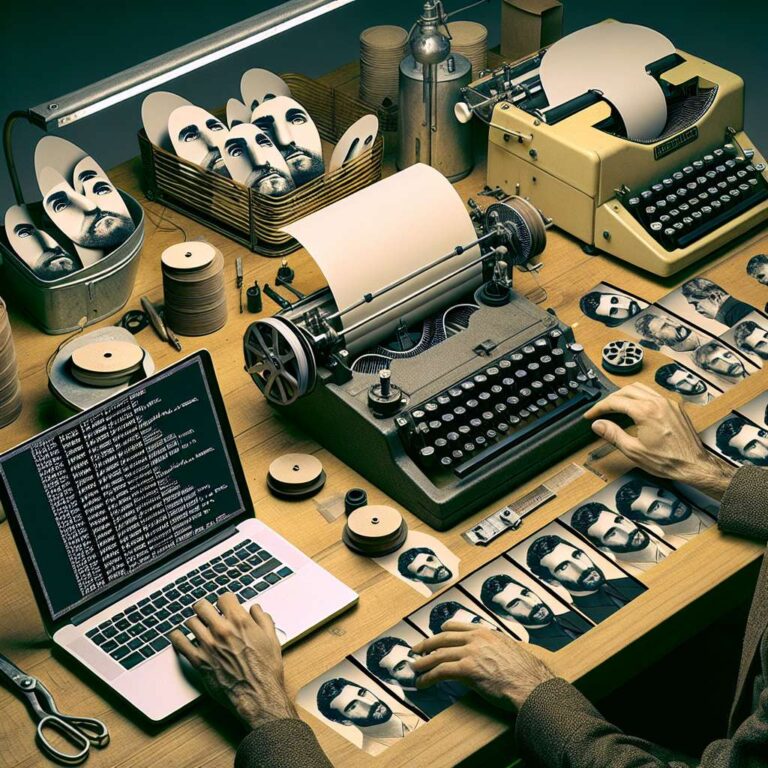

Developers building scalable systems for faceless user-generated content (UGC) are combining ffmpeg with several artificial intelligence models. The workflow connects avatar face and lip-sync generation, script writing with a large language model (LLM), and image synthesis into an automated pipeline for dynamic video production.
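The workflow can be sketched as a chain of stages in which each output feeds the next. The `Stages` and `produce_video` names are hypothetical. Each hook stands in for whichever LLM, speech synthesis, avatar, or image model a team actually uses. A separate speech step is assumed because the avatars are described as syncing to generated speech.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import Callable


@dataclass
class Stages:
    """Hypothetical stage hooks; each would wrap a real model or service."""
    write_script: Callable[[str], str]            # LLM: topic -> narration text
    synthesize_speech: Callable[[str], Path]      # speech model: text -> audio file
    lip_sync_avatar: Callable[[Path], Path]       # avatar model: audio -> talking-head video
    generate_background: Callable[[str], Path]    # image model: prompt -> still image
    compose: Callable[[Path, Path, Path], Path]   # ffmpeg step: (background, avatar, audio) -> final video


def produce_video(topic: str, stages: Stages) -> Path:
    """Run the stages in dependency order and return the path of the final video."""
    script = stages.write_script(topic)
    audio = stages.synthesize_speech(script)
    # The avatar is generated from the audio, since the speech track drives the mouth movements.
    avatar = stages.lip_sync_avatar(audio)
    background = stages.generate_background(f"Background illustration for a video about {topic}")
    return stages.compose(background, avatar, audio)


if __name__ == "__main__":
    # Stub implementations that only return placeholder paths, for wiring tests.
    stub = Stages(
        write_script=lambda topic: f"Three quick facts about {topic}.",
        synthesize_speech=lambda text: Path("narration.wav"),
        lip_sync_avatar=lambda audio: Path("avatar.mp4"),
        generate_background=lambda prompt: Path("background.png"),
        compose=lambda bg, av, au: Path("final.mp4"),
    )
    print(produce_video("sourdough baking", stub))
```

Passing the stages in as plain callables keeps the orchestration independent of any particular model vendor. Swapping one image model for another then only touches one hook.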

At the core of these systems is ffmpeg, the open-source multimedia framework, which handles video composition: scaling and layering the visual tracks, adding the audio, and encoding the final file. Artificial intelligence models generate avatars with facial features and lip movements that sync convincingly to the generated speech. Large language models write the scripts, which makes it practical to vary voice and narrative across content niches, while separate models generate background visuals or images that match the video’s theme or message.
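The following is a minimal sketch of the composition step. It assumes the avatar model outputs a talking-head clip, the image model outputs a still PNG, and the speech is saved as a separate audio file. The function below builds a single ffmpeg invocation from those three inputs. The function name, the file names, the 1080×1920 portrait canvas, and the avatar placement are illustrative assumptions, not part of any specific pipeline.

```python
import shlex
from pathlib import Path


def build_compose_command(
    background: Path,
    avatar: Path,
    narration: Path,
    output: Path,
    width: int = 1080,
    height: int = 1920,
) -> list[str]:
    """Return an ffmpeg argv that overlays an avatar clip on a still background
    and muxes in the narration audio. Layout values are illustrative assumptions."""
    avatar_width = width // 2
    filter_graph = (
        f"[0:v]scale={width}:{height},setsar=1[bg];"         # fit background to the canvas
        f"[1:v]scale={avatar_width}:-2[av];"                  # shrink avatar, keep aspect ratio
        "[bg][av]overlay=(W-w)/2:H-h-100:shortest=1[v]"      # center avatar near the bottom
    )
    return [
        "ffmpeg", "-y",
        "-loop", "1", "-i", str(background),  # still image, looped into a video stream
        "-i", str(avatar),                      # lip-synced avatar clip
        "-i", str(narration),                   # generated speech
        "-filter_complex", filter_graph,
        "-map", "[v]", "-map", "2:a",           # composed video + narration audio
        "-c:v", "libx264", "-pix_fmt", "yuv420p",
        "-c:a", "aac",
        "-shortest",                            # stop when the shortest stream ends
        str(output),
    ]


if __name__ == "__main__":
    cmd = build_compose_command(
        Path("background.png"), Path("avatar.mp4"), Path("narration.wav"), Path("out.mp4")
    )
    print(shlex.join(cmd))
    # To render, pass cmd to subprocess.run(cmd, check=True).
```

The function returns an argument list rather than one shell string. This avoids quoting problems with the `-filter_complex` graph, and an orchestrator can hand the list directly to `subprocess.run`. Because `-loop 1` turns the still image into an endless stream, the output length is bounded by `shortest=1` on the overlay and by the `-shortest` output option.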

The result is a streamlined end-to-end process that lets content creators or automated agents quickly assemble multimedia videos without appearing on camera themselves. This approach is especially attractive to marketers, educators, and entertainment producers who want to stay anonymous or to produce more with less manual effort. By orchestrating these tools, teams can bootstrap artificial intelligence-based user-generated content platforms that produce faceless yet engaging video.