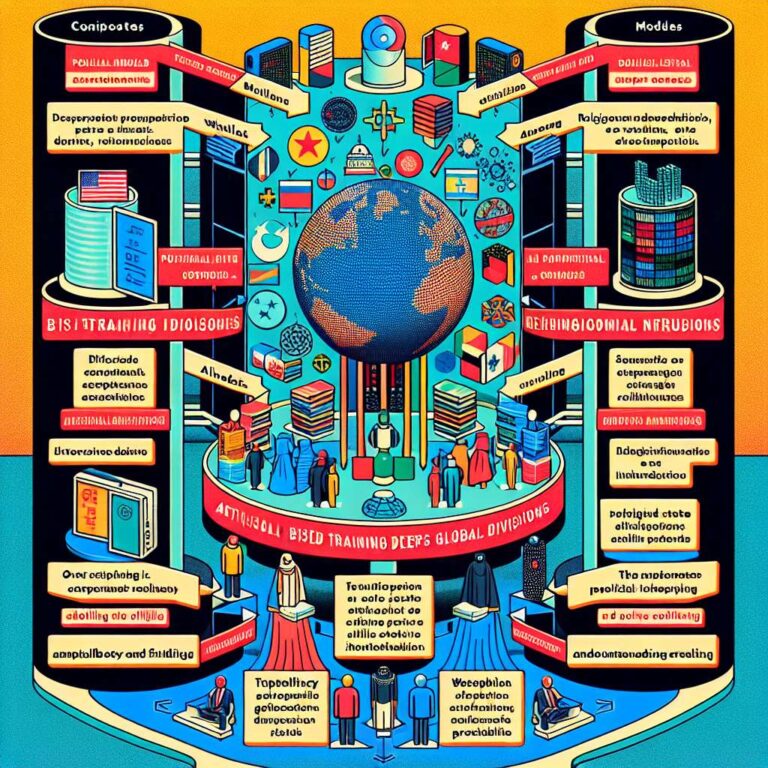

The article warns that not all Artificial Intelligence systems are built on the same foundations, and that regional ideologies embedded in training data could deepen social and geopolitical divisions. While developers in the United States and Europe prioritize fairness and bias mitigation, other jurisdictions are aligning models with religious or state doctrines. With opaque training sources, outputs risk being mistaken for objective truth, raising the stakes for trust, history, and social cohesion.

In Western markets, significant investments target debiasing through audits and diverse datasets. Yet critics argue the safety-first posture can overreach, leading chatbots to block innocuous queries and dampen creativity and access to information. The piece contends this over-correction illustrates how efforts to prevent harm can introduce their own form of restriction if not balanced with usability and openness.

By contrast, parts of the Middle East are steering Artificial Intelligence through a religious lens. In Saudi Arabia, Humain has launched a chatbot aligned with Islamic values, and the United Arab Emirates is integrating Artificial Intelligence into fatwa issuance while emphasizing adherence to Sharia. Zetrix AI’s NurAI, described as a Shariah-aligned large language model, positions itself as an alternative to Western and Chinese systems. Proponents cite cultural relevance, while detractors warn of entrenching outdated ideologies and potential clashes with global human rights norms.

China exemplifies state-controlled development, with models trained to reflect government-approved narratives. A leaked database detailed efforts to automatically detect and suppress politically sensitive content, turning passive censorship into proactive control. The article reports that these systems serve surveillance and social management, and are tied to disinformation aimed at foreign democracies. Officials in the United States have raised alarms as censorship appears to intensify with each model update, reinforcing state narratives across everyday applications.

Across regions, opaque training pipelines are a central risk. The article highlights interest in blockchain as a path to transparency, proposing hashed and timestamped datasets and auditable model lifecycles without exposing sensitive information. Advocates also call for labeling models with their ideological underpinnings so users can choose tools aligned with their values. Questions raised on X underscore the need for verifiable processes that address bias at the source, not just trace provenance.

The stakes extend from civil liberties to public health. The piece cites risks from surveillance integration and social credit systems, bias in medical models trained largely on data from wealthy nations, and political leanings in model outputs. Global regulation is accelerating, with legislative mentions of Artificial Intelligence up 21.3 percent across 75 countries since 2023. The United States released America’s AI Action Plan in July 2025 to maintain leadership with fewer barriers, while the European Union’s AI Act imposes a risk-based regime, bans social scoring, restricts emotion recognition at work, and demands disclosures from general-purpose models. China’s September 2025 policies seek visible watermarking of deepfakes, tighter oversight, and alignment with socialist values, backed by a 13-point governance roadmap. The article concludes that international cooperation on transparent, verifiable training data is essential to prevent Artificial Intelligence from becoming a divider rather than a liberator.