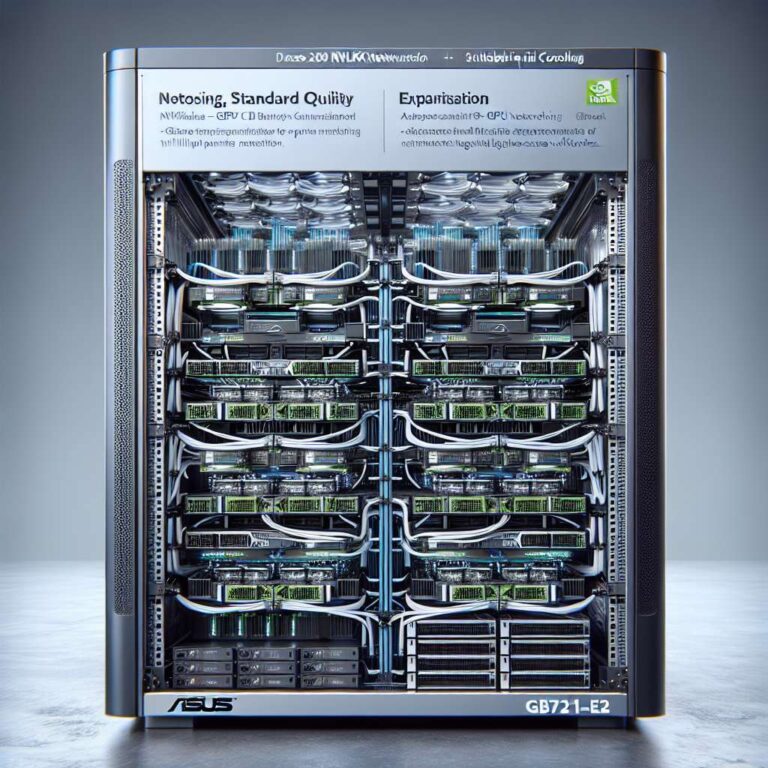

ASUS today announced the rollout of the XA GB721-E2, a rack-scale system based on the NVIDIA GB300 NVL72 platform. The system is aimed at large-scale model training, high-throughput inference, and advanced AI and high-performance computing (HPC) workloads. ASUS pitches it as combining what it calls breakthrough performance with liquid cooling and rack-level serviceability, targeting enterprises and research institutions that need more accelerated compute capacity.

At the core of the GB300 NVL72 architecture are 36 NVIDIA Grace CPUs and 72 NVIDIA Blackwell Ultra GPUs, all integrated within a single NVIDIA NVLink domain. According to the companies, this configuration delivers ultra-low-latency, high-bandwidth GPU-to-GPU communication for trillion-parameter workloads. This tightly coupled NVLink fabric is central to the system's positioning for scale-up model training and for latency-sensitive inference that depends on high inter-GPU bandwidth.

Networking and expansion are handled through a choice of the NVIDIA Quantum-X800 InfiniBand platform or the NVIDIA Spectrum-X Ethernet platform, paired with the NVIDIA ConnectX-8 SuperNIC. ASUS and NVIDIA position this setup for cluster-scale expansion and high-throughput inference, addressing what they describe as the needs of enterprise AI factories. The announcement emphasizes a balance of compute density, liquid-cooled thermal management, and rack-scale maintainability, with the goal of sustaining large deployments in both production and research environments.