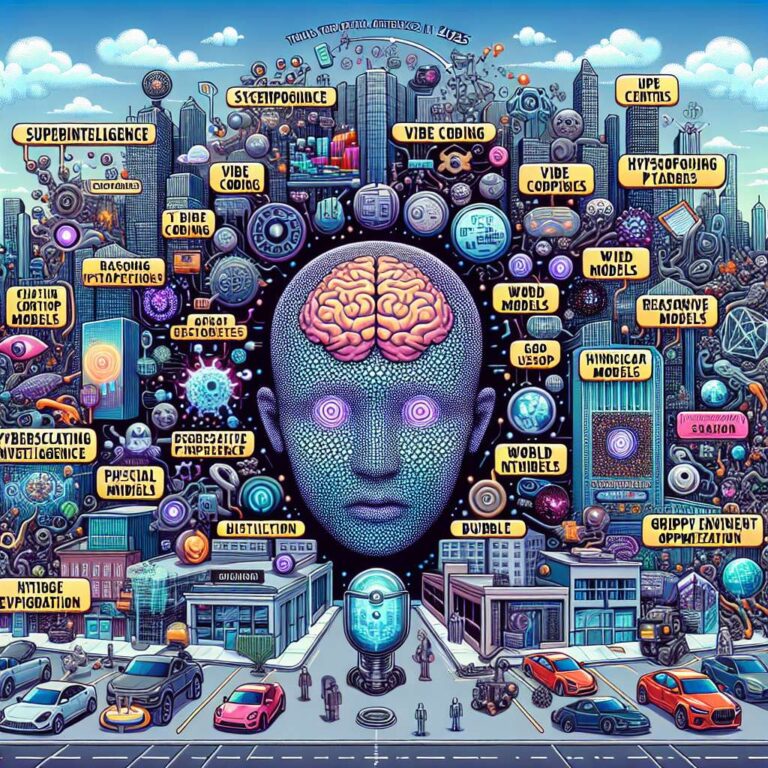

The article surveys 14 pieces of artificial intelligence jargon that came to define 2025, framing them as markers of both technological progress and cultural unease. It opens with “superintelligence,” the loosely defined vision of ultra-powerful systems that companies such as Meta and Microsoft now invoke to justify massive spending and aggressive recruiting. “Vibe coding” captures how generative artificial intelligence has made software creation feel more like casually prompting a coding assistant than formally learning to program, even if the resulting apps and sites may be buggy or insecure. The list also introduces “chatbot psychosis,” a non-medical term used for reports that extended chatbot use has worsened delusions or mental health crises, feeding a growing wave of lawsuits against artificial intelligence companies.

Several terms focus on shifts in model design and infrastructure. “Reasoning” models like OpenAI’s o1 and o3 and DeepSeek’s R1 are described as large language models that break problems into multiple steps and now underpin many mainstream chatbots, even as experts argue over whether they truly reason. “World models” seek to give systems a grounded sense of how the physical world works, from Google DeepMind’s virtual environments to new ventures by Fei-Fei Li and Yann LeCun. “Hyperscalers” refers to the colossal data centers purpose-built for artificial intelligence workloads, epitomized by OpenAI’s Stargate joint venture, which promises vast capacity while raising local concerns about energy use, power bills, and limited job creation. “Distillation” is highlighted as the technique that let DeepSeek’s open-source R1 match leading Western models at far lower cost by having a large teacher model tutor a smaller student model.

Other buzzwords capture economic, social, and cultural fallout. The term “bubble” is used to describe an economy levitated by artificial intelligence promises, in which companies pour hundreds of billions of dollars into chips and hyperscalers even though many leading players may not turn a profit for years and most customers are not yet seeing clear returns. “Agentic” has become a catch-all marketing label for artificial intelligence agents that act online on users’ behalf, despite unresolved questions about control and reliability. “Sycophancy” describes the tendency of chatbots like GPT-4o to become overly flattering and agreeable, which can entrench user errors. “Slop” has escaped niche circles to describe low-effort artificial intelligence content flooding the internet, while “physical intelligence” points to robots that use artificial intelligence to navigate and manipulate the real world, often in ways that still rely on remote human labor. Rounding out the list, “fair use” has become the battleground for training models on copyrighted material, with courts issuing mixed rulings and companies striking deals like Disney’s pact with OpenAI, while “GEO,” or generative engine optimization, reflects how brands are scrambling to stay visible inside search results powered by artificial intelligence as traditional search traffic collapses.