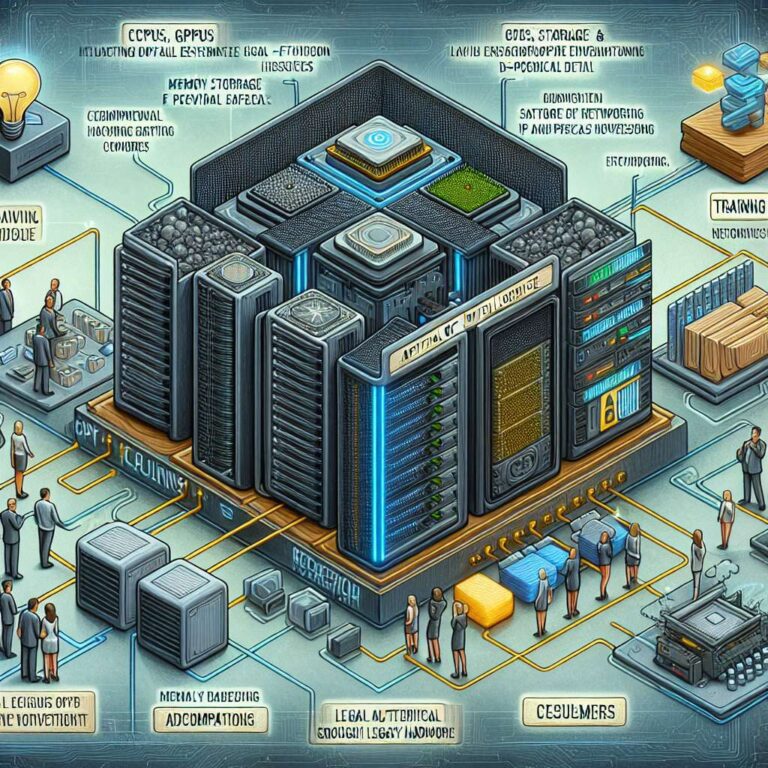

The artificial intelligence section outlines how rapidly growing workloads are driving demand for specialized hardware, including the latest CPUs, GPUs, ASICs, and FPGAs that power training and inference for large language models. Coverage centers on both the chips themselves and the broader systems that host them, from memory and storage technologies to data center networking. It also extends to the different types of large language models, the methods used to train them, and how these models are deployed in real-world inference workloads across industries.

Recent reporting highlights that integrating artificial intelligence into critical systems can create unexpected risks. A new investigation found that after artificial intelligence was added to a sinus surgery system, malfunctions rocketed from eight to 100 incidents, underscoring the safety and regulatory questions around automated surgical tools. In parallel, legal and ethical concerns surface in disputes over training data: Nvidia says it did not use pirated books to train its artificial intelligence models and argues that alleged contact with a piracy site does not prove copyright infringement. Reliability issues can also emerge in consumer-facing deployments, as illustrated by AI.com’s $85 million Super Bowl ad campaign, which drove a surge of traffic that crashed the site’s servers.

On the infrastructure side, the co-CEO of China’s top chipmaker SMIC warns that artificial intelligence data center capacity being rushed out in the U.S. and other regions could remain idle if projects fail to materialize. Even so, companies are exploring ambitious concepts like orbiting data centers, with SpaceX acquiring xAI to pursue a goal of hitting 100 gigawatts of compute in space. Memory and interconnect technologies remain central, with Intel co-developing Z-Angle Memory to compete with HBM in artificial intelligence data centers and Samsung, SK Hynix, and Micron teaming up to block memory hoarding. At the same time, a report from consumer firm Circana shows that one-third of consumers reject artificial intelligence on their devices, with two-thirds of those opposed saying it is not useful, revealing a gap between industry investment and user demand. Experiments at the other end of the spectrum, such as a developer creating a conversational artificial intelligence that can run on a 1976 Zilog Z80 CPU with 64KB of RAM, showcase how minimal models can be adapted to legacy hardware, even if their outputs are necessarily terse.