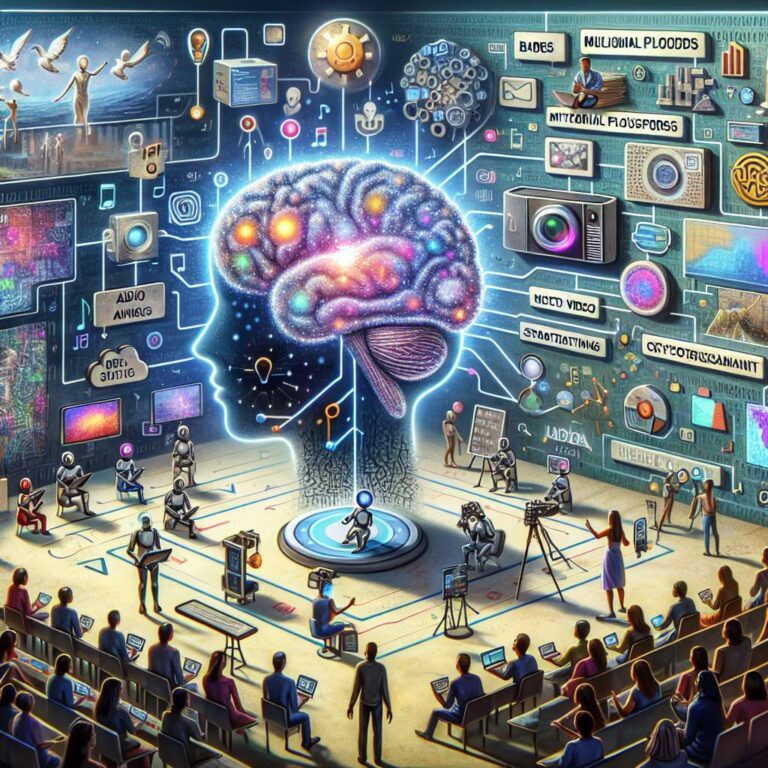

Synthetic media reached a turning point in 2025 as artificial intelligence content creation tools moved from peripheral helpers to central infrastructure for digital information and entertainment. Integrated, multimodal platforms replaced single-purpose systems, allowing a single prompt to generate text, video, audio, avatars, and music in one coordinated workflow. These environments model relationships between narrative, visuals, pacing, and sound, so a request for a thriller sequence can yield a sharp, staccato score and a fast-cut storyboard, while a nature piece receives soft, ambient audio and slower editing. The collapse of traditional content silos is forcing creative teams to rethink workflows, roles, and the boundaries between disciplines.

Hyper-personalization has become the default across media and marketing. Artificial intelligence tools now rely on extensive user data, governed by increasingly formal consent frameworks, to assemble content for an audience of one. News outlets generate multiple versions of the same story, so a data-focused reader receives visualizations and technical detail while others see simplified analogies and interactive explanations. E-learning content adapts difficulty, pacing, and modality to individual progress and preferences. In marketing, artificial intelligence systems generate thousands of ad variants, run automated A/B tests in near real time, and deploy winning creatives to narrow demographic segments, fragmenting the idea of a single mass audience into billions of micro-segments.
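
To make the A/B testing step concrete, here is a minimal sketch of how a system might decide whether one ad variant has beaten another. It assumes a standard two-proportion z-test on click-through rates. The function names, the sample figures, and the 1.96 threshold (roughly 95% confidence, two-sided) are illustrative assumptions, not a description of any particular ad platform.

```python
import math
from typing import Optional


def two_proportion_z(clicks_a: int, views_a: int, clicks_b: int, views_b: int) -> float:
    """Return the z-score for the difference in click-through rate (B minus A)."""
    rate_a = clicks_a / views_a
    rate_b = clicks_b / views_b
    # Pooled rate under the null hypothesis that both variants perform equally.
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    if std_err == 0:
        return 0.0  # no variation at all, so no evidence either way
    return (rate_b - rate_a) / std_err


def pick_winner(clicks_a: int, views_a: int, clicks_b: int, views_b: int,
                z_threshold: float = 1.96) -> Optional[str]:
    """Return "A" or "B" if the difference is significant, otherwise None."""
    z = two_proportion_z(clicks_a, views_a, clicks_b, views_b)
    if z > z_threshold:
        return "B"
    if z < -z_threshold:
        return "A"
    return None  # keep collecting data before deploying either creative


# Hypothetical counts: variant B gets 150 clicks from 1,000 views, A gets 100.
print(pick_winner(100, 1000, 150, 1000))
```

The computation itself is trivial. In practice the speed of a test is limited by how quickly enough impressions accumulate for the difference to become statistically meaningful.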

Ethical and governance concerns have intensified alongside these technical gains. Synthetic media that is nearly indistinguishable from captured footage has made content provenance a central issue. Coalitions of technology firms, media organizations, and governments are pushing watermarking and cryptographic authentication standards that certify artificial intelligence-generated work and help protect intellectual property in a world of infinite remixing. Bias mitigation has grown more systematic, with independent ethics boards using adversarial testing and new “bias dashboards” to surface representational problems and suggest more inclusive alternatives. Regulation is beginning to mandate clear disclosure of artificial intelligence-generated content, especially in news and political advertising. At the same time, human creators are repositioning themselves as strategists, curators, and directors who define concepts, prompts, and brand narratives while delegating repetitive production to machines.
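
As a rough illustration of the cryptographic authentication idea, the sketch below binds a piece of content to a signed disclosure record, so that any later change to the content or to its "AI-generated" label can be detected. It is a simplification under stated assumptions. It uses an HMAC with a shared secret from the Python standard library, whereas real provenance standards generally rely on public-key signatures and certificate chains. The key, the field names, and the functions `make_manifest` and `verify_manifest` are all hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret for demonstration only. Production provenance
# systems would typically use public-key signatures instead.
SECRET_KEY = b"demo-key-not-for-production"


def make_manifest(content: bytes, generator: str) -> dict:
    """Build a signed record tying the content hash to a disclosure label."""
    claims = {
        "sha256": hashlib.sha256(content).hexdigest(),
        "generator": generator,
        "ai_generated": True,
    }
    # Serialize with sorted keys so signer and verifier hash identical bytes.
    payload = json.dumps(claims, sort_keys=True).encode("utf-8")
    signature = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    return {"claims": claims, "signature": signature}


def verify_manifest(content: bytes, manifest: dict) -> bool:
    """Check that the claims are untampered and still match the content."""
    payload = json.dumps(manifest["claims"], sort_keys=True).encode("utf-8")
    expected = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, manifest["signature"])
    hash_ok = hashlib.sha256(content).hexdigest() == manifest["claims"]["sha256"]
    return signature_ok and hash_ok


clip = b"synthetic video bytes"
manifest = make_manifest(clip, generator="example-model")
print(verify_manifest(clip, manifest))            # original content
print(verify_manifest(b"edited bytes", manifest)) # altered content
```

Verification fails if either the content bytes or the claims change after signing. That is the property disclosure rules depend on: a label cannot be silently stripped or flipped without invalidating the record.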

Across industries, the tools are reshaping production. Software teams use artificial intelligence to draft entire modules and documentation, compressing development cycles. Journalists lean on automated data mining, templated first drafts for routine financial or sports coverage, and real-time fact checking so they can invest more time in investigative work. In film and gaming, synthetic media generates textures, 3D assets, and character animation, allowing independent studios to approach the production values of major studios. Looking ahead, research is steering toward systems that factor emotional intelligence into content generation, adjusting tension, humor, and story beats based on biometric feedback such as heart rate or facial expressions. Generative design is also extending into products, buildings, and molecular structures. The overarching trajectory frames artificial intelligence not as a replacement for human creativity but as a partner that amplifies imagination. The ongoing challenge is to guide this power toward strengthening truth, aesthetics, and human connection.