From family to rivals: the artificial intelligence chip showdown between Lisa Su and Jensen Huang

Under Lisa Su, AMD is challenging NVIDIA’s dominance in artificial intelligence chips after a record third quarter and major customer deals with OpenAI and Oracle. The article traces Su’s turnaround of AMD, her engineering-led strategy and how MI300 chiplets and software efforts position the company as an alternative to NVIDIA.

He’s been right about artificial intelligence for 40 years. Now he thinks everyone is wrong

Yann LeCun, a pioneer of core neural network methods and Meta’s long-time chief AI scientist, is increasingly at odds with the industry’s focus on large language models and may leave to build a startup around world models. His move could redraw research priorities and influence energy and sustainability trade-offs in computing.

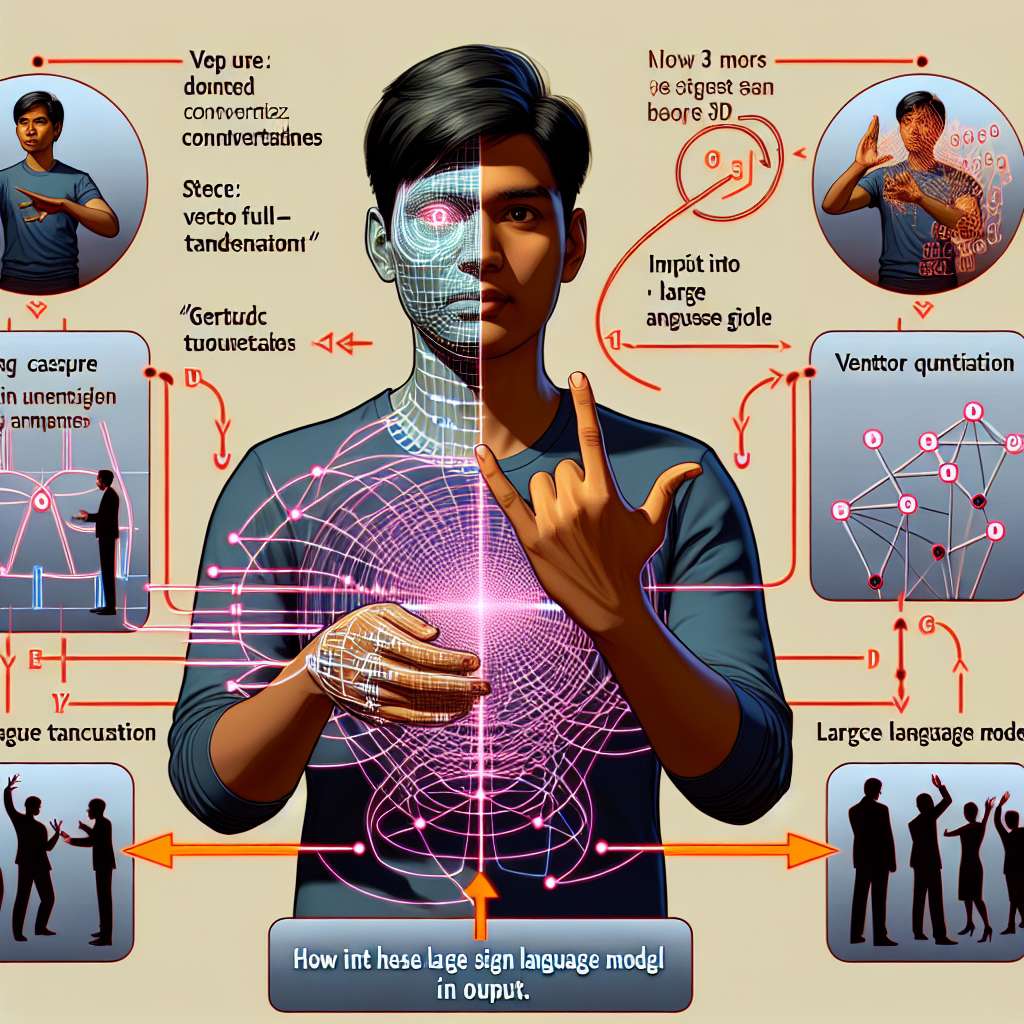

Large sign language models translate 3D American Sign Language

Researchers at Rutgers University and Qualcomm introduce a Large Sign Language Model that translates three-dimensional American Sign Language into English text by combining motion encoding with a large language model.

Artificial intelligence roundup: ChatGPT, companies and controversies

NBC News’ artificial intelligence hub aggregates recent reporting on chatbots, corporate moves and safety debates, covering ChatGPT and Bard, OpenAI and Anthropic, and regulatory proposals.

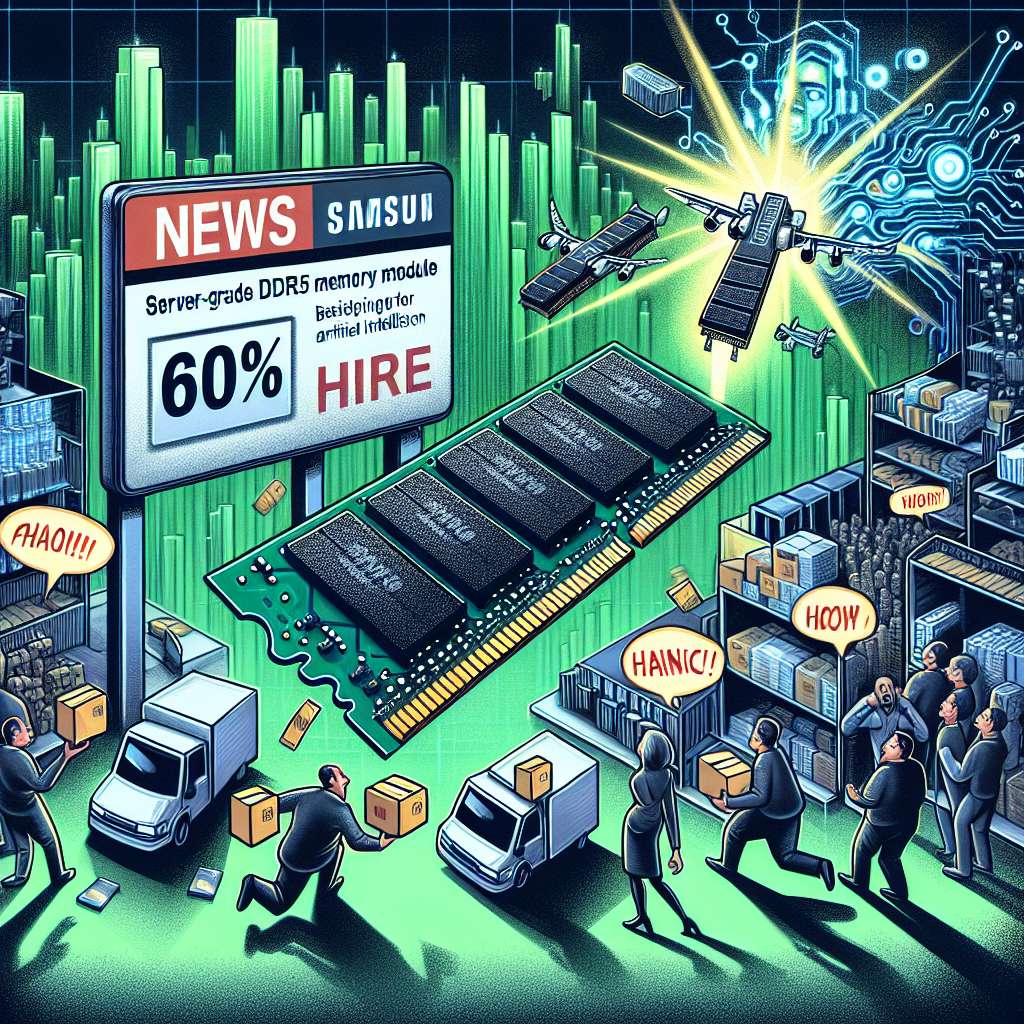

Samsung raises DDR5 prices by up to 60% as artificial intelligence data center demand tightens supply

Samsung has raised prices for several DDR5 memory modules by up to 60% amid tightening supply as companies race to build artificial intelligence data centers. The move follows a delayed contract pricing update and is putting pressure on hyperscalers and original equipment manufacturers (OEMs).

AMD unveils first MLPerf 5.1 artificial intelligence training results on Instinct MI350 Series

AMD submitted its first MLPerf 5.1 training results using its Instinct MI350 Series GPUs, including the MI355X and MI350X, marking the first public artificial intelligence training results for the new accelerator family.

RDMA for S3-compatible storage accelerates artificial intelligence workloads

RDMA for S3-compatible storage uses remote direct memory access to speed up object storage access through the S3 API for artificial intelligence workloads, reducing latency, lowering CPU use and improving throughput. NVIDIA and multiple storage vendors are integrating client and server libraries to enable faster, portable data access across on-premises and cloud environments.