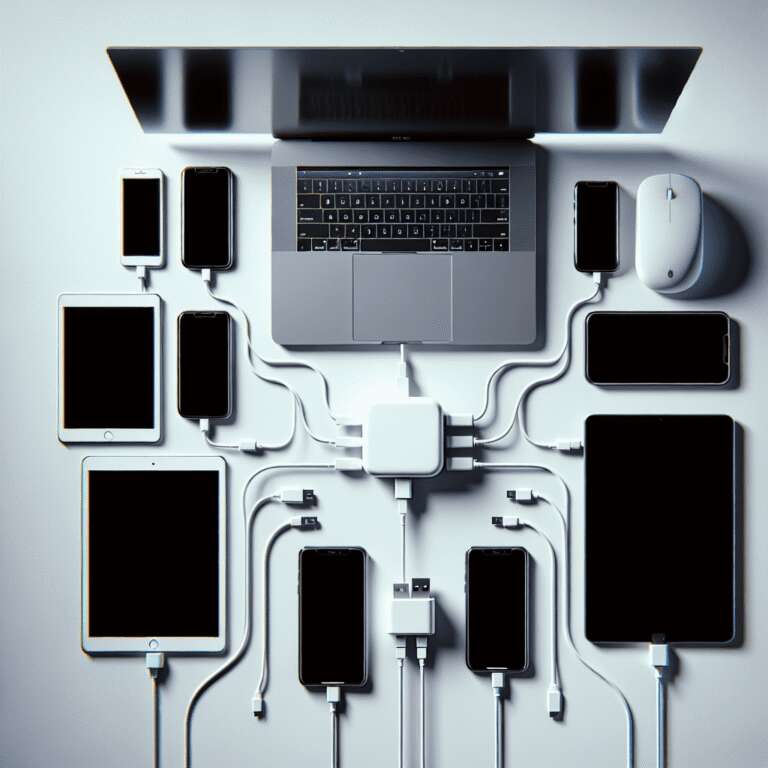

The article demystifies the Model Context Protocol (MCP), a term that has been gaining traction in developer communities and in discussions of modern artificial intelligence (AI) systems. MCP is described as a standardized protocol that lets large language models (LLMs) communicate with a wide range of external tools and services. Rather than requiring custom logic or a dedicated adapter for each application, such as Stripe, GitHub, or Slack, MCP provides a universal "plug", much like a USB-C port, through which a model can interact with any supported tool in the same standardized way.
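To make the "universal plug" idea concrete, here is a minimal conceptual sketch in Python. It is not the MCP SDK and not code from the article. It assumes a hypothetical `ToolServer` interface with `list_tools` and `call_tool` methods, loosely mirroring the way MCP exposes tool listings and tool calls. `GitHubServer`, `SlackServer`, and `Host` are hypothetical stand-ins that make no real API calls. The point is that `Host` routes every call through the same interface and contains no GitHub- or Slack-specific code.

```python
from dataclasses import dataclass, field
from typing import Any, Protocol


@dataclass
class Tool:
    """Description of one callable tool (hypothetical shape, loosely modeled on MCP tool listings)."""
    name: str
    description: str
    input_schema: dict[str, Any]


class ToolServer(Protocol):
    """The single 'port' every integration implements (hypothetical interface, not the MCP SDK)."""

    def list_tools(self) -> list[Tool]: ...

    def call_tool(self, name: str, arguments: dict[str, Any]) -> str: ...


class GitHubServer:
    """Hypothetical stand-in for a GitHub integration; performs no real API calls."""

    def list_tools(self) -> list[Tool]:
        return [Tool("create_repository", "Create a new repository",
                     {"type": "object", "properties": {"name": {"type": "string"}}})]

    def call_tool(self, name: str, arguments: dict[str, Any]) -> str:
        if name != "create_repository":
            raise ValueError(f"unknown tool: {name}")
        return f"created repository {arguments['name']}"


class SlackServer:
    """Hypothetical stand-in for a Slack integration; performs no real API calls."""

    def list_tools(self) -> list[Tool]:
        return [Tool("send_message", "Post a message to a channel",
                     {"type": "object",
                      "properties": {"channel": {"type": "string"}, "text": {"type": "string"}}})]

    def call_tool(self, name: str, arguments: dict[str, Any]) -> str:
        if name != "send_message":
            raise ValueError(f"unknown tool: {name}")
        return f"sent to #{arguments['channel']}: {arguments['text']}"


@dataclass
class Host:
    """Model-side client: routes calls by tool name without any service-specific code."""
    servers: list[ToolServer] = field(default_factory=list)

    def available_tools(self) -> dict[str, ToolServer]:
        # Build a tool-name -> server routing table from every connected server.
        return {tool.name: server for server in self.servers for tool in server.list_tools()}

    def call(self, name: str, arguments: dict[str, Any]) -> str:
        # Every call goes through the same ToolServer interface.
        return self.available_tools()[name].call_tool(name, arguments)


host = Host([GitHubServer(), SlackServer()])
print(host.call("create_repository", {"name": "demo"}))
print(host.call("send_message", {"channel": "general", "text": "hi"}))
```

Adding another integration in this sketch means writing one more class that satisfies `ToolServer`; `Host` itself does not change. That is the property the USB-C comparison points at.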

The author draws on resources such as videos by Jan Marshal and various technical articles to break down MCP's complexities. The USB-C comparison highlights how MCP removes the need for bespoke connectors, which encourages scalability and modularity across the AI landscape. Through MCP, actions such as creating a repository, sending a message, or uploading a file become standardized requests that a model can execute without a tailored integration for each third-party service.
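Under the hood, MCP messages are JSON-RPC 2.0, and a client invokes a tool by sending a `tools/call` request whose `params` carry the tool's `name` and its `arguments`. The sketch below builds such requests for the three actions mentioned above. It shows only the message shape: it omits the transport (such as stdio) and the connection handshake a real client performs first, and the tool names and argument keys (`create_repository`, `send_message`, `upload_file`, and so on) are hypothetical examples, not tools any particular server is guaranteed to expose.

```python
import itertools
import json
from typing import Any

# Monotonic request ids; JSON-RPC 2.0 uses "id" to match each response to its request.
_ids = itertools.count(1)


def tool_call_request(name: str, arguments: dict[str, Any]) -> str:
    """Serialize a JSON-RPC 2.0 request that invokes a tool via MCP's tools/call method."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
    return json.dumps(message)


# The same envelope is used no matter which service sits behind the tool.
# Tool names and argument keys below are hypothetical examples.
requests = [
    tool_call_request("create_repository", {"name": "demo", "private": True}),
    tool_call_request("send_message", {"channel": "general", "text": "Build passed"}),
    tool_call_request("upload_file", {"path": "report.pdf"}),
]
for r in requests:
    print(r)
```

Only `name` and `arguments` change from one action to the next; the envelope stays the same, which is why a model does not need a tailored integration for each service. The server's reply would carry the same `id` as the request it answers.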

By adopting MCP, developers and organizations can more easily extend their language models' capabilities across different environments and tools, reducing development time and technical debt. Beyond simplifying tool integration, the protocol enables greater interoperability and flexibility, making it possible to drop in a new model or swap tools with minimal friction. The article presents this shift as vital for AI applications that need extensibility and robust tool orchestration at scale.