MIT Technology Review’s latest edition of The Download spotlights an investigation into caste bias in OpenAI’s products, which are widely used in India. The publication reports that caste bias is rampant in ChatGPT and Sora, OpenAI’s text-to-video generator. Although CEO Sam Altman touted India as OpenAI’s second-largest market during the launch of GPT-5 in August, the investigation found that both the new model, which now powers ChatGPT, and Sora exhibit caste bias that risks entrenching discriminatory views. This persists even as many Dalit people have advanced into professions such as medicine, civil service, and academia, and some have served as president of India. The report argues that mitigating caste bias in AI models is increasingly urgent. (By Nilesh Christopher.)

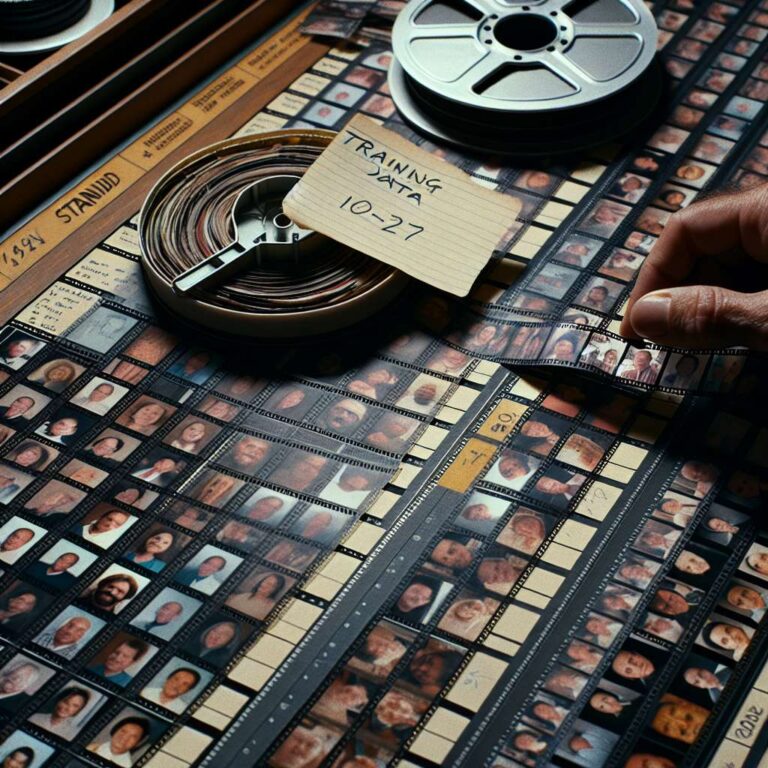

The newsletter also introduces a new MIT Technology Review Narrated podcast episode that explains how AI models generate videos. It notes that this has been a breakout year for video generation, but creators are contending with a flood of low-quality AI content and fabricated news footage. The piece highlights how much more energy video generation demands than text or image generation and directs readers to Spotify or Apple Podcasts for a deeper technical walkthrough of how these systems work.

Today’s must-reads cover a broad sweep of tech news. Taiwan rejected a US request to shift 50% of its chip production to America, saying it never agreed to that commitment. A labor market study found little evidence that chatbots are eliminating jobs. OpenAI released a new Sora video app in a fresh attempt to make AI social, though copyright holders will have to request removal of their content. Scientists created embryos from human skin cells for the first time, potentially expanding options for people experiencing infertility and for same-sex couples. Elon Musk claims he is building a Wikipedia rival. The US chips resurgence effort has been thrown into chaos after funding was pulled from a major initiative. Immigration and Customs Enforcement is seeking to buy a tool that tracks phone locations without a warrant. Electric vehicle manufacturing faces headwinds, including questions about scaling solid-state batteries. DoorDash’s delivery robot is heading to Arizona’s roads. And one writer recounts what it is like to get therapy from ChatGPT.

Quote of the day: “Please treat adults like adults,” an X user says in response to OpenAI’s moves to restrict the topics ChatGPT will discuss, according to Ars Technica.

One more thing examines how Africa is confronting rising hunger by revisiting indigenous crops. While global hunger has risen, particularly in sub-Saharan Africa due to conflict, pandemic aftershocks, and extreme weather, traditional crops often provide more nutrition and are better adapted to heat and drought. The challenge is that many have been neglected by science, making them vulnerable to pests and disease and limiting yields. The piece asks whether researchers, governments, and farmers can collaborate to boost these crops so they can deliver reliable nutrition despite climate change. The newsletter closes with lighter picks, from enduring mysteries around Stonehenge to a new Björk VR experience, an explanation for will-o’-the-wisp, and a guide to building a Commodore 64 cartridge.