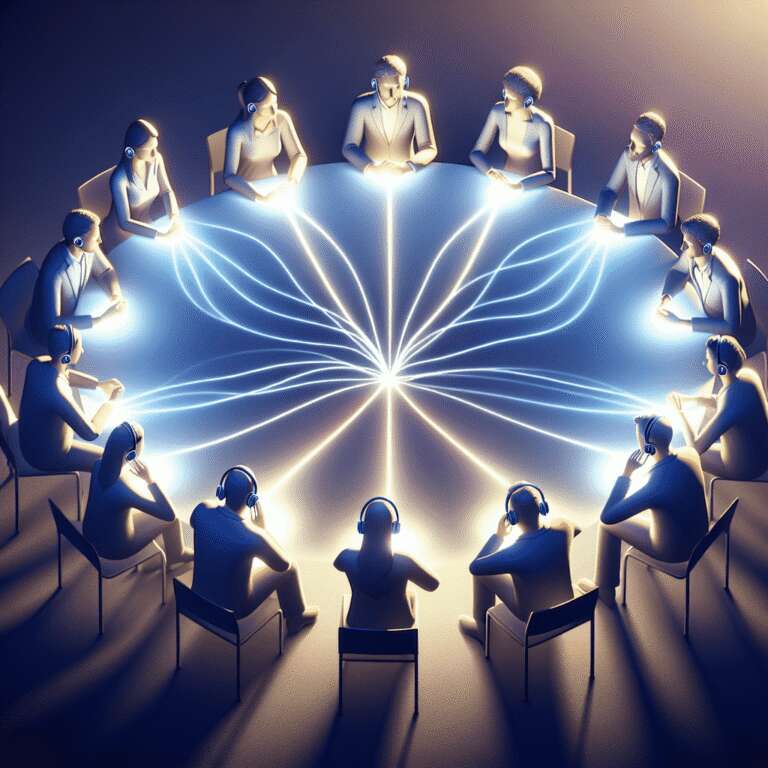

A new headphone system called Spatial Speech Translation translates several speakers at once in real time, making group conversations across languages more accessible. Developed by researchers at the University of Washington, the system uses neural networks to track both the direction and the vocal characteristics of each speaker, so the listener understands not only what is being said but also who is saying it, as the conversation happens.

The technology works in two stages. The first AI model divides the space around the headphone wearer into small regions, searches them for potential speakers, and pinpoints each speaker’s direction. The second model translates speech from French, German, or Spanish into English and clones each speaker’s vocal characteristics, including pitch and emotional tone, so the translation is heard in the original speaker’s style and from their direction rather than in a generic robotic voice. This sets the system apart from existing translation tools, such as the one in Meta’s Ray-Ban smart glasses, which handles only a single speaker and offers no personalized voice synthesis.
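The idea of scanning the space in small regions and keeping the ones that contain a talker can be illustrated with a short sketch. The researchers' first model is a neural network; the code below swaps in a classical technique instead, delay-and-sum (steered-response-power) scanning over two microphones, purely to show the "divide into regions, score each, keep the strong ones" logic. The microphone spacing, sample rate, region width, threshold, and every function name are hypothetical assumptions, not details of the actual system.

```python
import math
import random

# All constants are hypothetical values for illustration only.
SPEED_OF_SOUND = 343.0  # metres per second in room-temperature air
MIC_SPACING = 0.18      # metres between the two earcup microphones (assumed)
SAMPLE_RATE = 16_000    # samples per second (assumed)


def lag_for_azimuth(theta_deg):
    """Integer number of samples by which the left mic lags the right mic for a
    distant source at theta_deg (0 = straight ahead, positive = to the right)."""
    tau = MIC_SPACING * math.sin(math.radians(theta_deg)) / SPEED_OF_SOUND
    return round(tau * SAMPLE_RATE)


def delayed(signal, lag):
    """Shift a signal so that out[n] = signal[n - lag], padding with zeros."""
    n = len(signal)
    return [signal[i - lag] if 0 <= i - lag < n else 0.0 for i in range(n)]


def steered_power(left, right, lag):
    """Cross-correlate the channels at one candidate lag; a talker whose sound
    arrives with this lag contributes roughly its full energy to the sum."""
    n = len(right)
    return sum(right[i] * left[i + lag] for i in range(n) if 0 <= i + lag < n)


def scan_regions(left, right, width_deg=10, threshold=0.5):
    """Split the frontal half-plane into equal angular regions, score each
    region's centre direction, and keep regions scoring at least `threshold`
    times the best one, strongest first."""
    centres = [start + width_deg / 2 for start in range(-90, 90, width_deg)]
    scores = [(c, steered_power(left, right, lag_for_azimuth(c))) for c in centres]
    best = max(score for _, score in scores)
    hits = [(c, score) for c, score in scores if score >= threshold * best]
    return sorted(hits, key=lambda hit: hit[1], reverse=True)


# Usage: two simulated talkers (white noise), at about +28 and -45 degrees.
rng = random.Random(0)
n = 4000
talker_right = [rng.uniform(-1, 1) for _ in range(n)]
talker_left = [rng.uniform(-1, 1) for _ in range(n)]
left = [a + b for a, b in zip(delayed(talker_right, lag_for_azimuth(28)),
                              delayed(talker_left, lag_for_azimuth(-45)))]
right = [a + b for a, b in zip(talker_right, talker_left)]
for centre, score in scan_regions(left, right):
    print(f"talker near {centre:+.0f} degrees (score {score:.0f})")
```

With these settings the scan flags the regions centred at +25 and -45 degrees, the two regions that contain the simulated talkers. Two microphones cannot tell front from back, which is why this sketch only scans the frontal half-plane; resolution also degrades toward the sides, where several regions map to the same sample delay.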

The system currently runs on off-the-shelf noise-canceling headphones connected to a laptop with Apple’s M2 chip, the same chip used in the Apple Vision Pro. In testing so far, the technology has performed impressively, though the researchers acknowledge that it needs more diverse training data and further real-world testing. One ongoing challenge is cutting the delay between the original speech and its translation, currently a few seconds, to under one second so conversations can flow naturally. Language structure affects this delay: German, for example, is slower to translate because its verbs often come at the end of a clause, so the system must wait longer before it can render the meaning. Balancing translation speed against accuracy remains a key hurdle as the technology matures toward seamless multilingual group conversations.
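Why verb placement adds delay can be made concrete with a toy calculation. A common way to quantify this in simultaneous machine translation research is Average Lagging (AL), which measures how many source words, on average, a translator falls behind an ideal translator that keeps pace with the speaker. The sketch below computes AL in words for one short sentence per language. The sentences, their word alignments, and the simple "speak each English word as soon as the source word it needs has been heard" policy are illustrative assumptions; this is not the researchers' system or their latency measure.

```python
from fractions import Fraction


def read_schedule(alignment):
    """alignment[i] is the 1-based position of the source word that target
    word i+1 depends on (a hypothetical hand-made alignment). A target word can
    be spoken only once that source word has been heard, and target order is
    fixed, so the number of source words read before each target word is a
    running maximum."""
    schedule, heard = [], 0
    for src in alignment:
        heard = max(heard, src)
        schedule.append(heard)
    return schedule


def average_lagging(schedule, src_len):
    """Average Lagging: mean of g(i) - (i - 1) / r, with r = |target| / |source|,
    over target words up to the first one emitted after the whole source."""
    r = Fraction(len(schedule), src_len)
    tau = next((i for i, g in enumerate(schedule, start=1) if g == src_len),
               len(schedule))
    return sum(schedule[i - 1] - (i - 1) / r for i in range(1, tau + 1)) / tau


# "Ich habe das Buch gelesen" -> "I have read the book": "read" needs the
# clause-final participle "gelesen" (source word 5).
german = read_schedule([1, 2, 5, 3, 4])
# "Yo he leído el libro" -> "I have read the book": word order matches.
spanish = read_schedule([1, 2, 3, 4, 5])
print(german, average_lagging(german, 5))    # [1, 2, 5, 5, 5] 5/3
print(spanish, average_lagging(spanish, 5))  # [1, 2, 3, 4, 5] 1
```

In this toy case the German sentence lags by 5/3 words on average versus 1 for Spanish, because "read", "the", and "book" cannot be spoken until the clause-final "gelesen" arrives. Real systems measure latency in seconds and must also weigh it against accuracy, which this calculation ignores.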