Simon Willison’s weblog provides a detailed, ongoing chronicle of recent advances in vision large language models (vision-LLMs), which combine textual reasoning with the ability to interpret and generate information from images and video. Willison highlights the proliferation of powerful multimodal models such as OpenAI’s GPT-4o, Anthropic’s Claude 3, Google’s Gemini Pro series, Meta’s Llama 4, Mistral Small 3.1, DeepSeek’s Janus-Pro, Qwen2.5-VL, and Mistral’s Pixtral Large, among others. He discusses these models in terms of both technical progress (context window capacity, vision reasoning, and API access) and practical use cases, such as geolocation, detailed image description, document conversion, and even real-time video understanding in applications such as ChatGPT’s new voice mode.

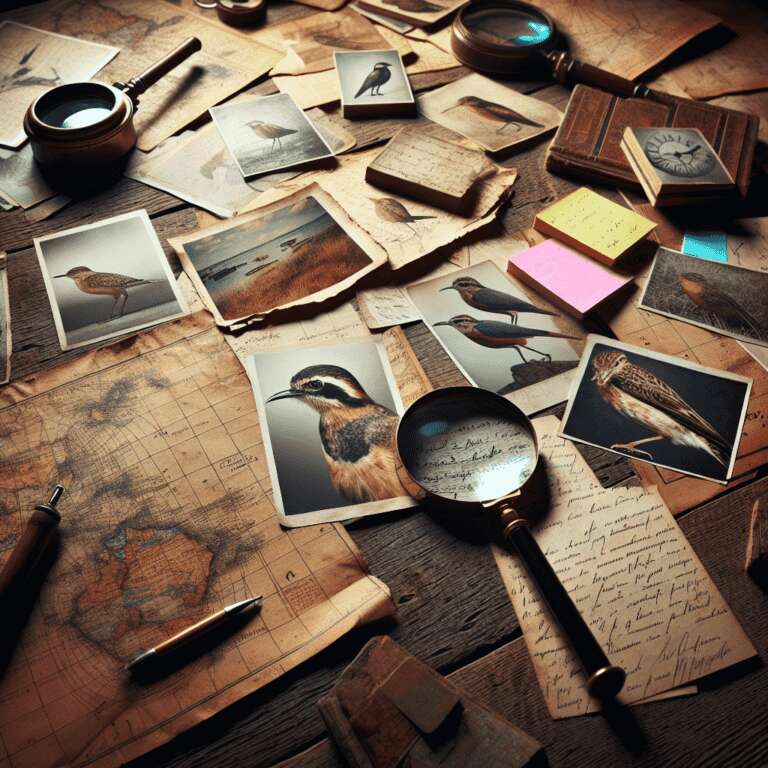

Willison regularly tests these models by running prompts against his own benchmark images, including coastal maps, historical manuscripts, and bird photography, to compare result fidelity, semantic depth, and model limitations. He explores how newer models like Pixtral Large and Qwen2.5-VL have matched and sometimes surpassed GPT-4o on certain image recognition and reasoning tasks, while also emphasizing tradeoffs in size, required hardware, and licensing openness. He covers open-source options like SmolVLM and community-contributed tools for running quantized models on consumer hardware (e.g., MLX, Ollama) alongside closed, API-only commercial offerings.
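The side-by-side testing described above can be sketched as a small harness that runs one prompt over a fixed set of images for several models. This is only an illustration, not Willison's actual tooling: the `compare_models` function, the stub models, and the image file names are all hypothetical, and a real run would replace each stub with a call to the model's own API or local runtime.

```python
from typing import Callable, Dict, List

# Assumption: each model is wrapped as a function (prompt, image_path) -> text.
ModelFn = Callable[[str, str], str]


def compare_models(
    models: Dict[str, ModelFn], prompt: str, image_paths: List[str]
) -> Dict[str, Dict[str, str]]:
    """Run the same prompt on every image for every model.

    Returns {image_path: {model_name: answer}} so answers for one image
    can be read side by side.
    """
    results: Dict[str, Dict[str, str]] = {}
    for image_path in image_paths:
        results[image_path] = {
            name: run(prompt, image_path) for name, run in models.items()
        }
    return results


# Hypothetical stand-ins for real vision models.
def stub_terse(prompt: str, image_path: str) -> str:
    return f"[terse] {image_path}"


def stub_verbose(prompt: str, image_path: str) -> str:
    return f"[verbose] {prompt} -> {image_path}"


if __name__ == "__main__":
    report = compare_models(
        {"terse": stub_terse, "verbose": stub_verbose},
        "Describe this image",
        ["coastal-map.png", "manuscript.png"],  # hypothetical file names
    )
    for image_path, answers in report.items():
        print(image_path)
        for name, answer in answers.items():
            print(f"  {name}: {answer}")
```

Grouping the results by image rather than by model keeps each comparison in one place, which suits the kind of qualitative, per-image judgment the paragraph describes.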

The blog also covers specialized vision-LLM applications, including OCR (with tools such as Mistral OCR and olmOCR), PDF parsing, and integration with platforms like LLM and Datasette, along with structured output schemas for computer vision-driven workflows. Willison spotlights real-world uses in fields such as data journalism and digital humanities, where vision-LLMs are automating historically expert-driven tasks like transcribing archival materials or analyzing geographical contexts. The chronicle reflects a rapidly changing landscape in which AI models are increasingly capable of nuanced visual understanding, though challenges remain around reliability, hallucination, and interpretability. Willison continually probes these areas through experimentation, detailed comparative write-ups, and active engagement with model developers and the broader user community.
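To make the idea of structured output concrete, here is a minimal sketch of checking a model's JSON reply for a transcription task before trusting it downstream. The schema (a `lines` array of objects with `text` and `confidence`) and the names `TranscribedLine` and `parse_transcription` are assumptions made up for this example; they do not come from any particular model or tool.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class TranscribedLine:
    text: str
    confidence: float  # assumed to be a score in [0, 1]


def parse_transcription(raw_json: str) -> List[TranscribedLine]:
    """Parse and validate a reply shaped like
    {"lines": [{"text": "...", "confidence": 0.93}, ...]}.

    Raises ValueError if the reply does not match the assumed schema.
    """
    data = json.loads(raw_json)  # malformed JSON raises a ValueError subclass
    if not isinstance(data, dict) or not isinstance(data.get("lines"), list):
        raise ValueError("expected an object with a 'lines' array")

    parsed: List[TranscribedLine] = []
    for index, item in enumerate(data["lines"]):
        if not isinstance(item, dict):
            raise ValueError(f"line {index}: expected an object")
        text = item.get("text")
        confidence = item.get("confidence")
        if not isinstance(text, str):
            raise ValueError(f"line {index}: 'text' must be a string")
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(confidence, bool) or not isinstance(confidence, (int, float)):
            raise ValueError(f"line {index}: 'confidence' must be a number")
        if not 0.0 <= confidence <= 1.0:
            raise ValueError(f"line {index}: 'confidence' must be in [0, 1]")
        parsed.append(TranscribedLine(text=text, confidence=float(confidence)))
    return parsed


if __name__ == "__main__":
    reply = '{"lines": [{"text": "Dear Sir,", "confidence": 0.93}]}'
    for line in parse_transcription(reply):
        print(line.text, line.confidence)
```

Rejecting malformed replies up front matters here because hallucinated or partially formed output is one of the reliability problems the posts keep returning to.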