In the intensifying contest to dominate the AI hardware ecosystem, most attention gravitates toward industry titans like NVIDIA and Intel. Yet a quieter combination is taking shape: Qualcomm’s acquisition of Alphawave, which may reshape data center architectures and challenge prevailing approaches to server and infrastructure design.

Qualcomm is best known for its Snapdragon line of mobile processors, but its ambitions extend far beyond consumer devices. Developments such as the Snapdragon AI platform with specialized Hexagon NPUs and the custom Oryon CPU (originating from Nuvia) signal a push into cloud-native computing and server-grade solutions. Years of engineering for low power and efficiency now give Qualcomm a competitive edge as power-hungry AI models come to dominate enterprise data centers. With strong partnerships already established across global device makers, telecoms, and automotive, Qualcomm is well placed to scale its presence into the data center, provided it can crack the interconnect bottleneck.

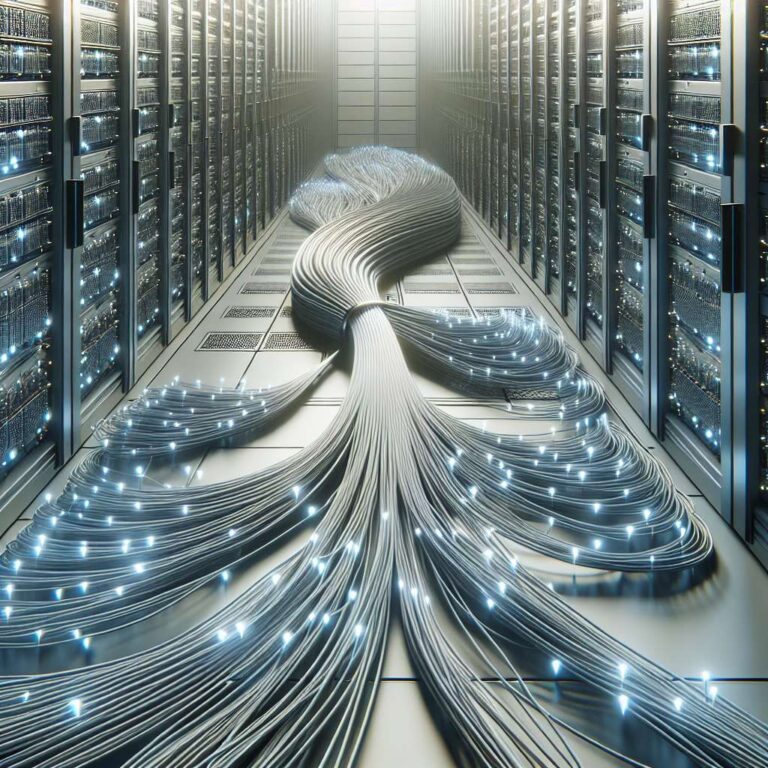

This is where Alphawave Semi enters the picture. Although less well known to the public, Alphawave builds essential infrastructure: it provides advanced SerDes (serializer/deserializer) and silicon photonics IP that enables high-speed, low-latency communication between increasingly modular chiplet architectures. As computing shifts from monolithic dies to interconnected chiplets, Alphawave’s interconnect technologies let chips communicate efficiently, easing latency and data congestion. Its business model, rooted in flexible IP licensing and custom silicon offerings, caters directly to hyperscalers that want tailored silicon accelerators.

The resulting combination forms a potent system-level solution: Qualcomm brings the computational horsepower and efficient logic (‘the brain and muscles’), while Alphawave supplies the critical ‘nervous system’ that ensures rapid, reliable, and scalable data movement. Together, they address current pain points ranging from edge-to-cloud AI deployments and latency-sensitive applications to server designs built natively for the emerging chiplet era. Their fabless operating model provides agility and scalability, setting them apart from integrated device manufacturers encumbered by fabrication assets.

Consider the competition. NVIDIA controls a vertically integrated AI stack but remains energy-intensive, and Intel and AMD face inertia from legacy architectures. Qualcomm and Alphawave remain under the mainstream radar, yet their combined technology breadth positions them as a formidable next-generation contender across the industry’s most vital infrastructure layers.

The significance of this combination lies not just in the underlying silicon but in system-level thinking, in step with how AI now pervades devices, networks, and clouds. As the AI infrastructure race evolves, the fusion of Qualcomm and Alphawave may emerge as a crucial, if understated, force shaping the future of scalable compute.