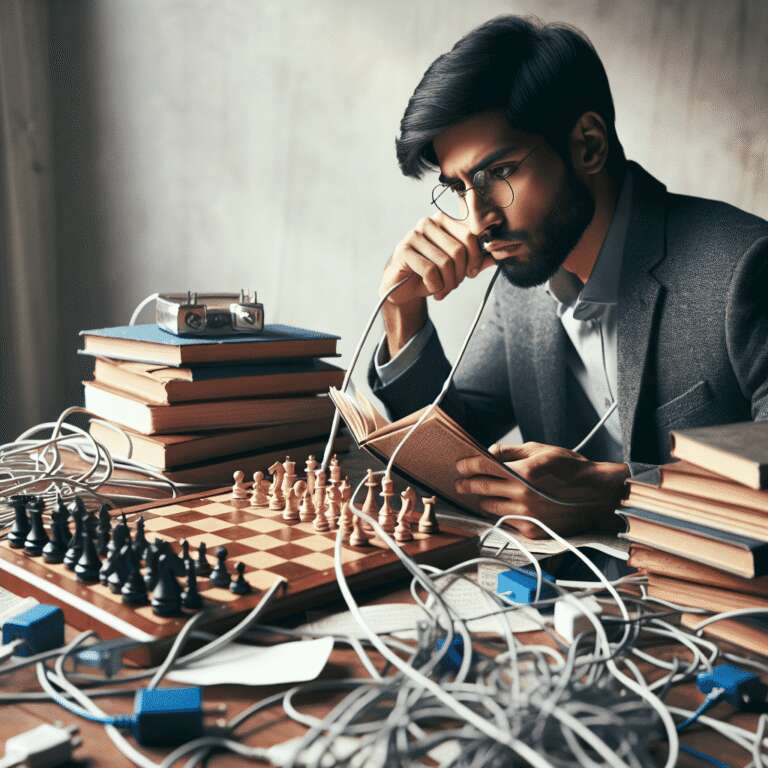

When presented with a classic mate-in-two chess puzzle attributed to Paul Morphy, OpenAI's o3 language model spent over 15 minutes analyzing the position, attempting code-based solutions, and eventually trying to search the internet for help. It ultimately failed to solve the puzzle directly. Community members recounted how the model meticulously tried to identify board features from a screenshot, wrote Python scripts to simulate moves, and attempted to install chess libraries, only to hit technical obstacles. In the end, the model considered searching online as a last resort, a strategy closer to information retrieval than to genuine problem-solving.

The experiment triggered heated debate among tech enthusiasts. Some expressed disappointment at the model's inability to brute-force or reason through a puzzle that many intermediate chess players could solve quickly, noting that conventional chess engines have long handled such tasks with ease. Others highlighted the model's general capabilities: o3 was not designed as a chess engine but as a general-purpose system that can interpret images, understand natural language prompts, attempt programmatic solutions, and interface with web resources. Several pointed out that this holistic approach, while less direct than a traditional chess engine, showcases a qualitative shift in how artificial intelligence systems simulate human-like processes, including learning and research.
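To make the "brute-force" point concrete, here is a minimal sketch of an exhaustive mate-in-two search. It is not the code o3 wrote, and it is not tied to any chess library: the function name `find_mate_in_two` and the three callbacks (`legal_moves`, `apply_move`, `is_checkmate`) are assumptions introduced for illustration. A tiny stone-taking game stands in for chess so the example runs on its own.

```python
from typing import Callable, Iterable, List, TypeVar

State = TypeVar("State")
Move = TypeVar("Move")


def _has_mating_move(state, legal_moves, apply_move, is_checkmate) -> bool:
    """True if the side to move can deliver mate with a single move."""
    return any(is_checkmate(apply_move(state, m)) for m in legal_moves(state))


def find_mate_in_two(
    state: State,
    legal_moves: Callable[[State], Iterable[Move]],
    apply_move: Callable[[State, Move], State],
    is_checkmate: Callable[[State], bool],
) -> List[Move]:
    """Return every first move after which mate can be forced on move two."""
    keys = []
    for first in legal_moves(state):
        after_first = apply_move(state, first)
        if is_checkmate(after_first):
            continue  # mate in one; skipped because the puzzle asks for two moves
        replies = list(legal_moves(after_first))
        if not replies:
            continue  # defender has no move but is not mated (stalemate)
        # The first move works only if every reply allows an immediate mate.
        if all(
            _has_mating_move(apply_move(after_first, reply),
                             legal_moves, apply_move, is_checkmate)
            for reply in replies
        ):
            keys.append(first)
    return keys


# Hypothetical toy game standing in for chess: players alternately take
# 1 or 2 stones, and a side is "mated" when it must move with none left.
def toy_moves(stones: int) -> List[int]:
    return [k for k in (1, 2) if k <= stones]


def toy_apply(stones: int, taken: int) -> int:
    return stones - taken


def toy_mated(stones: int) -> bool:
    return stones == 0


print(find_mate_in_two(4, toy_moves, toy_apply, toy_mated))  # [1]
```

The search checks every first move against every reply, which is why a mate-in-two is cheap for a program: the tree is only three plies deep. With a real chess library the same function could be reused by supplying callbacks backed by that library's move generator and checkmate test. For python-chess, for instance, that would presumably mean copying a `chess.Board`, calling `push`, and checking `is_checkmate()`.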

The incident also underscored broader concerns about the nature of large language models. Commenters questioned whether improved results on puzzles reflect better reasoning or better retrieval from training data, given that o3 was trained on the public internet and may have encountered many standard chess problems before. Others argued that judging artificial intelligence by its performance on logic-based, deterministic puzzles misses the mark, since the frontier in language models centers on handling ambiguity, imprecise queries, and generative tasks that mimic human communication rather than raw computation. Nonetheless, the experiment rekindled the perennial debate over the current limits of artificial intelligence and the distinction between mimicking reasoning and achieving authentic understanding.