At Computex 2025, NVIDIA CEO Jensen Huang delivered a keynote focused on the company's advances in artificial intelligence (AI) hardware, headlined by the official introduction of the Grace Blackwell inferencing system. NVIDIA says the new platform delivers compute throughput per node that matches the performance of the 2018 Sierra supercomputer, a sign of significant progress for high-performance AI workloads.

The Grace Blackwell system uses NVIDIA's next-generation NVLink Spine interconnect to connect 72 GB300 nodes within a single rack. According to NVIDIA, a single NVLink Spine can move more traffic than the entire global Internet, illustrating the bandwidth the technology achieves. The NVL72 rack that houses the system requires 128 kVA of power, which underlines both its energy demands and its raw computational capability.

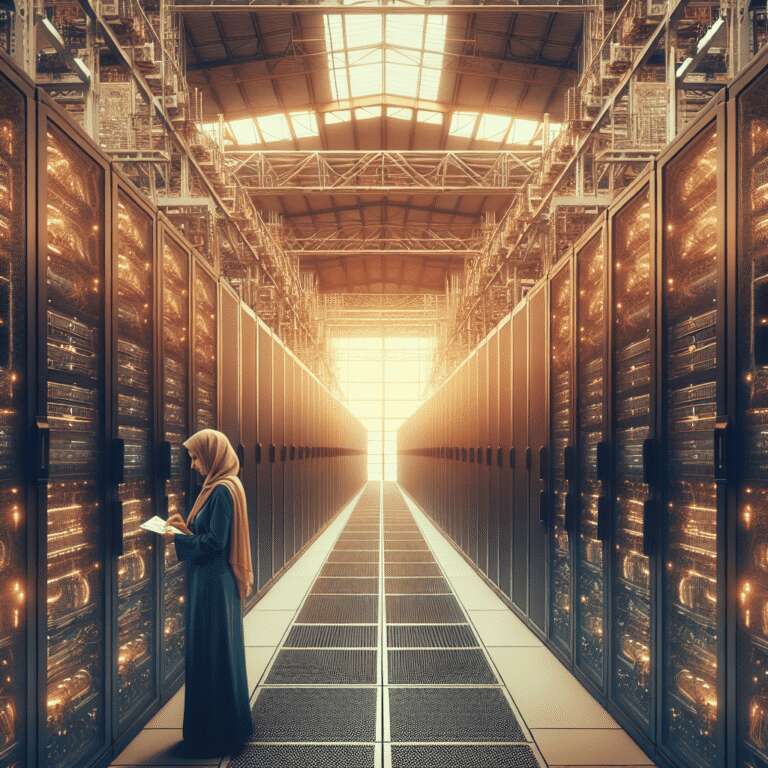

During the keynote, NVIDIA explained its shift in terminology from "datacenter" to "AI factory": each installation is designed to draw hundreds of megawatts of power, a scale that rivals hundreds of conventional datacenters. The rebranding reflects NVIDIA's vision of AI infrastructure at an unprecedented scale. The keynote also covered the advanced manufacturing process behind the Blackwell GPU, emphasizing engineering and design innovations aimed at supporting sustained, large-scale AI operations.