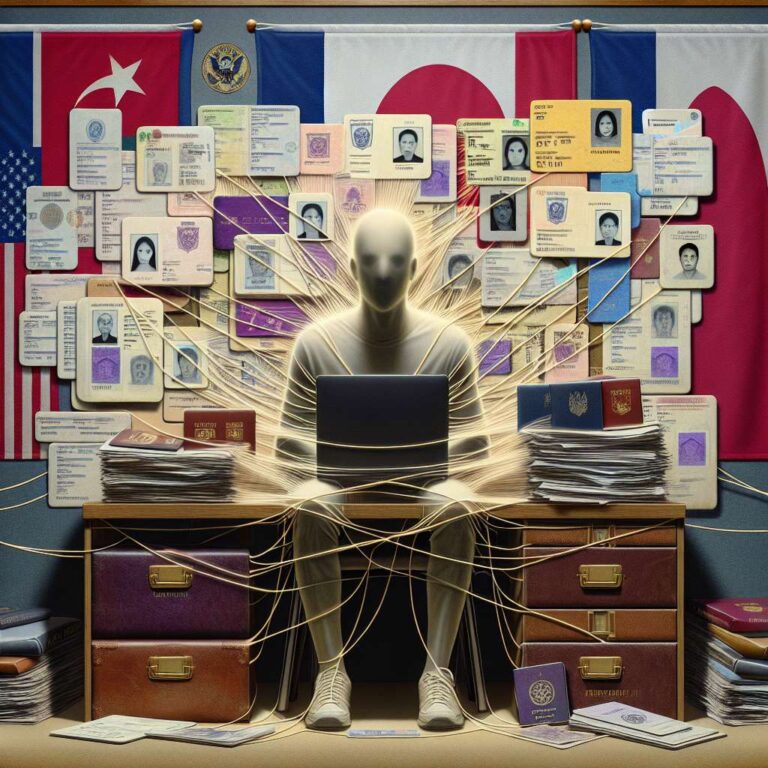

Security researchers say North Korean and Chinese actors have begun using artificial intelligence (AI) chatbots to streamline and scale traditional espionage techniques. South Korean cybersecurity firm Genians reported that a North Korean group known as Kimsuky used ChatGPT to draft a fake South Korean military ID card, which was attached to phishing emails impersonating a South Korean defense institution. Kimsuky has been linked to espionage campaigns across South Korea, Japan, and the US, and the US Department of Homeland Security has described the group as likely operating on behalf of the North Korean regime.

Reports from Anthropic, OpenAI, and Google show that the misuse extends beyond any single tool or actor. Anthropic said North Korean hackers used its Claude model to obtain fraudulent remote jobs at US Fortune 500 technology companies: the model generated convincing résumés and portfolios, helped them pass coding tests, and completed technical assignments. Anthropic also documented a China-based actor that treated Claude as a full-stack cyberattack assistant, using it for code development, security analysis, and operational planning against Vietnamese telecommunications and government targets. OpenAI reported that Chinese-linked actors used ChatGPT to generate password brute-forcing scripts, research US defense and satellite systems, create fake profile images, and produce social media posts for influence operations.

Google has similarly documented misuse of its Gemini model. It said Chinese groups used Gemini to troubleshoot code and gain deeper access to compromised networks, while North Korean actors used it to draft cover letters and scout IT job postings. Google added that Gemini's safeguards blocked more advanced abuses.

Industry experts quoted in the reporting said AI lowers the technical barrier for personalized phishing, deepfakes, and scam campaigns. JFrog's head of machine learning operations warned that such attacks are becoming widespread and that malicious code can be hidden inside open-source models. Netcraft's VP of strategy said generative tools let even unsophisticated attackers clone brands and craft flawless scam messages. OpenAI, Anthropic, and Google have published their findings to help strengthen defenses; none of the three responded to Business Insider's request for comment on this story.