The newsletter opens by revealing that the US Department of Homeland Security is using video generators from Google and Adobe to create and edit content shared with the public, according to a new document that details which commercial artificial intelligence tools the department uses. The inventory lists applications that span tasks from drafting documents to managing cybersecurity, and it emerges at a time when immigration agencies have flooded social media with content backing President Trump’s mass deportation agenda, some of which appears to be made with artificial intelligence. The disclosure comes amid mounting pressure from technology workers on their employers to distance themselves from these agencies and publicly challenge their activities.

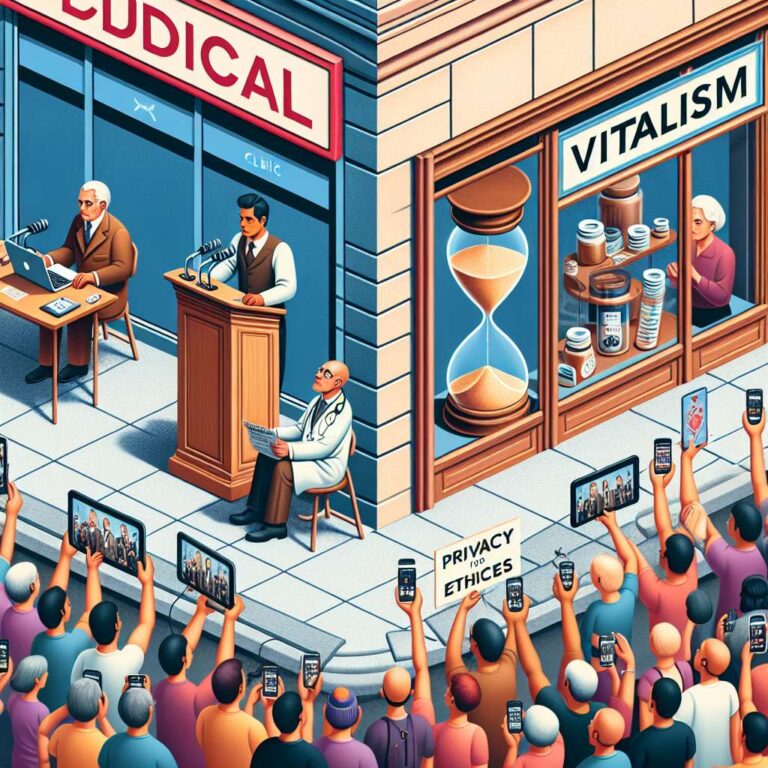

The focus then shifts to a group of hardcore longevity enthusiasts who see death as humanity’s “core problem” and argue that it is wrong, even morally wrong, for everyone. They have created what they describe as a new philosophy called Vitalism, which they position as more than an abstract set of ideas. Vitalism operates as a movement that aims to accelerate progress on treatments to slow or reverse aging, not only through scientific research but also by courting influential allies, changing laws, and reshaping policies to widen access to experimental drugs. The piece notes that these efforts are starting to gain traction and that the movement is increasingly visible among people seeking radical lifespan extension.

The newsletter also highlights the artificial intelligence hype index, a recurring feature designed to separate industry reality from exaggerated claims by offering a quick snapshot of notable developments, including episodes where one system generates pornographic material and another excels at coding tasks that resemble real jobs. A curated list of must-read technology stories covers a French company ending immigrant tracking work for US immigration enforcement, an artificial intelligence toy firm exposing children’s chats, military use of generative artificial intelligence, and the shutdown of an OpenAI model that just 0.1% of users turn to each day. The edition closes with a report on therapists secretly feeding client conversations into ChatGPT during sessions, a practice that is eroding trust and raising serious privacy concerns as more people discover that messages and analyses they believed were personal may have been generated or shaped by artificial intelligence systems.