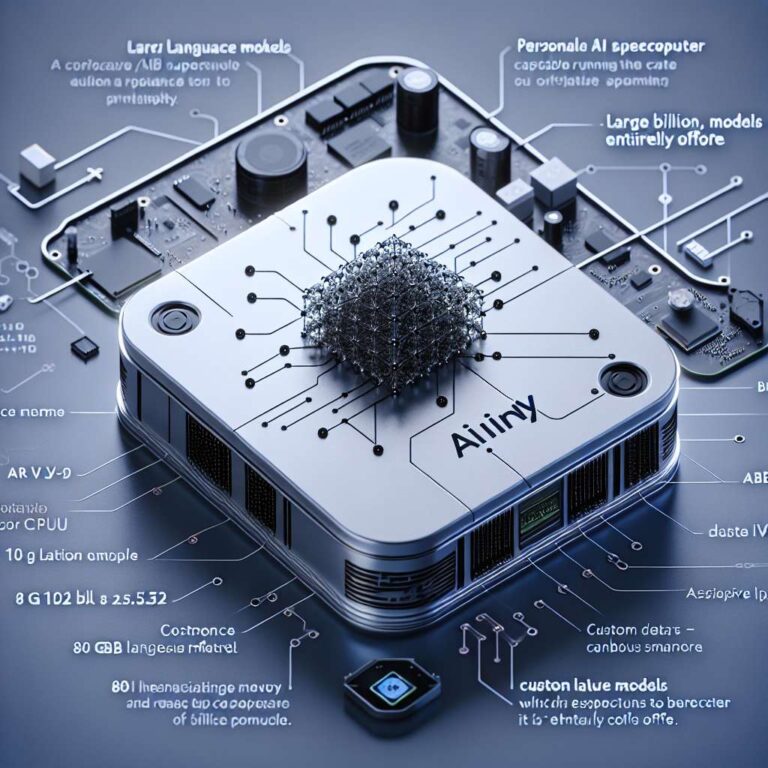

Tiiny AI has publicly unveiled the Tiiny AI Pocket Lab, billed as the world's smallest personal AI supercomputer and officially verified by Guinness World Records under the category “The Smallest MiniPC (100B LLM Locally).” The company says the pocket-sized machine can run a full large language model (LLM) of up to 120 billion parameters entirely on-device, without cloud connectivity, servers, or high-end GPUs, marking a shift toward fully offline, personal AI.

The device is built around a 12-core ARMv9.2 CPU and a custom heterogeneous module (SoC + dNPU) that Tiiny AI says delivers ~190 TOPS. Key specifications include 80 GB of LPDDR5X memory and a 1 TB SSD, capacity to run LLMs of up to 120B parameters fully on-device, and a 30 W TDP with 65 W typical system power. The Pocket Lab measures 14.2 × 8 × 2.53 cm, weighs approximately 300 g, and is designed to operate within a 65 W power envelope, which the company says enables large-model performance with a lower energy and carbon footprint than traditional GPU-based systems.
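To put the 80 GB memory figure in context, the short Python sketch below estimates how much space the weights of a 120B-parameter model occupy at different precisions. The article does not say which quantization the Pocket Lab uses, so the bit widths here are illustrative assumptions, and the estimate covers weights only (no KV cache, activations, or runtime overhead).

```python
def weight_footprint_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


MEMORY_GB = 80   # LPDDR5X capacity quoted in the article
PARAMS_B = 120   # largest model size the company claims to support

# Bit widths are assumptions for illustration, not published device settings.
for bits in (16, 8, 4):
    size = weight_footprint_gb(PARAMS_B, bits)
    verdict = "fits" if size < MEMORY_GB else "does not fit"
    print(f"{bits}-bit weights: {size:.0f} GB -> {verdict} in {MEMORY_GB} GB")
```

Under these assumptions, only the 4-bit case (about 60 GB) leaves headroom within 80 GB, which suggests that running a 120B model on the device relies on aggressive quantization or a comparable compression scheme.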

Tiiny AI positions the product in what it calls the “golden zone” of personal AI models, citing models of 10B to 100B parameters as sufficient for over 80% of real-world needs while also supporting scaling to 120B-parameter LLMs for more advanced reasoning. The company highlights two core software breakthroughs: TurboSparse, a neuron-level sparse activation technique to improve inference efficiency, and PowerInfer, an open-source heterogeneous inference engine with more than 8,000 GitHub stars that dynamically distributes computation across CPU and NPU. Tiiny AI also offers a ready-to-use ecosystem with one-click deployment of open-source models including OpenAI GPT-OSS, Llama, Qwen, DeepSeek, Mistral, and Phi, plus popular agent frameworks and OTA updates slated for release at CES in January 2026.

The announcement arrives as the broader LLM market expands: Grand View Research estimated the global LLM market at USD 7.4 billion in 2025 and projected it would reach USD 35.4 billion by 2030, a CAGR of 36.9% from 2025 to 2030. Tiiny AI frames the Pocket Lab as an alternative to cloud dependency, emphasizing local data storage with bank-level encryption for persistent, private personal intelligence. The company's stated message is that the primary bottleneck is dependence on the cloud rather than raw compute.
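As a quick sanity check on the market figures quoted above, the sketch below compounds the 2025 estimate forward at the stated growth rate and also derives the growth rate implied by the two endpoints. The function names are illustrative; only the three input numbers come from the article.

```python
def project(start_value: float, annual_rate: float, years: int) -> float:
    """Compound start_value forward at annual_rate for the given number of years."""
    return start_value * (1 + annual_rate) ** years


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a start and end value."""
    return (end_value / start_value) ** (1 / years) - 1


START_2025 = 7.4    # USD billions, as quoted
END_2030 = 35.4     # USD billions, as quoted
STATED_CAGR = 0.369
YEARS = 5           # 2025 -> 2030

print(f"Projected 2030 value at stated CAGR: {project(START_2025, STATED_CAGR, YEARS):.1f}B")
print(f"CAGR implied by the endpoints: {implied_cagr(START_2025, END_2030, YEARS):.1%}")
```

Compounding USD 7.4 billion at 36.9% for five years gives roughly USD 35.6 billion, and the endpoints imply a rate just under 37%, so the quoted figures are consistent with each other to within rounding.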