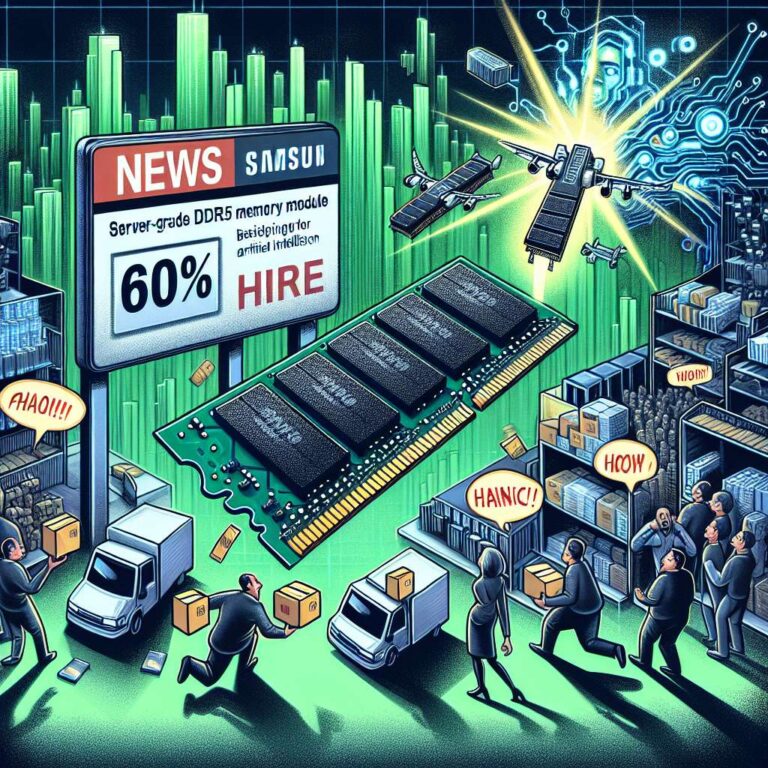

Samsung has raised prices for several server-grade DDR5 memory modules by as much as 60 percent this month, according to people familiar with the changes. The increases follow Samsung’s delay of its usual October contract pricing update and reflect supply that is tightening as companies worldwide race to build artificial intelligence (AI) data centers. Sources reported sharp month-over-month jumps across multiple module capacities: prices for 16-gigabyte and 128-gigabyte modules rose by about 50 percent, while prices for 64-gigabyte and 96-gigabyte modules rose by more than 30 percent.

The pricing surge is straining hyperscalers and original equipment manufacturers (OEMs) that are already short on parts. Fusion Worldwide president Tobey Gonnerman told Reuters that many major server builders accept they will not get enough product and that price premiums have reached extreme levels. The shortage has prompted panic buying among some customers and is spilling into other parts of the semiconductor market. Semiconductor Manufacturing International Corporation (SMIC) said tight DDR5 supply has caused buyers to hold back orders for other chip types, and Xiaomi warned that rising memory costs are driving up smartphone production costs.

The squeeze is strengthening Samsung’s memory division even as the company trails rivals in advanced AI processors. Market tracker TrendForce expects Samsung’s contract prices to rise 40 to 50 percent in the fourth quarter, above the roughly 30 percent increase it forecasts for the broader market, supported by strong demand and long-term supply deals that extend into 2026 and 2027. Sources said the imbalance in server DRAM supply is giving Samsung more pricing leverage relative to SK Hynix and Micron, reshaping competition in the memory market as data center buildouts accelerate.