Red Hat is launching a revamped platform, Red Hat AI 3, to help organizations move artificial intelligence (AI) workloads from proof of concept to production, with a focus on inference. The company frames the release as an answer to stalled enterprise efforts, citing research from the Massachusetts Institute of Technology that found roughly 95 percent of organizations see no measurable financial return on enterprise AI applications despite about €34.4 billion in spending. The suite includes Red Hat AI Inference Server, RHEL AI, and Red Hat OpenShift AI, and builds on the vLLM and llm-d community projects to deliver a consistent, enterprise-grade experience.

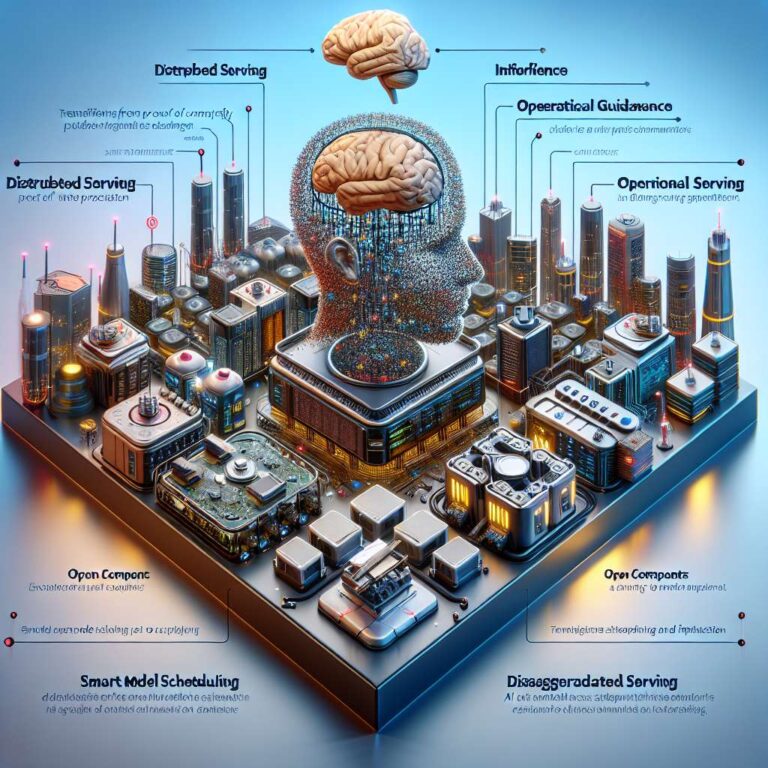

At the core is inference at scale. Red Hat OpenShift AI 3.0 introduces llm-d to run large language models natively on Kubernetes, combining intelligent distributed inference with Kubernetes orchestration. To maximize hardware acceleration, the platform leverages open source components such as the Kubernetes Gateway API Inference Extension, Nvidia Dynamo (NIXL) KV Transfer Library, and the DeepEP Mixture of Experts communication library. Red Hat says this enables cost reduction and faster responses via smart model scheduling and disaggregated serving. Operational guidance comes through prescribed “Well-lit Paths” for rolling out models at scale, and cross-platform support spans hardware accelerators from Nvidia and AMD.

The release also adds collaboration and delivery features for teams building generative applications. A Model-as-a-Service (MaaS) approach, built on distributed inference, lets IT teams act as their own MaaS providers by centrally offering common models. A new AI hub gives platform engineers a curated catalog to discover, deploy, and manage foundational assets, including validated and optimized generative models. For AI engineers, a Gen AI studio provides a hands-on environment for interacting with models, rapidly prototyping applications, and experimenting in a built-in, stateless playground.

Looking ahead, Red Hat positions the platform for the rise of agentic systems, which will put heavy demands on inference. A unified API layer based on Llama Stack aims to align with industry standards, including OpenAI-compatible large language model interface protocols. The company is also embracing the Model Context Protocol to streamline how models interact with external tools. For customization, a modular, extensible toolkit built on InstructLab supplies specialized Python libraries to give developers more flexibility and control. Together, these capabilities are intended to move enterprise AI initiatives out of the experimental phase and into scalable production.
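
To make "OpenAI-compatible" concrete, here is a minimal sketch of what a client request to such an interface generally looks like. It is not taken from Red Hat's documentation: the base URL, the model name, and the helper functions `build_chat_request` and `extract_reply` are hypothetical, and the only assumption about the server is that it exposes the widely used `/v1/chat/completions` route and response shape. The sketch builds the request without sending it, so it runs without a live endpoint.

```python
import json
import urllib.request

# Hypothetical values for illustration only; neither appears in the article.
BASE_URL = "http://localhost:8000/v1"
MODEL_NAME = "example-model"


def build_chat_request(prompt, base_url=BASE_URL, model=MODEL_NAME):
    """Build (but do not send) an OpenAI-style chat completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 64,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_body):
    """Pull the assistant text out of a chat completions JSON response."""
    return json.loads(response_body)["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request("Summarize disaggregated serving in one sentence.")
    print(req.full_url)
    print(req.data.decode("utf-8"))
    # To actually send it (assumes a server is listening at BASE_URL):
    # with urllib.request.urlopen(req) as resp:
    #     print(extract_reply(resp.read()))
```

The practical appeal of a shared protocol is that only the base URL and model name would need to change when an application moves between compatible backends; the request and response handling stay the same.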