OpenAI is reevaluating its reliance on Nvidia GPUs as it looks for faster, cheaper hardware to support upcoming generative artificial intelligence (AI) models. Concerns about performance limitations, escalating GPU prices, and supply shortages are pushing the company to explore a broader hardware strategy that could influence infrastructure choices across the global AI ecosystem. Nvidia has long dominated AI computing through its Hopper and Ampere GPU lines and the CUDA software stack, and it currently controls around 80 percent of the AI accelerator market, according to IDC. Intensifying demand and competitive pressure, however, are exposing the risks of depending on a single vendor.

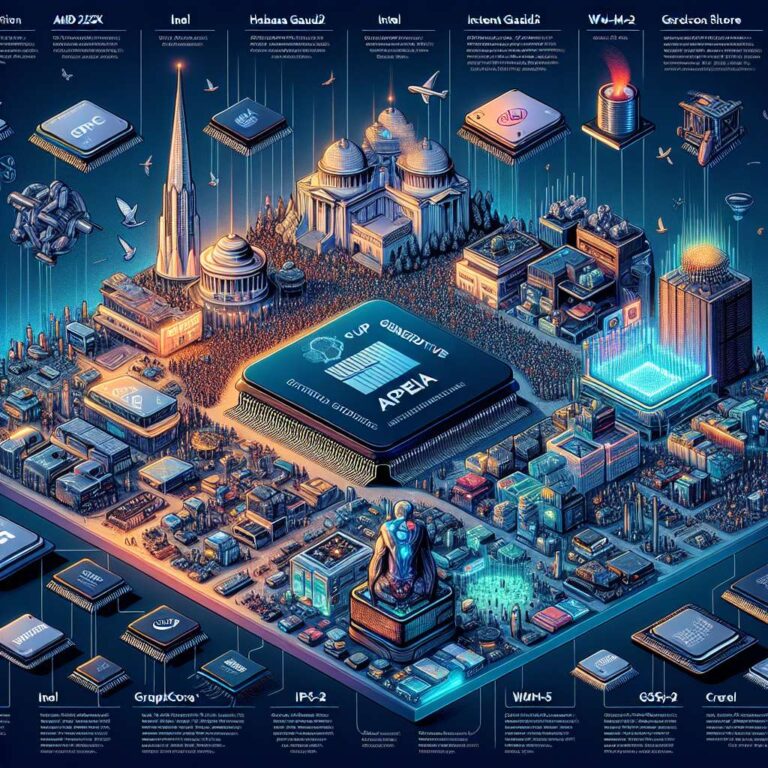

Several factors are driving OpenAI’s search for alternatives: the rising expense of top-end chips like Nvidia’s H100, reported thermal and scalability constraints in some deployments, and ongoing component shortages that delay AI development at OpenAI’s scale. Risk management is another motivator, since heavy reliance on a single supplier creates operational and geopolitical vulnerabilities. The company is weighing a mix of options from rival vendors, such as AMD’s Instinct MI250X and MI300, Intel’s Habana Gaudi2 and upcoming Falcon Shores, Graphcore’s IPU-M2000, and Cerebras’s WSE-2 and CS-2. These vendors pitch benefits ranging from competitive pricing and energy efficiency to AI-centric architectures and high memory bandwidth, but they also face hurdles such as smaller software ecosystems, lower peak throughput at scale, and demanding cooling and energy requirements.

Competition is intensifying as alternative chipmakers build out ecosystems to attract cloud providers and developers, sometimes backed by high-profile investors such as Jeff Bezos, who has invested in Tenstorrent. According to Precedence Research, the AI chip industry was valued at about $15 billion in 2023 and is projected to exceed $85 billion by 2030, a compound annual growth rate of 27.2 percent, driven by enterprise adoption of generative AI and the shift toward edge computing. Nvidia hardware has underpinned every major OpenAI model from GPT-2 to GPT-4 through Microsoft Azure, but OpenAI is increasingly focused on hardware optimization, custom accelerators, and resource-efficient computation in preparation for future systems like GPT-5. Industry analysts such as Dylan Patel and Benedict Evans view OpenAI’s exploration of non-Nvidia hardware as a strategically complex yet inevitable move toward flexibility. They expect it to set a precedent for other AI developers to diversify their chip supply as workloads become more varied and distributed.