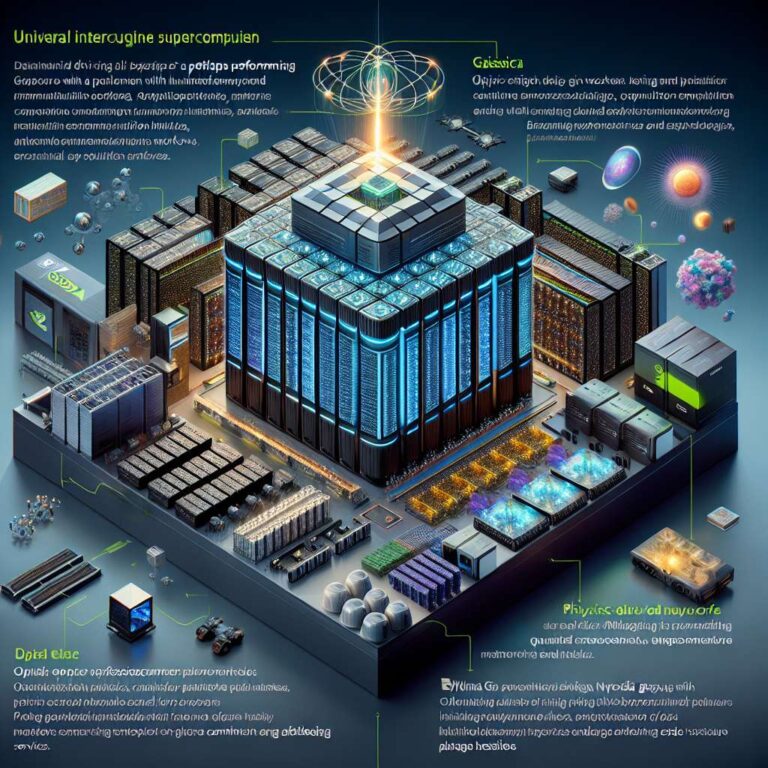

At SC25, NVIDIA presented an array of hardware and software innovations designed to drive the next phase of artificial intelligence supercomputing. Highlights included the BlueField-4 data processing unit, which extends the BlueField platform for offloading networking, storage and security; the compact DGX Spark system, built on Grace Blackwell, which delivers up to a petaflop of AI performance with 128GB of unified memory; and NVIDIA Quantum-X Photonics InfiniBand switches, which reduce power consumption and improve resiliency in large deployments. Jensen Huang and Ian Buck framed the announcements around the demands of trillion-token models and the infrastructure required to support them.

The company also introduced software and model workstreams tailored to physics and simulation. NVIDIA Apollo is an open model family for artificial intelligence physics, providing pretrained checkpoints and reference workflows for fields such as computational fluid dynamics, electromagnetics and materials science. NVIDIA Warp, an open-source Python framework, targets GPU-accelerated physics and simulation workflows with performance comparable to native CUDA, and integrates with PyTorch, JAX, NVIDIA PhysicsNeMo and Omniverse. Industry partners, including Siemens and Luminary Cloud, were cited as early adopters of these tools.

Networking and storage advances were prominent. BlueField-4 combines a 64-core Grace CPU with ConnectX-9 networking and NVIDIA DOCA microservices to enable multi-tenant, zero-trust, software-defined data centers. Storage vendors DDN, VAST Data and WEKA are adopting BlueField-4 to run storage services directly on the DPU. NVIDIA Quantum-X Photonics switches and the Quantum-X800 platform promise higher throughput, 3.5x better power efficiency and improved resiliency by integrating optics directly into the switch. TACC, Lambda and CoreWeave plan to be among the first to integrate them.

Bridging classical and quantum computing, NVQLink was shown as a universal interconnect that links NVIDIA GPUs to quantum processors and enables CUDA-Q workflows. An integration with Quantinuum's Helios QPU achieved real-time decoding of qLDPC error-correcting codes, reaching about 99% fidelity (versus 95% without correction) with a reaction time of 60 microseconds. NVIDIA also described partnerships and regional deployments, including new GPU-accelerated supercomputers at RIKEN in Japan and Arm's adoption of NVLink Fusion, which extends coherent, high-bandwidth interconnects to Arm Neoverse servers. Finally, NVIDIA outlined the Domain Power Service and Omniverse DSX integrations for orchestrating energy use across large artificial intelligence facilities.