More than 50% of researchers have used artificial intelligence (AI) while peer reviewing manuscripts, according to a survey of some 1,600 academics across 111 countries conducted by the publisher Frontiers. Nearly one-quarter of respondents said that they had increased their use of AI for peer review over the past year. The publisher, based in Lausanne, Switzerland, says the results confirm suspicions that tools powered by large language models, such as ChatGPT, have become embedded in review workflows, even though many policies caution against uploading confidential manuscripts to third-party platforms.

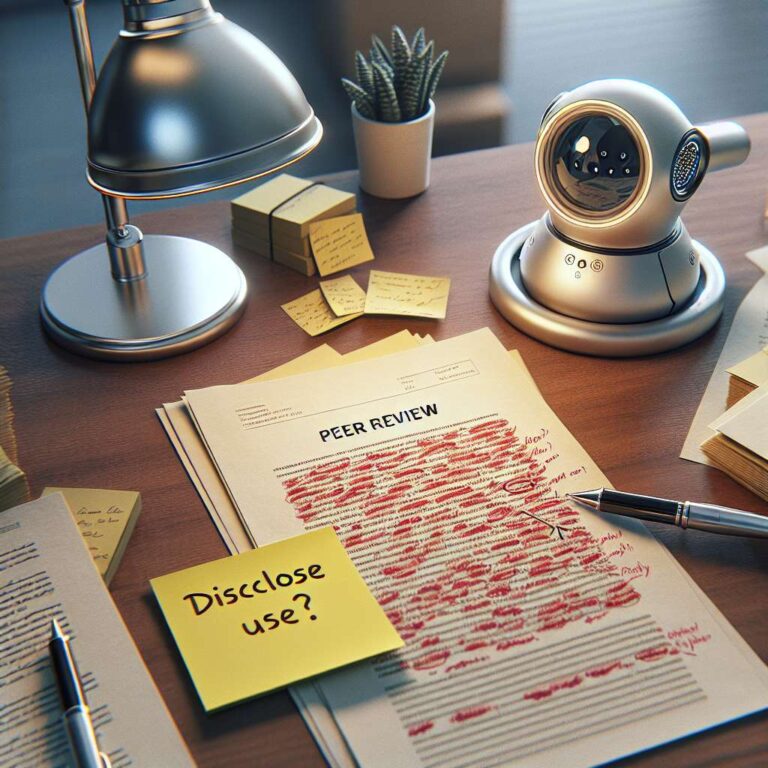

Frontiers’ director of research integrity, Elena Vicario, says the poll shows that reviewers are using AI in peer-review tasks “in contrast with a lot of external recommendations of not uploading manuscripts to third-party tools”. Some publishers, including Frontiers, allow limited use of AI in peer review but require reviewers to disclose it. They typically forbid uploading unpublished manuscripts to chatbot websites, to protect confidentiality and intellectual property.

The survey report urges publishers to adapt their policies to this emerging “new reality”. Frontiers has launched an in-house AI platform that reviewers can use across its journals, and Vicario emphasizes the need for clear guidance, human accountability and training. A spokesperson for Wiley says the company agrees that publishers should communicate best practices and disclosure requirements, and notes that a similar survey by Wiley found that “researchers have relatively low interest and confidence in artificial intelligence use cases for peer review”.

Among respondents who use AI in peer review, Frontiers’ survey found that 59% use it to help write their peer-review reports. Twenty-nine per cent said they use it to summarize the manuscript, identify gaps or check references, and 28% use it to flag potential signs of misconduct, such as plagiarism and image duplication. Research-ethics scholar Mohammad Hosseini describes the survey as a valuable attempt to gauge both the acceptance and the prevalence of AI in different review contexts.

Separate experiments are probing how well large language models actually perform as reviewers. Engineering scientist Mim Rahimi tested the large language model GPT-5 on a *Nature Communications* paper he co-authored, using four prompting set-ups that ranged from simple review instructions to prompts that added literature to help assess novelty and rigour. He found that GPT-5 could mimic the structure and polished language of a review, but it failed to provide constructive feedback and made factual errors; the more complex prompts produced the weakest reviews. Another study reported that AI-generated reviews of 20 manuscripts broadly matched human assessments but did not deliver detailed critique. Rahimi concludes that these tools “could provide some information, but if somebody was just relying on that information, it would be very harmful”.