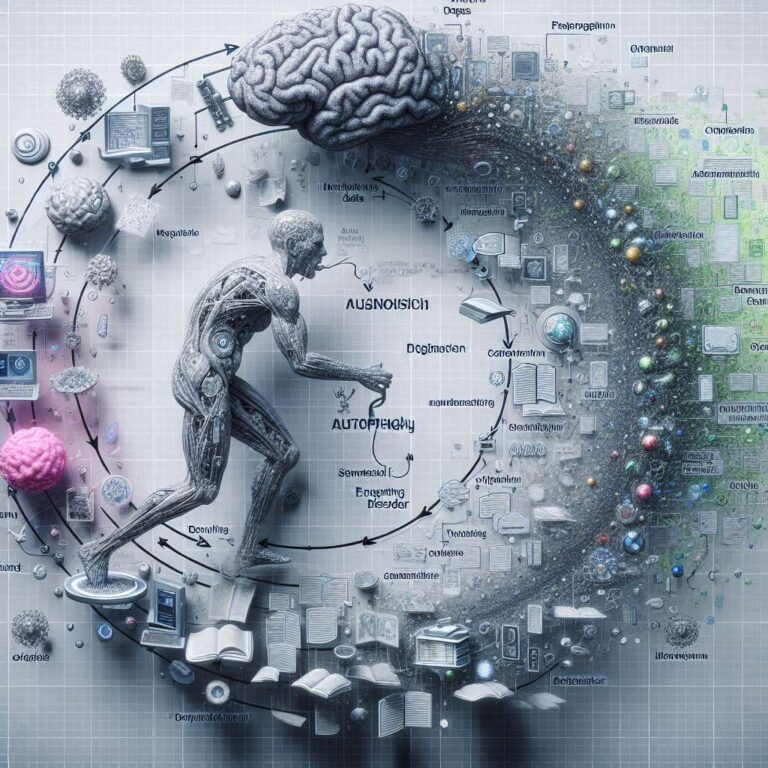

Glow New Media’s Phil Blything introduces the term Model Autophagy Disorder (M.A.D.) as a growing concern within the Artificial Intelligence community, arguing that it could become a serious problem for the large language models that dominate today’s tools. He explains that the phrase, which sounds like a biomedical diagnosis, actually describes what happens when language models consume, and learn from, their own machine-generated content. In his view, this self-consumption threatens the reliability and usefulness of future Artificial Intelligence systems.

Blything outlines how large language models are trained on huge corpora of text, typically drawn from the public web. As Artificial Intelligence systems generate more and more of that web content, the generated text ends up in the training data for subsequent models. He points to experimental work, referenced as [1.] and [2.], showing that when successive models are trained on content produced by previous models, the resulting systems deteriorate rapidly: each new model is worse than the last, and within only a few iterations the output collapses into gibberish.
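The feedback loop can be illustrated with a deliberately tiny toy. This is an illustrative assumption, not the setup used in the experiments cited as [1.] and [2.]: the "model" is just a table of word frequencies (a unigram model), and each generation is trained only on text sampled from the previous generation. A word that happens not to be sampled gets probability zero and can never come back, so the vocabulary can only shrink, a crude analogue of rare content dropping out first. The corpus, the function names (`train`, `generate`, `self_consume`) and the parameters are all hypothetical.

```python
import random
from collections import Counter


def train(corpus):
    """'Train' a toy unigram model: each token's probability is its relative frequency."""
    counts = Counter(corpus)
    total = sum(counts.values())
    return {tok: c / total for tok, c in counts.items()}


def generate(model, n, rng):
    """Sample n tokens from the model; this synthetic text becomes the next training corpus."""
    tokens = list(model)
    weights = [model[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=n)


def self_consume(corpus, generations, rng):
    """Train each generation only on the previous generation's output.

    Returns the number of distinct tokens (vocabulary size) at every generation,
    starting with the original corpus.
    """
    sizes = [len(set(corpus))]
    for _ in range(generations):
        model = train(corpus)
        corpus = generate(model, len(corpus), rng)
        sizes.append(len(set(corpus)))
    return sizes


def make_human_corpus():
    """Hypothetical 'human-written' corpus: a few common words plus a long tail of rare ones."""
    return ["the"] * 400 + ["model"] * 200 + ["web"] * 100 + [f"rare{i}" for i in range(300)]


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so runs are repeatable
    print(self_consume(make_human_corpus(), generations=10, rng=rng))
```

The printed vocabulary sizes start at 303 and never increase, because sampling can only draw from words the previous model still knows. Real language models are far more complex, and this toy says nothing about how quickly they would degrade; it only shows why information lost in one generation is not recovered by the next.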

Drawing on his own experience “working the web” since 1997, Blything contrasts the emerging problem of Model Autophagy Disorder with the human-created web of the late 1990s and early 2000s. He recalls how Wikipedia and early web content were built and curated largely by people, with few bots, limited automation and relatively high barriers to publishing that encouraged deliberation and care. Looking ahead, he predicts exponential growth in web content generated by large language models over the next few years and stresses that this synthetic material is already quietly feeding into the training data of tomorrow’s systems. He closes with a stark metaphor: autophagy means self-consumption, and Artificial Intelligence will increasingly eat itself.