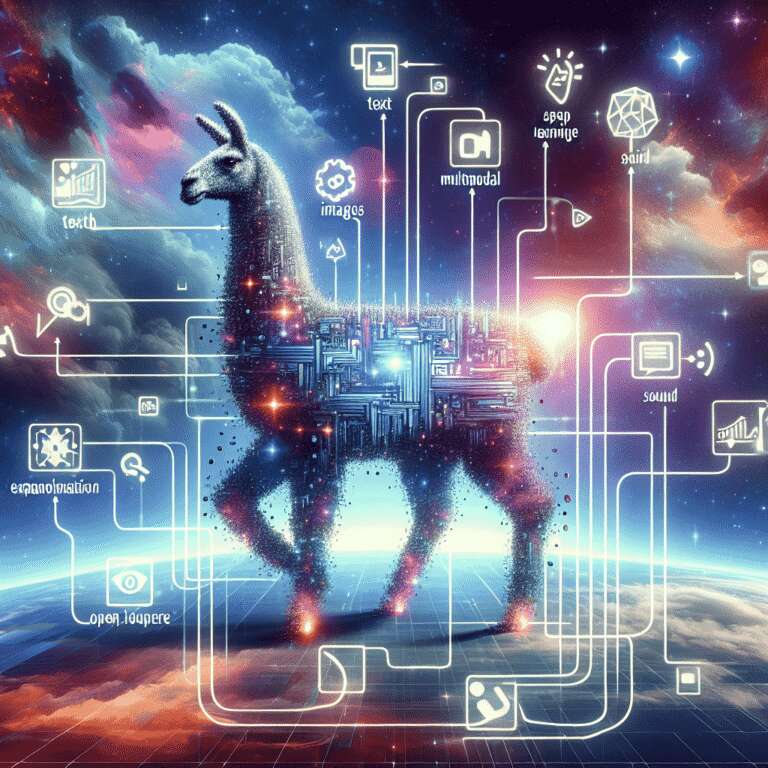

Meta has introduced new multimodal models to its Llama suite of artificial intelligence models, signaling a significant upgrade in its machine learning capabilities. The new models aim to make Llama more flexible and more widely applicable, extending the range of data types that AI systems can process and interpret.

The expansion into multimodal models allows for the simultaneous processing of textual, visual, and auditory inputs, offering a more comprehensive understanding of complex environments and interactions. This development follows a growing trend within the tech industry to create more holistic AI systems that can integrate diverse data sources effectively. Meta's initiative reflects an ongoing commitment to remain at the forefront of AI innovation.

Previously, Meta's open-source Llama models focused primarily on processing text. The new models, however, draw on advances in deep learning and neural networks to enable broader functionality. This positions Meta as a key player in the competitive landscape of artificial intelligence, particularly in open-source platforms, where accessible and collaborative development fosters innovation.