LangChain’s agents framework combines language models with tools and middleware to build systems that can reason about tasks, choose appropriate tools, and iterate until a stop condition is met, such as a final output or an iteration limit. The create_agent function constructs a graph-based runtime using LangGraph, where nodes represent steps like model calls, tool execution, or middleware, and edges define how information flows through the agent. Agents can be configured with either static models, which are defined once at creation, or dynamic models that are selected at runtime based on state and context to enable routing and cost optimization.
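
The loop described above, with model and tool nodes joined by edges and two stop conditions, can be sketched without any library. This is a minimal, hypothetical imitation of the idea, not LangGraph's API: NODES, EDGES, run_graph, fake_model, and the get_weather tool are illustrative names. The "model" is a scripted stand-in that requests one tool call and then answers. In LangChain, create_agent builds the real graph for you.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

END = "__end__"  # sentinel node name meaning "stop"

@dataclass
class Message:
    role: str                         # "user", "assistant", or "tool"
    content: str = ""
    tool_call: Optional[dict] = None  # set when the model requests a tool

@dataclass
class State:
    messages: List[Message] = field(default_factory=list)
    steps: int = 0

def fake_model(state: State) -> State:
    # Hypothetical stand-in for an LLM: request a tool first, then answer from its result.
    tool_results = [m for m in state.messages if m.role == "tool"]
    if not tool_results:
        call = {"name": "get_weather", "args": {"city": "Paris"}}
        state.messages.append(Message("assistant", tool_call=call))
    else:
        state.messages.append(Message("assistant", f"Final answer: {tool_results[-1].content}"))
    return state

# Hypothetical tool registry.
TOOLS: Dict[str, Callable[..., str]] = {"get_weather": lambda city: f"It is sunny in {city}."}

def tool_node(state: State) -> State:
    # Execute the tool requested by the last assistant message.
    call = state.messages[-1].tool_call
    state.messages.append(Message("tool", TOOLS[call["name"]](**call["args"])))
    return state

def route_after_model(state: State) -> str:
    # Conditional edge: run tools if one was requested, otherwise finish.
    return "tools" if state.messages[-1].tool_call else END

NODES = {"model": fake_model, "tools": tool_node}
EDGES = {"model": route_after_model, "tools": lambda state: "model"}

def run_graph(state: State, start: str = "model", max_steps: int = 10) -> State:
    node = start
    # Two stop conditions: reaching END (final answer) or hitting the step limit.
    while node != END and state.steps < max_steps:
        state = NODES[node](state)
        state.steps += 1
        node = EDGES[node](state)
    return state

final = run_graph(State([Message("user", "What's the weather in Paris?")]))
print(final.messages[-1].content)  # Final answer: It is sunny in Paris.

capped = run_graph(State([Message("user", "What's the weather in Paris?")]), max_steps=2)
print(capped.messages[-1].role)    # tool (stopped before the model could answer)
```

Keeping routing in a separate EDGES table mirrors the node/edge split: the nodes only transform state, and the edges alone decide what runs next.
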

The model layer accepts either a simple string identifier or a fully configured model instance; instances allow fine-grained control over parameters such as temperature, max_tokens, timeouts, and provider-specific settings. Dynamic model selection is implemented as middleware using decorators such as @wrap_model_call: the middleware can inspect the current conversation, swap in a different model, and pass the modified request on to the handler. The framework also emphasizes tool integration: agents can call multiple tools in sequence, execute tools in parallel, choose tools dynamically based on earlier results, retry on errors, and persist state across tool calls. Tools are supplied as Python functions or coroutines, and the @tool decorator customizes their names, descriptions, and argument schemas. Custom tool error handling is implemented with @wrap_tool_call, which lets developers intercept failures and return domain-specific ToolMessage responses to the model.
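
The wrap pattern behind dynamic model selection can be illustrated with plain functions: a middleware receives the request plus a handler, may modify the request, and then delegates. This is a library-free sketch, not LangChain's @wrap_model_call; ModelRequest, call_model, compose, the 10-message threshold, and the model names are all hypothetical.

```python
from dataclasses import dataclass, replace
from typing import Callable, List, Tuple

@dataclass(frozen=True)
class ModelRequest:
    # Hypothetical request shape; LangChain's real ModelRequest differs.
    model: str
    messages: Tuple[str, ...]

Handler = Callable[[ModelRequest], str]
Middleware = Callable[[ModelRequest, Handler], str]

def call_model(request: ModelRequest) -> str:
    # Stand-in for the provider call: report which model would have answered.
    return f"{request.model} (messages={len(request.messages)})"

def select_model(request: ModelRequest, handler: Handler) -> str:
    # Inspect the conversation, swap in a different model, then delegate.
    model = "large-model" if len(request.messages) > 10 else "small-model"
    return handler(replace(request, model=model))

def compose(middlewares: List[Middleware], base: Handler) -> Handler:
    # Build an onion of handlers; the first middleware in the list runs outermost.
    handler = base
    for mw in reversed(middlewares):
        def wrapped(request: ModelRequest, mw: Middleware = mw, inner: Handler = handler) -> str:
            return mw(request, inner)
        handler = wrapped
    return handler

agent_call = compose([select_model], call_model)
print(agent_call(ModelRequest("default-model", ("hi",))))              # small-model (messages=1)
print(agent_call(ModelRequest("default-model", tuple(["msg"] * 12))))  # large-model (messages=12)
```

Using a frozen dataclass with replace means each middleware hands a modified copy to the next layer instead of mutating a shared request, which keeps stacked middleware independent of one another.
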

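
Tool error handling follows the same wrap shape: the hook calls the real tool handler inside a try block and converts failures into a message the model can read and act on. In LangChain this is done with @wrap_tool_call and its own ToolMessage class; the sketch below is a runnable, library-free imitation in which ToolCall, ToolMessage, divide, execute_tool, and handle_tool_errors are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class ToolCall:
    # Hypothetical stand-in for a model's tool request.
    id: str
    name: str
    args: dict

@dataclass
class ToolMessage:
    # Hypothetical stand-in for the reply sent back to the model.
    content: str
    tool_call_id: str  # lets the model match the reply to its request

def divide(a: float, b: float) -> float:
    return a / b

TOOLS = {"divide": divide}

def execute_tool(call: ToolCall) -> ToolMessage:
    # Base handler: run the requested tool and wrap its result.
    result = TOOLS[call.name](**call.args)
    return ToolMessage(str(result), call.id)

def handle_tool_errors(call: ToolCall, handler: Callable[[ToolCall], ToolMessage]) -> ToolMessage:
    # Wrap-style hook: turn exceptions into domain-specific messages for the model.
    try:
        return handler(call)
    except ZeroDivisionError:
        return ToolMessage("Tool error: 'b' must be non-zero. Retry with another divisor.", call.id)
    except Exception as exc:
        return ToolMessage(f"Tool error: {exc!r}. Check the arguments and try again.", call.id)

ok = handle_tool_errors(ToolCall("call_1", "divide", {"a": 6, "b": 3}), execute_tool)
bad = handle_tool_errors(ToolCall("call_2", "divide", {"a": 1, "b": 0}), execute_tool)
unknown = handle_tool_errors(ToolCall("call_3", "multiply", {"a": 2, "b": 2}), execute_tool)
print(ok.content)       # 2.0
print(bad.content)      # Tool error: 'b' must be non-zero. Retry with another divisor.
print(unknown.content)  # Tool error: KeyError('multiply'). Check the arguments and try again.
```

Every error reply keeps the original tool_call_id, so the model can tie the failure to the specific call it made and decide whether to retry with different arguments.
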
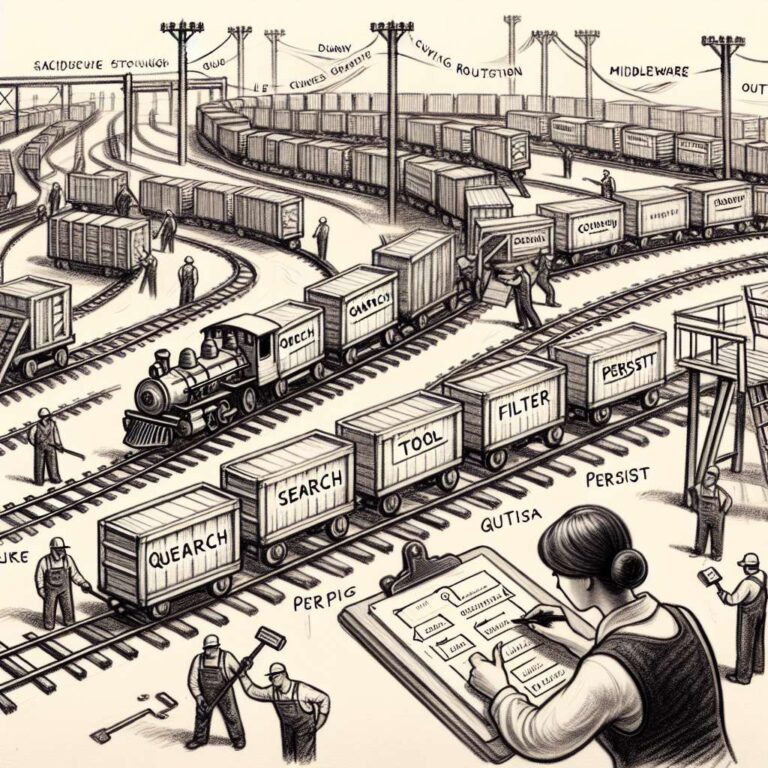

Agent behavior follows the ReAct (reasoning + acting) pattern: the model alternates between short reasoning steps and targeted tool calls until it can produce a final answer. The docs illustrate this with an agent that searches for wireless headphones, filters the results, and checks inventory before responding. They describe dynamic tools in two flavors: filtering pre-registered tools at runtime, for example based on user roles or permissions, and full runtime registration of tools discovered from external sources, which relies on both hooks: wrap_model_call adds the new tools to the model request, and wrap_tool_call executes them. System prompts can be static strings or structured SystemMessage objects that take advantage of provider features such as Anthropic’s prompt caching, and they can also be generated dynamically by middleware, for example with the @dynamic_prompt decorator, to adapt instructions to user roles or other context.
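
The first flavor of dynamic tools, filtering pre-registered tools by role, can be sketched as another wrap-style function that narrows the tool list before the model sees it. Everything here is hypothetical: the user_role field on the request, the ALLOWED permission table, and the issue_refund tool; search_products and check_inventory only echo the headphones example and are illustrative names.

```python
from dataclasses import dataclass, replace
from typing import Callable, Dict, List, Set, Tuple

@dataclass(frozen=True)
class ModelRequest:
    tools: Tuple[str, ...]
    user_role: str  # hypothetical: a real agent would read this from runtime context

# Hypothetical permission table mapping roles to the tools they may use.
ALLOWED: Dict[str, Set[str]] = {
    "admin": {"search_products", "check_inventory", "issue_refund"},
    "viewer": {"search_products", "check_inventory"},
}

def call_model(request: ModelRequest) -> List[str]:
    # Stand-in for the model call: report which tools the model was offered.
    return list(request.tools)

def filter_tools_by_role(request: ModelRequest, handler: Callable[[ModelRequest], List[str]]) -> List[str]:
    # Keep only tools the role may use (unknown roles get none), preserving order.
    allowed = ALLOWED.get(request.user_role, set())
    visible = tuple(t for t in request.tools if t in allowed)
    return handler(replace(request, tools=visible))

ALL_TOOLS = ("search_products", "check_inventory", "issue_refund")
print(filter_tools_by_role(ModelRequest(ALL_TOOLS, "viewer"), call_model))  # ['search_products', 'check_inventory']
print(filter_tools_by_role(ModelRequest(ALL_TOOLS, "guest"), call_model))   # []
```

Filtering only controls what the model is offered; re-checking permissions when a tool actually executes is a sensible extra safeguard.
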

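
A dynamic system prompt amounts to a function from the current request to a prompt string, applied just before the model call. The sketch below imitates that idea without LangChain: as_prompt_middleware is a hypothetical helper (deliberately not named after the real @dynamic_prompt decorator), and the context dictionary, role names, and prompt wording are illustrative assumptions.

```python
from dataclasses import dataclass, field, replace
from typing import Callable, Dict, List

@dataclass
class ModelRequest:
    messages: List[dict]
    context: Dict[str, str] = field(default_factory=dict)  # hypothetical runtime context
    system_prompt: str = ""

BASE_PROMPT = "You are a helpful assistant."

def role_prompt(request: ModelRequest) -> str:
    # Build the system prompt from the caller's role (defaults to "user").
    role = request.context.get("user_role", "user")
    if role == "expert":
        return f"{BASE_PROMPT} Provide detailed technical responses."
    if role == "beginner":
        return f"{BASE_PROMPT} Explain concepts simply and avoid jargon."
    return BASE_PROMPT

def as_prompt_middleware(build_prompt: Callable[[ModelRequest], str]):
    # Hypothetical helper: turn a prompt builder into wrap-style middleware.
    def middleware(request: ModelRequest, handler):
        return handler(replace(request, system_prompt=build_prompt(request)))
    return middleware

def call_model(request: ModelRequest) -> List[dict]:
    # Stand-in for the model call: return the message list the provider would receive.
    return [{"role": "system", "content": request.system_prompt}] + request.messages

prompt_mw = as_prompt_middleware(role_prompt)
question = [{"role": "user", "content": "What is a closure?"}]
sent = prompt_mw(ModelRequest(question, {"user_role": "beginner"}), call_model)
print(sent[0]["content"])  # You are a helpful assistant. Explain concepts simply and avoid jargon.
```

Because the prompt is rebuilt on every call, the same agent can serve different audiences without being recreated.
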
Invocation follows the LangGraph Graph API: agent.invoke passes new messages into the agent’s state, while agent.stream surfaces intermediate steps (tool calls and messages) or individual tokens in real time, which is useful for long-running multi-step executions. For structured output, LangChain provides ToolStrategy, which uses artificial tool calling to make responses conform to a schema such as a Pydantic model, and ProviderStrategy, which uses the provider’s native structured output where the model supports it; when a schema is passed directly as response_format, LangChain picks between the two automatically based on the model’s capabilities. Memory is modeled as agent state: the messages list is always present, and additional fields can be added by extending AgentState, a TypedDict. Custom state can be configured either through middleware or through the state_schema parameter; middleware is preferred because it keeps each state extension scoped to the tools and logic that use it. Finally, a rich middleware system supports pre- and post-model processing, guardrails, dynamic model selection, logging, and analytics, all without altering the core agent logic.
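
The idea behind ToolStrategy, exposing the output schema as an extra "tool" and parsing the model's call to it into a typed object, can be sketched with a dataclass and hand-rolled validation. This is an assumption-laden imitation, not LangChain's implementation: ContactInfo, schema_as_tool, parse_structured_call, and structured_or_feedback are hypothetical, and the model's tool-call arguments are hard-coded JSON strings.

```python
import json
from dataclasses import dataclass, fields
from typing import Any

@dataclass
class ContactInfo:
    name: str
    email: str
    phone: str

def schema_as_tool(schema: type) -> dict:
    # Present the schema as an extra "tool" whose arguments are the schema fields.
    return {"name": schema.__name__, "parameters": {f.name: f.type.__name__ for f in fields(schema)}}

def parse_structured_call(schema: type, raw_args: str) -> Any:
    # Validate the arguments of the model's artificial tool call against the schema.
    args = json.loads(raw_args)
    if not isinstance(args, dict):
        raise ValueError("arguments must be a JSON object")
    expected = {f.name: f.type for f in fields(schema)}
    missing = expected.keys() - args.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    for name, typ in expected.items():
        if not isinstance(args[name], typ):
            raise ValueError(f"field {name!r} should be {typ.__name__}")
    return schema(**{name: args[name] for name in expected})

def structured_or_feedback(schema: type, raw_args: str) -> Any:
    try:
        return parse_structured_call(schema, raw_args)
    except ValueError as err:  # json.JSONDecodeError is also a ValueError
        # In a ToolStrategy-style loop, this text would go back to the model for a retry.
        return f"Error: {err}. Call {schema.__name__} again with valid arguments."

print(schema_as_tool(ContactInfo))
# {'name': 'ContactInfo', 'parameters': {'name': 'str', 'email': 'str', 'phone': 'str'}}
good = structured_or_feedback(ContactInfo, '{"name": "John Doe", "email": "john@example.com", "phone": "(555) 123-4567"}')
print(good)  # ContactInfo(name='John Doe', email='john@example.com', phone='(555) 123-4567')
bad = structured_or_feedback(ContactInfo, '{"name": "Jane"}')
print(bad)   # Error: missing fields: ['email', 'phone']. Call ContactInfo again with valid arguments.
```

Returning validation errors as text rather than raising lets the agent loop feed them back to the model, which is what makes the tool-calling route usable on models without native structured output.
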