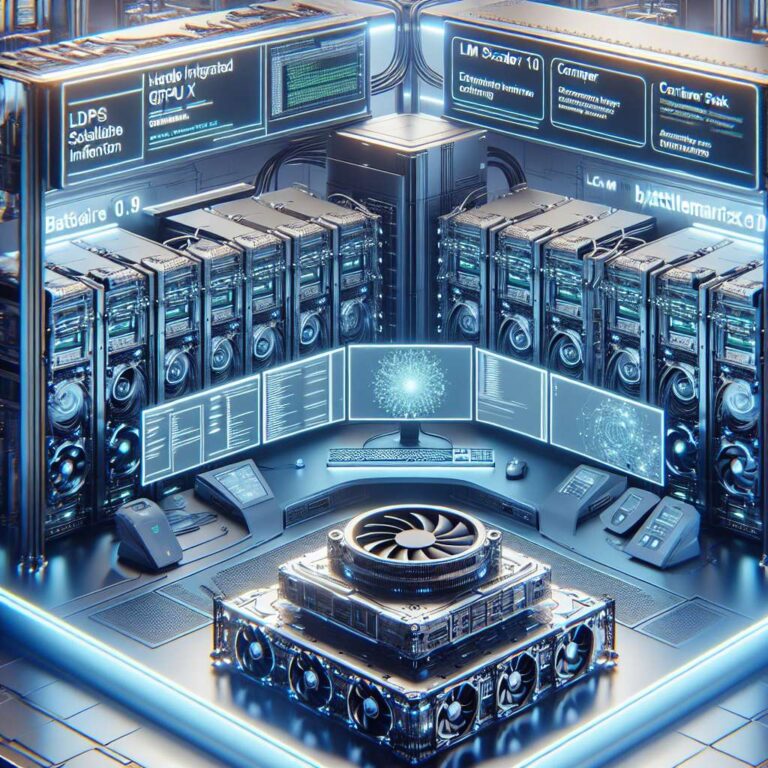

Intel has published an update on Project Battlematrix, the company's initiative to deliver scalable inference workstations built around Arc Pro B-series "Battlemage" GPUs. The effort pairs purpose-built hardware with an inference-optimized software stack that is containerized for Linux and designed to follow industry standards. The stack emphasizes ease of use, multi-GPU scaling for larger models and longer sequences, and enterprise-grade reliability features such as ECC, single-root I/O virtualization (SR-IOV), telemetry monitoring, and remote firmware updates.

The headline of this milestone is LLM Scaler 1.0, a container release intended for early adoption and testing by business users. Built on the vLLM framework, it focuses on inference throughput and memory efficiency. Intel reports up to a 1.8x improvement in output-token performance for input sequences longer than 4K tokens on its 32B KPI models, and up to a 4.2x gain on its 70B KPI models at 40K-token sequence lengths. For smaller models in the 8B to 32B range, the optimizations yield about 10% higher output throughput than the previous release. The release also adds by-layer online quantization to reduce GPU memory use, experimental pipeline parallelism support, torch.compile integration, and speculative decoding to cut latency for some workloads.
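Intel names by-layer online quantization without describing how LLM Scaler implements it. As a rough illustration of the general idea only, the Python sketch below assumes symmetric per-row INT8 quantization with one FP16 scale per row, applied layer by layer as weights stream in, so that only about one layer of full-precision weights needs to be resident at a time. This is not Intel's scheme; the helper names and the `toy_layers` loader are hypothetical.

```python
from typing import Iterable, Iterator, List, Tuple

Matrix = List[List[float]]
QuantLayer = Tuple[List[List[int]], List[float]]  # (INT8 codes, per-row scales)


def quantize_rows_int8(weights: Matrix) -> QuantLayer:
    """Symmetric per-row INT8 quantization: w ~= q * scale, with q in [-127, 127]."""
    codes, scales = [], []
    for row in weights:
        max_abs = max((abs(w) for w in row), default=0.0)
        scale = max_abs / 127.0 if max_abs > 0 else 1.0  # avoid divide-by-zero on all-zero rows
        codes.append([max(-127, min(127, round(w / scale))) for w in row])
        scales.append(scale)
    return codes, scales


def dequantize_rows(layer: QuantLayer) -> Matrix:
    """Reconstruct approximate float weights from codes and scales."""
    codes, scales = layer
    return [[q * s for q in row] for row, s in zip(codes, scales)]


def fp16_bytes(layer: Matrix) -> int:
    """Size of a layer stored as FP16 (2 bytes per weight)."""
    return sum(len(row) for row in layer) * 2


def int8_bytes(layer: QuantLayer) -> int:
    """Assumed storage layout: 1 byte per INT8 code plus one 2-byte FP16 scale per row."""
    codes, scales = layer
    return sum(len(row) for row in codes) + 2 * len(scales)


def quantize_by_layer(layers: Iterable[Matrix]) -> Iterator[Tuple[int, QuantLayer]]:
    """Quantize lazily, one layer at a time.

    If `layers` is itself a generator (e.g. reading a checkpoint from disk),
    full-precision weights are only held for roughly one layer at a time.
    Yields (original FP16 size in bytes, quantized layer).
    """
    for layer in layers:
        yield fp16_bytes(layer), quantize_rows_int8(layer)


def toy_layers(n_layers: int = 3, rows: int = 4, cols: int = 8) -> Iterator[Matrix]:
    """Hypothetical stand-in for a checkpoint loader; yields small synthetic layers."""
    for i in range(n_layers):
        yield [[((r * cols + c) % 7 - 3) * 0.1 * (i + 1) for c in range(cols)]
               for r in range(rows)]


if __name__ == "__main__":
    before = after = 0
    for fp16_size, quant in quantize_by_layer(toy_layers()):
        before += fp16_size
        after += int8_bytes(quant)
    print(f"FP16: {before} bytes, INT8 + scales: {after} bytes")
    # prints "FP16: 192 bytes, INT8 + scales: 120 bytes"
```

On these tiny 4x8 layers the per-row scales are a noticeable overhead; at realistic layer widths with thousands of columns that overhead becomes negligible, so the weight-memory saving approaches 2x relative to FP16. The accuracy cost of rounding each weight to one of 255 levels is the kind of trade-off early adopters would want to measure on their own models.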

Beyond raw performance, Battlematrix is framed as a systems play to make high-performance AI workloads more accessible to developers and enterprises. The containerized approach aims to simplify deployment on Linux machines while preserving multi-GPU scaling and observability. Telemetry and remote update mechanisms target operational stability in production environments, while SR-IOV and ECC address isolation and data integrity for mixed workloads. Intel positions LLM Scaler 1.0 as a practical step toward broader adoption, letting organizations test long-sequence inference and measure the gains on their own data and pipelines.

The announcement landed alongside Computex 2025 coverage and underscores Intel's focus on pairing hardware and software for inference use cases. Early adopters will likely evaluate real-world throughput, the memory trade-offs of quantization, and how pipeline parallelism behaves in practice. For now, Battlematrix and LLM Scaler 1.0 offer a packaged route for bringing "Battlemage" silicon and inference tooling into enterprise workflows, with an emphasis on performance, manageability, and standards compliance.