Infineon Technologies AG has announced a partnership with NVIDIA to develop the next generation of power systems for artificial intelligence (AI) data centers. The collaboration centers on a new architecture with central power generation at 800 V high-voltage direct current (HVDC), a significant change from conventional data center power distribution. The approach is intended to improve the energy efficiency of power distribution across the entire data center and to enable power conversion directly at the chip, including the individual Graphics Processing Units (GPUs) on each server board.

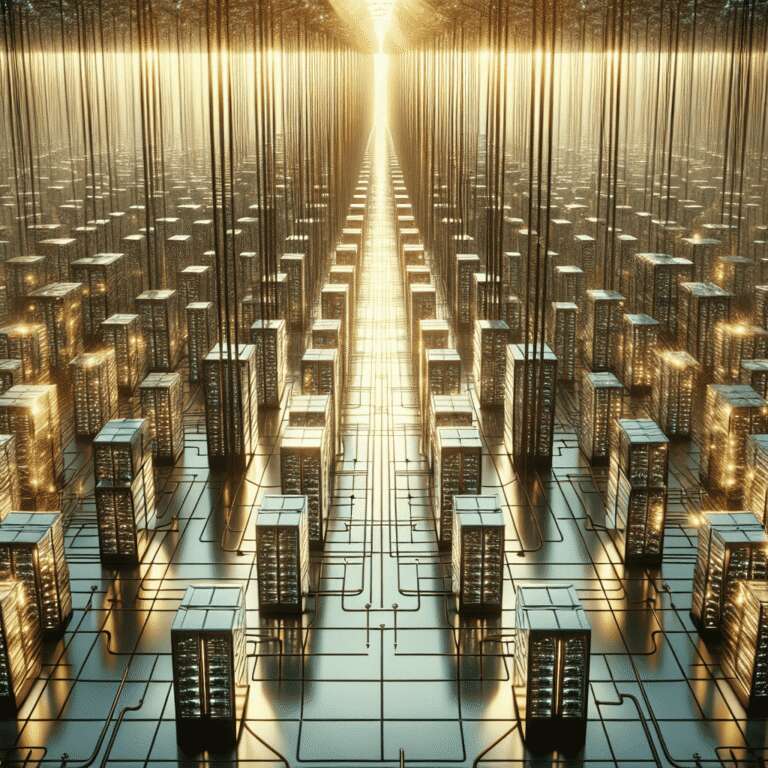

Infineon's expertise in power conversion, spanning the key semiconductor materials silicon (Si), silicon carbide (SiC), and gallium nitride (GaN), is accelerating progress toward a full-scale HVDC architecture. The timing matters because AI data centers are scaling rapidly, with facilities now exceeding 100,000 individual GPUs, and they require increasingly efficient and reliable power delivery. Combined with high-density multiphase solutions, the HVDC system is designed to meet rising power demand, which is expected to exceed one megawatt (MW) per IT rack before the end of the decade.
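The efficiency case for raising the distribution voltage comes down to basic circuit arithmetic: for a fixed load, the current falls as I = P / V, and resistive conduction loss scales as I²R. The short Python sketch below applies this to the 1 MW-per-rack and 800 V figures from the text. The 54 V DC baseline, the function names, and the assumption of identical conductor resistance in both cases are illustrative choices made here, not details from Infineon or NVIDIA.

```python
"""Rough current and conduction-loss comparison for a rack-level DC feed.

Illustrative assumptions (not from Infineon or NVIDIA):
- a hypothetical 54 V DC busbar serves as the low-voltage baseline
- conductor resistance is held equal in both cases
"""

RACK_POWER_W = 1_000_000.0  # 1 MW per IT rack (figure from the text)
HVDC_V = 800.0              # 800 V HVDC (figure from the text)
LOW_V_BASELINE = 54.0       # hypothetical low-voltage baseline


def feed_current(power_w: float, voltage_v: float) -> float:
    """Current drawn by a DC load: I = P / V."""
    return power_w / voltage_v


def conduction_loss(current_a: float, resistance_ohm: float) -> float:
    """Resistive (I^2 R) loss dissipated in a conductor."""
    return current_a ** 2 * resistance_ohm


def loss_ratio(v_low: float, v_high: float) -> float:
    """For fixed P and R, loss scales as (P / V)^2 * R, so the
    low-voltage loss divided by the high-voltage loss is (v_high / v_low)^2."""
    return (v_high / v_low) ** 2


if __name__ == "__main__":
    i_hvdc = feed_current(RACK_POWER_W, HVDC_V)
    i_low = feed_current(RACK_POWER_W, LOW_V_BASELINE)
    print(f"Current at {HVDC_V:.0f} V: {i_hvdc:,.0f} A")
    print(f"Current at {LOW_V_BASELINE:.0f} V: {i_low:,.0f} A")
    print(f"I^2R loss reduction at equal R: {loss_ratio(LOW_V_BASELINE, HVDC_V):.0f}x")
```

Run as a script, this prints 1,250 A at 800 V versus 18,519 A at the assumed 54 V, a roughly 219x reduction in conduction loss for the same conductor. Real designs also change conductor sizing and the number of conversion stages, so the sketch shows only the scaling trend, not a measured efficiency figure.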

The partnership is a key step toward deploying power delivery architectures tailored to accelerated computing workloads. By aiming to set a new industry standard for high-density power distribution and component quality, Infineon and NVIDIA are positioning themselves at the forefront of data center infrastructure. The companies expect the resulting gains in reliability and energy efficiency to support the continued growth and rising technical demands of AI applications worldwide.