At the 2025 IEEE International Electron Devices Meeting (IEDM), Imec presented the first thermal system-technology co-optimization (STCO) study of 3D HBM-on-GPU (high-bandwidth memory stacked on a graphics processing unit). By combining technology-level and system-level mitigation strategies, the study shows that peak GPU temperatures could be reduced from 140.7°C to 70.8°C under realistic artificial intelligence (AI) training workloads, a result the researchers say is on par with current 2.5D integration options. The paper highlights the value of cross-layer optimization (i.e., co-optimizing the design knobs at every abstraction layer) backed by broad technological expertise, a combination the organisation describes as unique to Imec.

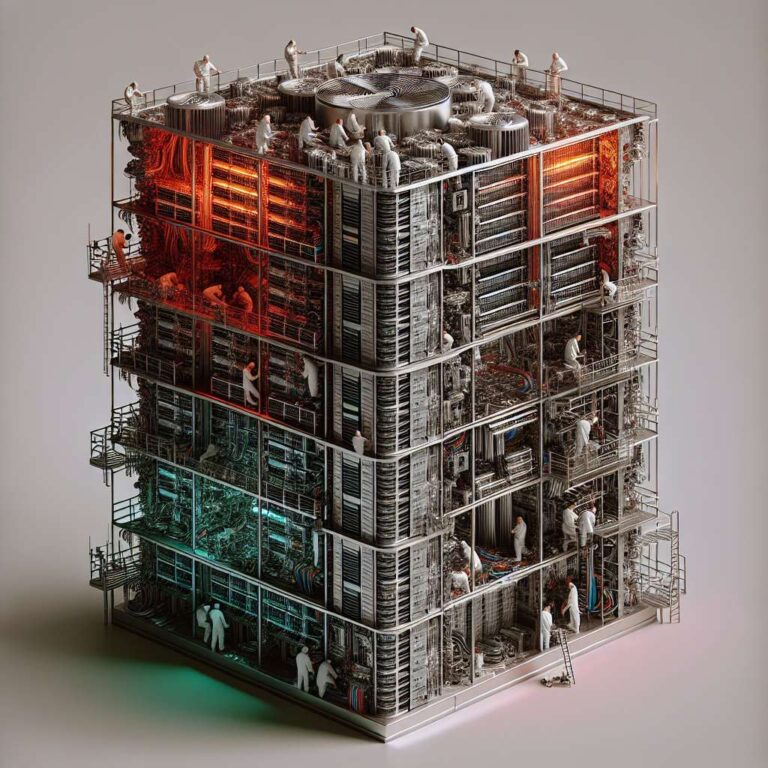

Integrating high-bandwidth memory stacks directly on top of graphics processing units is seen as an attractive architecture for next-generation compute systems targeting data-intensive AI workloads. Compared with current 2.5D integration, where HBM stacks are placed around one or two GPUs on a silicon interposer, the 3D HBM-on-GPU approach promises a large increase in compute density, with four GPUs per package, as well as more memory per GPU and higher GPU-memory bandwidth. The study frames these gains as significant for scaling performance and density in future accelerator designs.

At the same time, the paper emphasises the thermal challenges that aggressive 3D integration introduces. Stacking memory on the GPU raises local power density and adds vertical thermal resistance in the heat-removal path, making the stacked approach prone to overheating. The STCO methodology addresses this by combining system-level mitigations with technology choices. The reported reduction in peak GPU temperature from 140.7°C to 70.8°C under realistic training workloads demonstrates that coordinated, cross-layer interventions can bring the thermal behaviour of 3D HBM-on-GPU in line with established 2.5D solutions.
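Why added vertical thermal resistance matters can be illustrated with a lumped one-dimensional model: at steady state, the temperature rise above the coolant equals the dissipated power times the total series thermal resistance, ΔT = P × ΣR_th. The Python sketch that follows is an illustrative assumption, not the model used in the Imec study. The function name `junction_temperature`, the layer breakdown, and every resistance, power, and coolant value are hypothetical, and the model ignores lateral heat spreading and the HBM stack's own power dissipation.

```python
# Minimal lumped 1D thermal model (illustrative assumption, NOT Imec's model).
# Heat from the GPU flows through layers in series to the coolant, so
# T = T_coolant + P * sum(R_th). All numbers below are hypothetical placeholders.


def junction_temperature(power_w, resistances_k_per_w, t_coolant_c):
    """Steady-state temperature for a heat source behind series thermal resistances."""
    return t_coolant_c + power_w * sum(resistances_k_per_w)


# Hypothetical 2.5D case: the GPU die sits directly under the thermal stack.
r_2_5d = [0.02, 0.03]  # K/W: thermal interface material, heat sink (hypothetical)

# Hypothetical 3D case: an HBM stack sits in the heat path, adding its own
# vertical thermal resistance in series with the rest.
r_3d = [0.05, 0.02, 0.03]  # K/W: HBM stack + bonding, TIM, heat sink (hypothetical)

power = 700.0   # W, hypothetical GPU power
coolant = 30.0  # °C, hypothetical coolant temperature

t_2_5d = junction_temperature(power, r_2_5d, coolant)
t_3d = junction_temperature(power, r_3d, coolant)

print(f"2.5D: {t_2_5d:.1f} °C, 3D: {t_3d:.1f} °C")
```

With these made-up numbers, placing the memory stack in the heat path doubles the total resistance and therefore the temperature rise. In this simplified picture, any mitigation has to lower the power term, the resistance term, or the coolant temperature; the specific measures combined in the study are not modelled here.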